Learning analytics: Difference between revisions

m (→Introduction) |

|||

| (265 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

{{incomplete}} | {{incomplete}} | ||

<pageby nominor="false" comments="false"/> | <!-- <pageby nominor="false" comments="false"/> --> | ||

== Introduction == | == Introduction == | ||

One could define learning analytics as collection of methods that allow teachers and maybe the learners to understand '''what is going on'''. I.e. all sorts of tools that allow to gain insight on participant's | This piece is a "note taking" article. It may include some ideas and models of interest but would require much work to make it complete. It has not been revised since 2014. - [[User:Daniel K. Schneider|Daniel K. Schneider]] ([[User talk:Daniel K. Schneider|talk]]) 17:05, 29 March 2017 (CEST) | ||

One could define '''learning analytics''' as a collection of methods that allow teachers and maybe the learners to understand '''what is going on''', i.e. all sorts of data collection, transformation, analysis and visualization tools that allow one to gain insight into participants' behaviors and productions (including discussion and reflections). The learning analytics community in their call for the [https://tekri.athabascau.ca/analytics/ 1st International Conference on learning analytics] provides a more ambitious definition: {{quotation|Learning analytics is the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimising learning and the environments in which it occurs}}. This definition includes a clearly [[transformative pedagogy|transformative]] perspective. | |||

'''Educational data mining''' is a field that has similar interests, but that focuses more on research. In addition, favorite data gathering, aggregation/clustering, analysis and visualization techniques differ a bit. | |||

This EduTechWiki article will particularly focus on analytics for [[project-oriented learning]] such as the [[Knowledge-building community model]] or [[Inquiry-based learning]]. We have the impression that learning analytics is mostly thought of as something that could be used to improve low-quality education, e.g. main-stream [[e-learning]], and not as a [[cognitive tool]] designed to improve activity-based learning designs. Although we will try to cover this area as a whole, please follow the links or the literature (both below) if you are interested in tracking reading and quizzing activities or in improving your school or the educational system. An additional reason for looking at other introductions and discussions is that we have a bias against what we call "bureaucratization through data graveyarding". While we do acknowledge the usefulness of all sorts of analytics, we must make sure that it will not replace [[design science|design]] and communication. - [[User:Daniel K. Schneider|Daniel K. Schneider]] 23:00, 11 April 2012 (CEST) | |||

See also: | See also: | ||

* [[Educational data mining]] | |||

* [[Learning process analytics]] (variant of a conference article) | |||

* [[Wiki metrics, rubrics and collaboration tools]] | * [[Wiki metrics, rubrics and collaboration tools]] | ||

* Other articles in the [[:Category:Analytics|Analytics]] category | * Other articles in the [[:Category:Analytics|Analytics]] category | ||

* [[Visualization]] and [[social network analysis]] | * [[Visualization]] and [[social network analysis]] | ||

* [[:Category: Knowledge and idea management|knowledge and idea management]] | |||

The Society for Learning Analytics Research [http://solaresearch.org/OpenLearningAnalytics.pdf Open Learning Analytics] (2011) proposal associates learning analytics with the kind of "big data" that are used in business intelligence: | The Society for Learning Analytics Research [http://solaresearch.org/OpenLearningAnalytics.pdf Open Learning Analytics] (2011) proposal associates learning analytics with the kind of "big data" that are used in business intelligence: | ||

| Line 20: | Line 29: | ||

}} | }} | ||

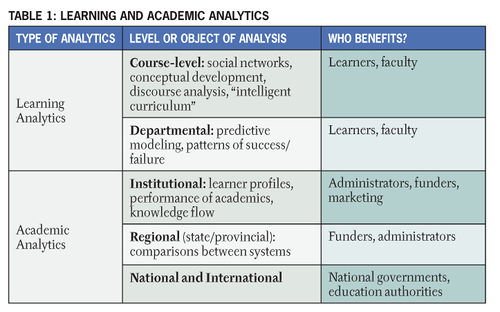

[[image:ERM1151_table.jpg|thumb| | [[image:ERM1151_table.jpg|thumb|500px|none|Learning and Academic Analytics. © 2011 George Siemens and Phil Long, EDUCAUSE Review, vol. 46, no. 5 (September/October 2011). Licensed under the [http://creativecommons.org/licenses/by-nc/3.0/ Creative Commons Attribution-NonCommercial 3.0 License] ]] | ||

In other words, learning analytics concerns everyone involved in teaching and learning, e.g. learners themselves, teachers, course designers, course evaluators, etc. Learning analytics is seen {{quotation|LA as a means to provide stakeholders (learners, educators, administrators, and funders) with better information and deep insight into the factors within the learning process that contribute to learner success. Analytics serve to guide decision making about educational reform and learner-level intervention for at-risk students.}} (Siemens et al. 2011: 5) | In other words, learning analytics concerns everyone involved in teaching and learning, e.g. learners themselves, teachers, course designers, course evaluators, etc. Learning analytics is seen {{quotation|LA as a means to provide stakeholders (learners, educators, administrators, and funders) with better information and deep insight into the factors within the learning process that contribute to learner success. Analytics serve to guide decision making about educational reform and learner-level intervention for at-risk students.}} (Siemens et al. 2011: 5) | ||

This definition by the Society for Learning Analytics Research and the US Dept. of Education report that we shall discuss below are political and, like many other constructs in the education sciences, "learning analytics" promises better education. We therefore conclude the introduction with the impression that learning analytics can be seen either (1) as tools that should be integrated into the learning environment and scenario with respect to specific teaching goals or (2) as a more general and bigger "framework" for doing "education intelligence". The latter also could include the former, but not necessarily so. | |||

We therefore distinguish three kinds of learning analytics: | |||

* Small scale learning analytics, which is just another name for "awareness tools", "learning cockpits" and so forth, and which has a long tradition in educational technology | |||

* Mid scale analytics whose primary function is to help improve courses and to detect and assist students with difficulties | |||

* Large scale analytics (also called academic analytics) whose function is to improve institutions or even whole educational systems. | |||

== Approaches, fields and conceptual frameworks == | |||

* Grades | This chapter attempts to identify various strands of research and development and to discuss some of these in more detail. | ||

* [[learning e-portfolio]]s, i.e. students assemble productions and reflect upon these (used quite a lot in professional education) | |||

* Tracking tools in [[learning management system]]s | === Approaches to learning analytics and educational data mining === | ||

Some kinds of learning analytics and educational data mining have been known and used for as long as education has existed. Examples are: | |||

* Grades and test scores. | |||

* Student and teacher surveys of various kinds, e.g. course assessment or exit questionnaires. | |||

* [[learning e-portfolio]]s, i.e. students assemble productions and reflect upon these (used quite a lot in professional education). | |||

* Tracking tools in [[learning management system]]s. | |||

* Cockpits and [[scaffolding]] used in many [[CSCL]] tools. | * Cockpits and [[scaffolding]] used in many [[CSCL]] tools. | ||

* Student modeling in [[artificial intelligence and education]]. | |||

* On a more individual basis analytics is related to [[Infotention]] and other knowledge/idea management concepts and tools. | |||

Modern '''learning analytics''' (i.e. that is labelled as such) probably initially drew a lot of ideas from industry and its various attempts to deal with information, such as [http://en.wikipedia.org/wiki/Business_intelligence business intelligence], [http://en.wikipedia.org/wiki/Quality_management Quality management], [http://en.wikipedia.org/wiki/Enterprise_resource_planning Enterprise resource planning] (ERP), [http://en.wikipedia.org/wiki/Balanced_scorecard Balanced score cards], [http://en.wikipedia.org/wiki/Marketing_research marketing research] and [http://en.wikipedia.org/wiki/Web_analytics Web analytics]. The common idea is to extract [http://en.wikipedia.org/wiki/Key_performance_indicators Key performance indicators] from both structured and unstructured data and to improve decision making. | |||

A related second important influence was a political will to articulate and measure quality standards in education, leading to a culture of quantitative assessment (Oliver and Whelan, 2011; Hrabowski, Suess et al. 2011). | |||

A third influence was and increasingly is prior research. According to Baker (2013), {{quotation|Since the 1960s, methods for extracting useful information from large data sets, termed analytics or data mining, have played a key role in fields such as physics and biology. In the last few years, the same trend has emerged in educational research and practice, an area termed learning analytics (LA; Ferguson, 2012) or educational data mining (EDM; Baker & Yacef, 2009). In brief, these two research areas seek to find ways to make beneficial use of the increasing amounts of data available about learners in order to better understand the processes of learning and the social and motivational factors surrounding learning.}} ([http://www.centeril.org/handbook/ Learning, Schooling, and Data Analytics], retrieved March 2014). | |||

One difference between various approaches to learning analytics (in a wide sense) concerns the locus of data collection and data analysis, and how big a scale (number of students, activities and time) it is done on. In [[radical constructivism|radical constructivist]] thought, students should be self-regulators and the role of the system is just to provide enough tools for them, as individuals or groups, to understand what is going on. In more teacher-oriented [[socio-constructivism]], the teachers, in addition, need indicators in order to monitor orchestrations (activities in [[pedagogical scenario]]s). In other designs such as [[direct instruction]], the system should provide detailed performance data that are rather based on quantitative test data. Finally, powers above like schools or the educational systems would like to monitor various aspects. | |||

This proposal addresses the need for integrated toolsets through the development of four specific tools and resources: | [[Educational data mining]] (EDM) could be described as a research area that focuses more on data retrieval and analysis. It mainly targeted educational research using and developing all sorts of technology, e.g. information retrieval, clustering, classification, sequence mining, text mining, visualization, and social network analysis. Today, EDM also can be seen as a "helper discipline" for more sophisticated learning analytics approaches. | ||

According to Baker & Yacef (2009) {{quotation|Educational Data Mining researchers study a variety of areas, including individual learning from educational software, computer supported collaborative learning, computer-adaptive testing (and testing more broadly), and the factors that are associated with student failure or non-retention in courses.}}. Key areas are: | |||

* Improvement of student models, i.e. models that are typically used in [[artificial intelligence and education]] systems such as [[intelligent tutoring system]]s. | |||

* Discovering or improving models of a domain’s knowledge structure | |||

* Studying pedagogical support (both in learning software, and in other domains, such as collaborative learning behaviors), towards discovering which types of pedagogical support are most effective. | |||

* Empirical evidence to refine and extend educational theories and well-known educational phenomena, towards gaining deeper understanding of the key factors impacting learning, often with a view to designing better learning systems. | |||

This overview paper also points out that relationship mining was dominant between 1995 and 2005, whereas prediction moved to the dominant position in 2008-2009. In addition, the authors noted an increase of data coming from the instrumentation of existing online courses as opposed to harvesting the researcher's-as-teacher's own data. | |||

Fournier et al. (2011) quote [http://campustechnology.com/newsletters/ctfocus/2010/10/collaboration_analytics_and-the-lms_a-conversation-with-stephen-downes.aspx Downes] (2010) {{quotation|There are different tools for measuring learning engagement, and most of them are quantificational. The obvious ones [measure] page access, time-on-task, successful submission of question results – things like that. Those are suitable for a basic level of assessment. You can ''tell whether students are actually doing something. That’s important in certain circumstances. But to think that constitutes analytics in any meaningful sense would be a gross oversimplification''. [...] There is a whole set of approaches having to do with content analysis. The idea is to look at contributions in discussion forums, and to analyze the kind of contribution. Was it descriptive? Was it on-topic? Was it evaluative? Did it pose a question?}}. For the latter kind (btw. not just forums but all sorts of student productions), we do need text mining technology. In addition, Fournier et al. point out that data mining technologies may have to be combined with semi-manual qualitative analysis, in particular [[social networking]] analysis. | |||
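The basic, quantitative engagement measures mentioned in the quote (page access, time-on-task) can be illustrated with a minimal Python sketch. The log format, the student names and the idle cap of 600 seconds are assumptions made for this illustration only; they do not come from any of the cited sources or from a particular learning management system.

```python
from collections import defaultdict

# Hypothetical LMS log: (student, page, seconds since course start).
log = [
    ("ann", "intro", 0), ("ann", "quiz1", 120), ("ann", "forum", 300),
    ("bob", "intro", 50), ("bob", "intro", 4000),
]

IDLE_CAP = 600  # assumed: a gap longer than this counts as a break, not as work


def engagement(log, idle_cap=IDLE_CAP):
    """Return page accesses and a rough time-on-task estimate per student."""
    times_by_student = defaultdict(list)
    for student, _page, timestamp in log:
        times_by_student[student].append(timestamp)

    result = {}
    for student, times in times_by_student.items():
        times.sort()
        # Time between consecutive page accesses of the same student.
        gaps = [later - earlier for earlier, later in zip(times, times[1:])]
        result[student] = {
            "page_accesses": len(times),
            "time_on_task": sum(gap for gap in gaps if gap <= idle_cap),
        }
    return result


stats = engagement(log)
```

Such counts only tell whether students are doing something; they say nothing about the kind or quality of a contribution, which is the point made in the quote.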

=== The SoLAR framework === | |||

The proposal from the [http://www.solaresearch.org/ Society for Learning Analytics Research (SoLAR)] addresses the need for integrated toolsets through the development of four specific tools and resources: | |||

# A Learning analytics engine, a versatile framework for collecting and processing data with various analysis modules (depending on the environment) | # A Learning analytics engine, a versatile framework for collecting and processing data with various analysis modules (depending on the environment) | ||

| Line 48: | Line 85: | ||

'''A short discussion''' | '''A short discussion''' | ||

The proposal starts somehow with the assumption that education continues to use rather simple tools like courseware or somewhat now popular web 2.0 tools like [[personal learning environment]]s. In other words, the '''fog''' is | The proposal starts somehow with the assumption that education continues to use rather simple tools like courseware or somewhat now popular web 2.0 tools like [[personal learning environment]]s. In other words, the '''fog''' is identified as the problem and not the way education is designed. I.e. this proposal focuses on "intelligence" as opposed to "design". If you like, "learning design" could become more like a "General Motors" approach (marketing-oriented) than an "Apple" one (design-oriented). Related to that we also can identify the general assumption that "metrics" work, while in reality test-crazy systems like the US high-school education have a '''much''' lower performance than design-based systems like the Finnish one. We are not really familiar with the long history of business intelligence and management information systems, but we are familiar with the most prominent critique, i.e. that such systems put a distance between decision makers and staff, kill communication lines and inhibit managers from thinking. | ||

The project proposes a general framework based on modern [[service-oriented architecture]]s. So far, all attempts to use such architectures in education have failed, probably because of the lack of substantial long-term funding, e.g. see the [[e-framework]] project. We also wonder a bit how such funding could be found, since not even the really simple [[IMS Learning Design]] has been implemented in a usable set of tools. In addition, even simpler stuff, like simple and useful wiki tracking, is not available, e.g. read [[wiki metrics, rubrics and collaboration tools]]. | |||

We would like to draw parallels with (1) the [[metadata]] community that spent a lot of time designing standards for describing documents instead of working on how to create more expressive documents and understanding how people compose documents, (2) with business that spends energy on marketing and related business intelligence instead of designing better products, (3) with folks who believe in adaptive systems, forgetting that learner control is central to deep learning and that most higher education is collaborative, (4) with the utter failure of [[intelligent tutoring system]]s trying to give control to the machine and (5) finally with the illusion of [[learning style]]. These negative remarks are not meant to say that this project should or must fail, but they are meant to state two things: The #1 issue in education is not analytics, but designing good learning scenarios within appropriate learning environments (most are not). The #2 issue is massive long-term funding. Such a system won't work before at least 50 man-years over a 10-year period have been spent. | |||

It is also somewhat assumed that teachers don't know what is going on and that learners can't keep track of their progress or, more precisely, that teachers can't design scenarios that will help both teachers and students know what is going on. We mostly share that assumption, but would like to point out that knowledge tools do exist, e.g. [[knowledge forum]], but these are never used. This also can be said with respect to [[CSCL]] tools that usually include [[scaffolding]] and meta-reflective tools. In other words, this proposal seems to imply that education and students will remain simple, but "enhanced" with both teacher and student cockpits that sort of rely on either fuzzy data mined from social tools (SNSs, forums, etc.) or quantitative data from learning management systems. | |||

Finally, this project raises deep [[privacy]] and innovation issues. Since analytics can be used for assessment, there will be attempts to create life-long scores. In addition, if students are required to play the analytics game in order to improve chances, this will be another blow to [[creativity]]. If educational systems formally adopt analytics, it opens the way for keeping education in line with "main-stream" [[e-learning]], an approach designed for training simple subject matters (basic facts and skills) through reading, quizzing and chatting. This is because analytics will work fairly easily with simple stuff, e.g. scores, lists of buttons clicked, number of blog and forum posts, etc. | |||

This being said, we find the SoLAR project interesting, but under the condition that it should not hamper use of creative learning designs and environments. Standardization should be enabling (e.g. like notation systems in music are) and not reduce choice. | |||

=== US Dept. of education view === | |||

Bienkowski et al. (2012) in their [http://ctl2.sri.com/eframe/wp-content/uploads/2012/04/EDM-LA-Brief-Draft_4_10_12c.pdf Enhancing Teaching and Learning Through Educational Data Mining and Learning Analytics] (draft) prepared for the US Department of Education first define educational data mining. It is {{quotation| emerging as a research area with a suite of computational and psychological methods and research approaches for understanding how students learn. New computer-supported interactive learning methods and tools— intelligent tutoring systems, simulations, games—have opened up opportunities to collect and analyze student data, to discover patterns and trends in those data, and to make new discoveries and test hypotheses about how students learn. Data collected from online learning systems can be aggregated over large numbers of students and can contain many variables that data mining algorithms can explore for model building.}} (p.9, draft). | |||

Learning analytics is defined as data mining plus interpretation and action. {{quotation|Learning analytics draws on a broader array of academic disciplines than educational data mining, incorporating concepts and techniques from information science and sociology in addition to computer science, statistics, psychology, and the learning sciences. [...] Learning analytics emphasizes measurement and data collection as activities that institutions need to undertake and understand and focuses on the analysis and reporting of the data. Unlike educational data mining, learning analytics does not generally address the development of new computational methods for data analysis but instead addresses the application of known methods and models to answer important questions that affect student learning and organizational learning systems.}} (p.12, draft) | |||

'''Discussion''' | |||

We find it ''very'' surprising that the Bienkowski report argues that learning analytics does not address the development of new computational methods when in reality the most interesting learning analytics projects (e.g. Buckingham Shum & Crick, 2012 or Govaerts et al., 2010) do exactly that. In addition, we find that the report seems to be missing one crucial issue: ''Enabling the learner and learner communities''. 20 years of research in educational technology is missing (e.g. [[cognitive tool]]s, [[learning e-portfolio|learning with portfolios]], [[project-oriented learning]] and [[writing-to-learn]]). On the other hand, [[artificial intelligence and education|AI&Ed concepts]] that were given up in the 1990s (and for good reasons) seem to re-emerge without sufficient grounding in contemporary learning theory and first principles of instructional design. Analytics that attempt to improve the learning process through awareness tools are maybe "small scale analytics" and definitely not predictive. | |||

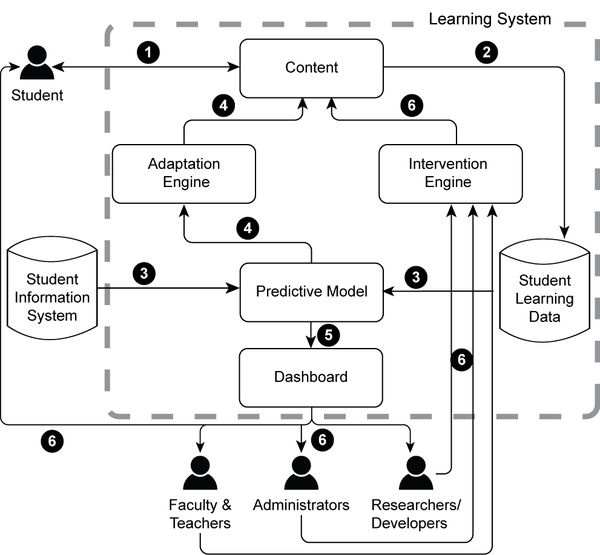

Below is a figure explaining how adaptive learning systems should work. Other scenarios are discussed in the report and we singled this one out, because adaptive learning systems never had any success since they do not fit into either constructivist or direct [[instructional design model]]s. Note the relative absence of the learner. In the picture the student is just directed to look at contents ... | |||

[[image:US-dept-education-2012-draft.png|thumb|600px|none|The Components and Data Flow Through a Typical Adaptive Learning System, Bienkowski et al. 2012 (Draft version)]] | |||

=== The OU Netherlands framework === | |||

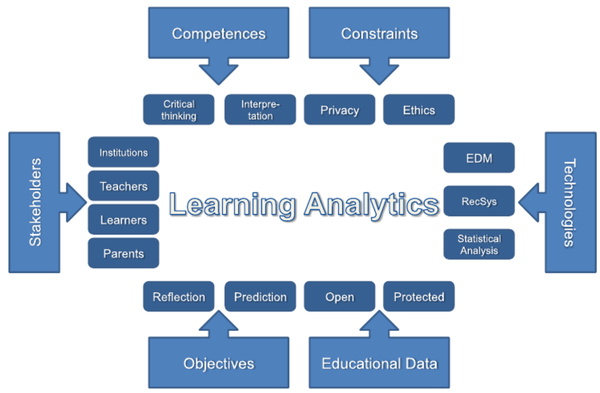

According to the [http://portal.ou.nl/en/web/topic-learning-analytics/home/-/wiki/Main/Learning%20Analytics%20framework Learning Analytics framework] page of the Centre for Learning Sciences and Technologies, Open Universiteit (retrieved April 13 2012), the {{quotation|topic of Learning Analytics is structured in six critical dimensions, shown in the diagramme below. Each of these dimensions has instantiations which are exemplified in the inner circle items. These are only typical examples and not a complete list of possible instantiations.}} | |||

In a presentation called [http://www.slideshare.net/Drachsler/turning-learning-into-numbers-a-learning-analytics-framework Turning Learning into Numbers - A Learning Analytics Framework], Hendrik Drachsler and Wolfgang Greller presented the following global component framework of learning analytics. The framework also was presented in Hendrik Drachsler's [http://www.slideshare.net/Drachsler/recsystel-lecture-at-advanced-siks-course-nl lecture on recommender systems for learning] (April 2012). | |||

[[image:ou-nl-learning-analytics-framework.png|thumb|600px|none|Drachsler / Greller learning analytics framework. Copyright: Drachsler & Greller, Open Universiteit. Reproduced with permission by H. Drachsler]] | |||

Such a framework helps thinking about the various "components" that make up the learning analytics topic. | |||

=== Tanya Elias learning analytics model === | |||

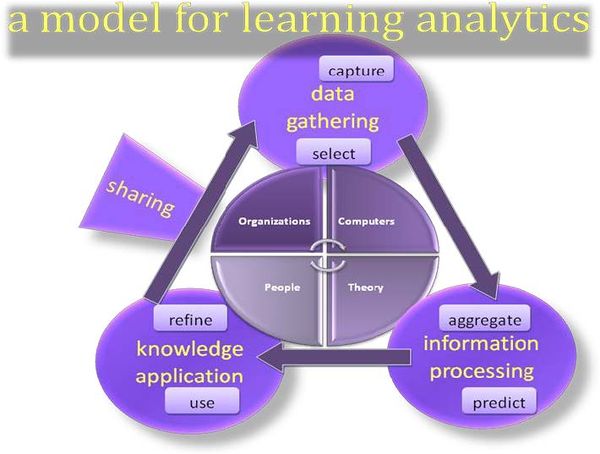

Tanya Elias (2011) in her Learning Analytics: Definitions, Processes and Potential argues that learning analytics can be matched to Baker's (2007) knowledge continuum, which somewhat could be compared to [[learning level]] models, such as the Bloom taxonomy. | |||

* Data - Obtain Raw Facts | |||

* Information - Give Meaning to Obtained Data | |||

* Knowledge - Analyze and Synthesize Derived Information | |||

* Wisdom - Use Knowledge to Establish and Achieve Goals. | |||

In particular, a comparison of ''Five Steps of Analytics'' (Campbell and Oblinger, 2008), ''Web Analytics Objectives'' (Hendricks, Plantz and Pritchard, 2008) and ''Collective Applications Model'' (Dron and Anderson, 2009) leads to seven related ''Processes of Learning Analytics'' as shown in the following table: | |||

== Software == | {| class="wikitable" | ||

|+ Comparison of Analytics frameworks and models (Tanya Elias, 2011) / Layout changed by DKS | |||

! Knowledge Continuum !! Five Steps of Analytics !! Web Analytics Objectives !! Collective Applications Model !! Processes of Learning Analytics (Elias). | |||

|- | |||

| Data || Capture || Define goals<br/>Measure || Select<br/>Capture || Select<br/>Capture | |||

|- | |||

| Information || Report || Measure || Aggregate || Aggregate<br/>Report | |||

|- | |||

| Knowledge || Predict || Measure || Process || Predict | |||

|- | |||

| Wisdom || Act <br/>Refine|| Use <br/>Share|| Display || Use<br/>Refine<br/>Share | |||

|} | |||

According to the author (Elias:11), learning analytics consists of computers, people, theory and organizations. {{quotation| Learning analytics uses of four types of technology resources to complete ongoing pattern of three-phase cycles aimed at the continual improvement of learning and teaching}} (Elias, 2011:17). | |||

[[image:LearningAnalyticsDefinitionsProcessesPotential.jpg|thumb|600px|none|Learning analytics continuous improvement cycle. © Tanya Elias reproduced with permission by T. Elias]] | |||

'''Discussion''' | |||

This model has the merit of explicitly stating that learning analytics is a complex iterative process. In particular, being able to put analytics to good use is not the same as producing reports or predictions. By the way, I wouldn't associate "knowledge" exclusively with ''prediction'' in the overview table. The latter refers to just one kind of academic knowledge. For participants (learners and teachers) it is much more important to create a deep ''understanding'' of what "is going on". Also interesting is the "sharing" part. | |||

In addition, we believe that interpretation of data should be negotiated, i.e. we also would add a sharing tab between "information processing" and "knowledge application". A simple example can illustrate that. A Mediawiki analytics tool such as [[StatMediaWiki]] can show that some students actively use the discussion pages and others do not. It turns out that those who don't can be either weak students or very good ones. The latter meet face to face on a regular basis. In other words, one has to present the data to students and discuss with them. | |||

=== Ferguson and Buckingham Shum's five approaches to social learning analytics === | |||

[http://oro.open.ac.uk/32910 Ferguson and Buckingham Shum (2012)]'s ''Social Learning Analytics: Five Approaches'' defines five dimensions of social learning for which one could create instruments: | |||

{{quotationbox| | |||

* '''social network analytics''' — interpersonal relationships define social platforms | |||

* '''discourse analytics''' — language is a primary tool for knowledge negotiation and construction | |||

* '''content analytics''' — user-generated content is one of the defining characteristics of Web 2.0 | |||

* '''disposition analytics''' — intrinsic motivation to learn is a defining feature of online social media, and lies at the heart of engaged learning, and innovation | |||

* '''context analytics''' — mobile computing is transforming access to both people and content. | |||

}}([http://oro.open.ac.uk/32910 Ferguson and Buckingham Shum, Preprint 2012], retrieved April 10 2012.) | |||

Work on a system is in progress. | |||

=== Technology for supporting Collaborative learning === | |||

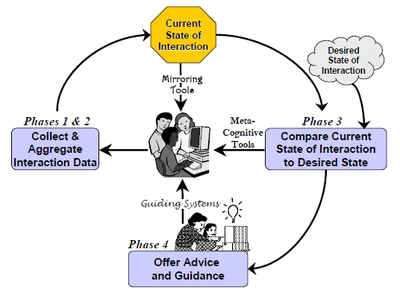

Soller, Martinez, Jermann and Muehlenbrock (2004, 2005:Abstract) develop a '''collaboration management cycle''' framework that distinguishes between mirroring systems, which display basic actions to collaborators, metacognitive tools, which represent the state of interaction via a set of key indicators, and coaching systems, which offer advice based on an interpretation of those indicators. | |||

{{quotation|The framework, or collaboration management cycle is represented by a feedback loop, in which the metacognitive or behavioral change resulting from each cycle is evaluated in the cycle that follows. Such feedback loops can be organized in hierarchies to describe behavior at different levels of granularity (e.g. operations, actions, and activities). The collaboration management cycle is defined by the four following phases}} (Soller et al., 2004:6): | |||

[[image:soller-et-al-collaboration-management-cycle.png|thumb|400px|right|The Collaboration Management Cycle, by A. Soller, A. Martinez, P. Jermann & M. Muehlenbrock (2004). [http://www.cscl-research.com/Dr/ITS2004Workshop/proceedings.pdf ITS 2004 Workshop on Computational Models of Collaborative Learning]]] | |||

* Phase 1: The data collection phase involves observing and recording the interaction of users. Data are logged and stored for later processing. | |||

* Phase 2: Higher-level variables, termed indicators, are computed to represent the current state of interaction. For example, an agreement indicator might be derived by comparing the problem solving actions of two or more students, or a symmetry indicator might result from a comparison of participation indicators. | |||

* Phase 3: The current state of interaction can then be compared to a desired model of interaction, i.e. a set of indicator values that describe productive and unproductive interaction states. {{quotation|For instance, we might want learners to be verbose (i.e. to attain a high value on a verbosity indicator), to interact frequently (i.e. maintain a high value on a reciprocity indicator), and participate equally (i.e. to minimize the value on an asymmetry indicator).}} (p. 7). | |||

* Phase 4: Finally, remedial actions might be proposed by the system if there are discrepancies. | |||

* Soller et al. (2005:) add a phase 5: {{quotation|After exiting Phase 4, but before re-entering Phase 1 of the following collaboration management cycle, we pass through the evaluation phase. Here, we reconsider the question, “What is the final objective?”, and assess how well we have met our goals}}. In other words, the "system" is analysed as a whole. | |||

Depending on the locus and amount of computer-support, the authors then identify three kinds of systems: | |||

* '''Mirroring tools''' automatically collect and aggregate data about the students’ interaction (phases 1 and 2 in Figure 1), and reflect this information back to the user with some kind of visualization. Locus of processing thus remains in the hands of learners or teachers. | |||

* '''Metacognitive tools''' display information about what the desired interaction might look like alongside a visualization of the current state of indicators (phases 1, 2 and 3 in the Figure), i.e. offer some additional insight to both learners and teachers. | |||

* '''Guiding systems''' perform all the phases in the collaboration management process and directly propose remedial actions to the learners. Details of the current state of interaction and the desired model may remain hidden. | |||
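The four phases and the three kinds of systems can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the event log, the participation and asymmetry indicators as computed here, the threshold of 0.3 and the wording of the remedial action are all hypothetical and are not taken from Soller et al.

```python
from collections import Counter

# Phase 1: logged interaction events as (learner, action) pairs (hypothetical data).
events = [
    ("ann", "post"), ("ann", "post"), ("ann", "reply"), ("ann", "post"),
    ("bob", "reply"),
    ("cem", "post"), ("cem", "reply"), ("cem", "post"),
]

DESIRED_MAX_ASYMMETRY = 0.3  # assumed "desired model": participation roughly equal


def participation(events):
    """Phase 2: share of all logged actions contributed by each learner."""
    counts = Counter(learner for learner, _action in events)
    total = sum(counts.values())
    return {learner: n / total for learner, n in counts.items()}


def asymmetry(shares):
    """Phase 2: gap between the most and the least active learner (0 = equal)."""
    return max(shares.values()) - min(shares.values())


def matches_desired_model(value, desired_max):
    """Phase 3: compare the current state with the desired model of interaction."""
    return value <= desired_max


def remedial_action(shares):
    """Phase 4: propose an action aimed at the least active learner."""
    quietest = min(shares, key=shares.get)
    return f"Invite {quietest} to contribute"


shares = participation(events)
gap = asymmetry(shares)

mirroring_output = shares                            # phases 1-2: show the data
metacognitive_output = (gap, DESIRED_MAX_ASYMMETRY)  # phases 1-3: current vs. desired
guiding_output = (                                   # phases 1-4: propose an action
    None if matches_desired_model(gap, DESIRED_MAX_ASYMMETRY)
    else remedial_action(shares)
)
```

The three output variables differ only in how much of the cycle the system performs before handing control back to learners or teachers.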

Let's now examine an example: [http://oro.open.ac.uk/25829/1/DeLiddo-LAK2011.pdf Anna De Liddo et al. (2011:4)] present research that uses a structured argumentation tool - the Cohere web application - as a research vehicle. {{quotation|Following the approach of structured deliberation/argument mapping, Cohere renders annotations on the web, or a discussion, as a network of rhetorical moves: users must reflect on, and make explicit, the nature of their contribution to a discussion.}} Since the tool allows participants (1) to tag a post with a rhetorical role and (2) to link posts with other posts or with participants, the following learning analytics can be gained per learner and/or per group (idem: 6):

* Learners’ attention: what do learners focus on? What problems and questions they raised, what comments they made, what viewpoints they expressed etc. | |||

* Learners’ rhetorical attitude to discourse contributions: With what and with whom does a learner agree or disagree? What ideas does he support? What data does he question?

* Learning topics distribution: What are the hottest learning topics, and by whom have they been proposed and discussed?

* learners’ social interactions: How do learners act within a discussion group? What are the relationships between learners? | |||

From that data, higher-level constructs can be derived, such as

* analyzing learner's attention | |||

* learner's moves, e.g. learner's rhetorical roles, ability to compare, or ability to broker information (between others) | |||

* topics distribution, e.g. most central concepts in a group discussion | |||

* social interactions, e.g. learners with the highest centrality
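As an illustration of the last item, the following minimal sketch computes degree centrality from a list of links between participants. The link data and the function name are invented for this example; they do not reflect how Cohere stores or analyses its data.

```python
from collections import defaultdict


def degree_centrality(links):
    """Return, for each participant, the share of other participants they are linked to.

    `links` is a list of (source, target) pairs, e.g. "ann replied to bob".
    Direction is ignored and self-links are skipped.
    """
    neighbours = defaultdict(set)
    for source, target in links:
        if source != target:
            neighbours[source].add(target)
            neighbours[target].add(source)
    n = len(neighbours)
    if n < 2:
        return {person: 0.0 for person in neighbours}
    return {person: len(others) / (n - 1) for person, others in neighbours.items()}


# Hypothetical discussion: three learners respond to bob, and ann also responds to cat.
links = [("ann", "bob"), ("cat", "bob"), ("dan", "bob"), ("ann", "cat")]
centrality = degree_centrality(links)
most_central = max(centrality, key=centrality.get)
print(most_central, centrality)
```

Degree centrality is only the simplest of several centrality measures; dedicated [[social network analysis]] tools also offer betweenness or closeness measures.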

== Technology overview == | |||

Since "Analytics" is a term that can include any kind of purpose as well as any kind of activity to observe and analyze, the set of technologies is rather huge and is being developed in very different contexts. Recent interest in learning analytics seems to be sparked by the growing importance of on-line marketing (including [[search engine optimization]]) as well as [[User experience and user experience design|user experience design]] of e-commerce sites. However, as we tried to show in our short discussion of CSCL technology, mining student "traces" has a longer tradition.

The categories we describe below overlap to a certain extent. | |||

The technical side of analytics includes:

* Creating data for mining (optional) either automatically or manually by the users | |||

* Collecting data from many sources, e.g. log files or web contents | |||

* Aggregating, clustering, etc. | |||

* All sorts of relational analysis | |||

* Visualization of results (and raw data aggregations) | |||

=== Data mining === | |||

Romero & Ventura (2007), as cited in Baker & Yacef (2009), identify the following types of educational data mining:

# Statistics and visualization | |||

# Web mining | |||

## Clustering, classification, and outlier detection | |||

## Association rule mining and sequential pattern mining | |||

## Text mining | |||

Baker & Yacef (2009) then summarize a new typology defined in Baker (2010): | |||

# Prediction | |||

#* Classification | |||

#* Regression | |||

#* Density estimation | |||

# Clustering | |||

# Relationship mining | |||

#* Association rule mining | |||

#* Correlation mining | |||

#* Sequential pattern mining | |||

#* Causal data mining | |||

# Distillation of data for human judgment | |||

# Discovery with models | |||

Data mining relies on several types of sources: | |||

* Log files (if you have access) | |||

* Analytics databases filled with data from client-side JavaScript code (user actions such as entering a page can be recorded and the user can be traced through cookies) | |||

* Web page contents | |||

* Database contents

* Productions (other than website), e.g. word processing documents | |||

* ... | |||

Types of analytics that can be obtained | |||

* quality of text | |||

* richness of content | |||

* content (with respect to some benchmark text) | |||

* similarity of content (among productions) | |||

* etc. (this list needs to be completed) | |||
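As a small illustration of "similarity of content (among productions)", the sketch below compares student texts pairwise with the Jaccard index on word sets. This is just one simple measure chosen for the example; the sample texts and function names are invented, and real text mining tools use more robust techniques (e.g. [[latent semantic analysis and indexing]]).

```python
import re
from itertools import combinations


def tokens(text):
    """Lower-case a text and return its set of alphanumeric word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a, b):
    """Jaccard index of two texts: shared words divided by all distinct words."""
    ta, tb = tokens(a), tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def pairwise_similarity(productions):
    """Map each pair of author names to the similarity of their productions."""
    return {
        (x, y): jaccard(productions[x], productions[y])
        for x, y in combinations(sorted(productions), 2)
    }


# Invented student productions.
productions = {
    "ann": "Wikis support collaborative writing",
    "bob": "Wikis support collaborative learning",
    "cat": "Quizzes test recall",
}
similarity = pairwise_similarity(productions)
for pair, score in similarity.items():
    print(pair, round(score, 2))
```

The same function could be used for "content with respect to some benchmark text" by comparing each production with a reference text instead of with the other productions.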

=== Trace analysis === | |||

Probably very close to data mining, but a slightly distinct community. In earlier publications, in particular in the AI&Ed tradition, papers did not use the term ''analytics'' but referred to "traces" and "student modeling". E.g. see the publications from the [http://liris.cnrs.fr/equipes?id=44 SILEX group] at LIRIS (University of Lyon), e.g. Settouti (2009). These systems collect data from specially crafted log files (or databases) that belong to the application.

(to be expanded...) | |||

=== Client-side analytics ===

By client-side analytics we mean tools like [[user-side JavaScript]] programs that add functionality to a web browser. E.g. [[SNAPP]] is a JavaScript bookmarklet that can be used to conduct a social network analysis of a forum page, i.e. its discussion threads.

A similar technique would be the Firefox [https://addons.mozilla.org/en-US/firefox/addon/748 Greasemonkey] add-on. When last checked, we didn't find any SNAPP-like tools, but a few dozen scripts that will modify or enhance Google Analytics, e.g. filter out referrers from social media sites. | |||

A different technique (which can't be called client-side analytics) concerns web widgets that owners of web pages can insert. These then can collect data and send it to a server. A typical example is the little "Share" widget to the left that uses the well known [http://www.addthis.com/ addThis] service. For example, one can imagine that teachers could require students of a class to share all visited pages on a common service. | |||

=== Special purpose built-in logging/analytics === | |||

Much educational software has built-in tools for collecting data. Data collected is principled, i.e. designed to be used either in education or research or both. Logging of user actions has two purposes: | |||

# Research in [[educational technology]] and related fields about learning, research in related fields that use education for data (e.g. computer science). | |||

# Most systems also provide data to the learner and the teacher in order to enhance learning and teaching processes. | |||

* [[Learning management system]]s usually implement [[IMS Content Packaging]] and [[SCORM 1.2]] tracking, i.e. show individual or class statistics about pages consulted (IMS CP) and quizzing performance (SCORM). In addition, they may track student paths through their own "organizations".

* Educational workflow systems such as [[LAMS]] (Dalziel, 2003) make it possible to monitor student progress with respect to activity completion, time spent on task, etc. LAMS can also export student productions as a portfolio, which makes it possible to look at students' productions and performance within various tools.

* [[Intelligent tutoring system]]s, gone out of favor in the 1990's but still alive, include analytics by definition. However, their purpose is to enhance the individual learner's process by various means, e.g. diagnosing and adapting.

* In principle, most systems (initially) developed through research grants include data logging, and the logged data can then be examined with various analysis techniques. A good example is [[Collaborative Face to Face Educational Environment| CoFFEE]]

== Software and systems == | |||

Notice: This section is partly obsolete and will be replaced by: | |||

* [[Portal: Data mining and learning analytics tools]] | |||

: [[User:Daniel K. Schneider|Daniel K. Schneider]] ([[User talk:Daniel K. Schneider|talk]]) 17:14, 16 March 2014 (CET) | |||

According to [http://en.wikipedia.org/wiki/Learning_analytics Wikipedia], retrieved 14:06, 2 March 2012 (CET). {{quotation|Much of the software that is currently used for learning analytics duplicates functionality of web analytics software, but applies it to learner interactions with content. [[Social network analysis]] tools are commonly used to map social connections and discussions}}

None of the software listed below comes even close to the goals of the SoLAR framework. We can roughly distinguish between software that gathers (and optionally displays) data in very specific applications, software that mines the web and finally general purpose analysis/visualization software.

Ali, Hatala, Gašević & Jovanović (2011), Baker & Yacef (2009) and Asadi, Jovanović, Gašević & Hatala (2011) provide overviews of various learning analytics tools. We started looking at some of these systems. Some are not available and most others only seem to work within very specific environments, although they may have been designed in a more general spirit. From what we understand right now (that is little), there currently exist very few interesting tools that could be used by teachers (or even somewhat tech-savvy educational technologists). In particular, ready-to-use tools for innovative learning designs that engage students in various forms of writing seem to be almost totally missing. E.g. most creative teachers use wikis in one way or another and indeed could use a whole lot of analytics. Read more about that in the [[wiki metrics, rubrics and collaboration tools]].

It will take me some time to get a clearer picture - [[User:Daniel K. Schneider|Daniel K. Schneider]] 19:41, 15 March 2012 (CET). | |||

=== General purpose tools === | |||

A variety of tools made for other purposes is being used for educational analytics. Some of these have been developed for use in education. Below we list a few. | |||

==== Web analytics tools ==== | |||

Most of these require you to insert a JavaScript snippet in pages. That way a web site can track its users. E.g. in this wiki, we use [[Google analytics]] (where users remain anonymous).

Web analytics tools are used both for [[Search engine optimization]] and for [[Interaction design, user experience and usability]] studies.

Besides Google Analytics, there are many other tools. Most are commercial, but minimal services are often free. E.g. [http://webmaster.live.com/ Bing WebMaster tools], [http://www.searchmetrics.com/en/ Search Metrics], [http://www.semrush.com/ SEMRush], [https://siteexplorer.search.yahoo.com/ Yahoo site explorer], [http://userfly.com/ Userfly], [http://tynt.com/ Tynt], [http://www.kissmetrics.com/ Kissmetrics], [https://mixpanel.com/ Mixpanel]. | |||

==== Social network analysis ==== | |||

See [[social network analysis]] | |||

Two popular ones are: | |||

* [http://research.uow.edu.au/learningnetworks/seeing/snapp/index.html SNAPP], Social Networks Adapting Pedagogical Practice (Dawson, 2009), is {{quotation|a software tool that allows users to visualize the network of interactions resulting from discussion forum posts and replies. The network visualisations of forum interactions provide an opportunity for teachers to rapidly identify patterns of user behaviour – at any stage of course progression. SNAPP has been developed to extract all user interactions from various commercial and open source learning management systems (LMS) such as BlackBoard (including the former WebCT), and Moodle.}}, retrieved 14:06, 2 March 2012 (CET). SNAPP is implemented as a JavaScript bookmarklet that you can just drag onto a browser's toolbar. It works with Firefox, Chrome and Internet Explorer; for the visualization to work, you need Java installed. In Moodle (tested), this tool can visualize forum threads that are displayed hierarchically. Data can also be exported for further analysis.

* [http://pajek.imfm.si/doku.php Pajek] is a Windows program for the analysis and visualization of large networks.

==== Discussion/forum analysis tools ==== | |||

* The ACODEA Framework (Automatic Classification of Online Discussions with Extracted Attributes) by Mu et al. (2012). This is not a tool, but a configuration and use scenario for the SIDE tool (Mayfield and Rosé, 2010a). | |||

==== Awareness tools ==== | |||

So-called [[awareness tool]]s provide real-time analytics. According to Dourish and Bellotti (1992), awareness is an understanding of the activities of others, which provides a context for your own activity. Gutwin and Greenberg (1996:209) define eleven elements for workspace awareness, for example: presence (who is participating in the activity?), location (where are they working?), activity level (how active are they in the workspace?), actions (what are they doing? what are their current activities and tasks?), and changes (what changes are they making, and where?). Note that "where" can refer both to a real and virtual location (e.g. a living room or a forum in a learning platform).

Real-time awareness tools are popular in [[CSCL]] systems, but mostly absent from various forms of virtual learning environments, such as [[LMS]]s, [[content management system]]s and [[wiki]]s.

==== Text analytics (heavy data mining) ==== | |||

* TADA-Ed (Tool for Advanced Data Analysis in Education) combines different data visualization and mining methods in order to assist educators in detecting pedagogically important patterns in students’ assignments (Merceron & Yacef, 2005). We don't know if this project is alive. The [http://chai.it.usyd.edu.au/Projects#data-mining CHAI team] at the University of Sydney does have other more recent educational data mining projects. - [[User:Daniel K. Schneider|Daniel K. Schneider]] 19:41, 15 March 2012 (CET) | |||

* [http://lightsidelabs.com/ LightSide] will be an online service for automated revision assistance and classroom support. It is also available as free software that can be downloaded, but that you must train yourself.

See also:

* [[Wiki metrics, rubrics and collaboration tools]] (wiki-specific tools are indexed there) | |||

* [[latent semantic analysis and indexing]] (LSA/LSI) | |||

* [[Content analysis]] (General purpose text analysis tools are indexed here) | |||

==== web scraping, visualization and app building software ==== | |||

Various general purpose tools could be used, such as:


* [http://www.hunch.com/ Hunch]

* [http://www-958.ibm.com/software/data/cognos/manyeyes/ Many Eyes]

* [http://needlebase.com/ Needlebase]. See the [http://www.readwriteweb.com/archives/awesome_diy_data_tool_needlebase_now_available_to.php review on Read/Write Web]

* [http://www.google.com/trends/ Google trends]

* [http://realtime.springer.com/ Realtime] (Springer)

* [http://www.darwineco.com/ Darwin Awareness Engine]

* [http://www.sensemaker-suite.com/ SenseMaker]

* [http://pipes.yahoo.com/pipes/ Yahoo pipes] | |||

* [http://www.involver.com/ Involver]. This is a toolbox that includes: Conversation Suite to monitor the conversation across Facebook, Twitter and Google+; Application Suite to deploy apps; Visual Builder and Social Markup Language (SML™) for designing pages and social apps. | |||

* [http://rapid-i.com/ Rapid Miner] is the most popular open source data mining tool. It can import data from many sources (and also perform web scraping), analyze with over 500 operators and visualize, etc.

** [http://www.rcomm2013.org/ RapidMiner Community Meeting And Conference] (RCOMM 2013) in Porto between August 27 and 30, 2013 | |||

These tools are combinations of page scraping (content extraction), text summarization (and comparison), sorting, and visualization. Their scope and ease of use seem to differ a lot.

See also:

* [[social bookmarking]], [[reference manager]] and [[Citation index]] services. These also provide quite a lot of data on what authors and other users produce and organize.

==== Data visualization software ====

Some online tools:


* [http://nodexl.codeplex.com/ NodeXL]

Software:

* [http://www.opendx.org/ OpenDX] (not updated since 2007) | |||

* [http://sites.google.com/site/netdrawsoftware/ NetDraw] a Windows program for visualizing social network data. (Borgatti, 2002). | |||

See also: [[Visualization]] and [[social network analysis]] | |||

==== Harvesting data from badges systems ==== | |||

[[Educational badges]] systems that are managed through some kind of server technology can be used to harvest information about learner achievements, paths, skills, etc. | |||

For example, {{quotation|Mozilla's Open Badges project is working to solve that problem, making it easy for any organization or learning community to issue, earn and display badges across the web. The result: recognizing 21st century skills, unlocking career and educational opportunities, and helping learners everywhere level up in their life and work.}} ([http://www.openbadges.org/en-US/about.html About], retrieved 15:37, 14 March 2012 (CET)).

See also: [[gamification]] | |||

==== Other tracking tools ==== | |||

* Most research systems built in educational technology have built-in tracking technology, see for example the [[CoFFEE]] system

* [[e-science]] environments have access to a wide range of data. I don't know if any of these could be of use for learning analytics (except if students participate in an e-science project) | |||

* Some [[citizen science]] projects do have at least some light-weight analytics with respect to user participation | |||

* We have neither the time nor the resources to list tools developed for other domains (business analytics in particular). An interesting non-business initiative is [http://www.unglobalpulse.org/ Global pulse], a UN project. Part of Global Pulse’s approach is learning how to distill information from data so we can identify insights that can be useful in program planning and, in the long run, can contribute to shaping policy. Tools developed include for example "Twitter and perceptions of crisis-related stress" and "impact of financial crisis on primary schools".

=== Tracking in e-learning platforms === | |||

By default, most so-called [[learning management system]]s have built-in tracking tools. In addition, there may be extra tools, either developed for research or available as additional modules in commercial systems. Such tools may help to improve traditional [[e-learning]] designs. | |||

==== LOCO Analyst ==== | |||

[http://jelenajovanovic.net/LOCO-Analyst LOCO-Analyst] {{quotation|is implemented as an extension of [[Reload Editor|Reload Content Packaging Editor]], an open-source tool for creating courses compliant with the IMS Content Packaging (IMS CP) specification. By extending this tool with the functionalities of LOCO-Analyst, we have ensured that teachers effectively use the same tool for creating learning objects, receiving and viewing automatically generated feedback about their use, and modifying the learning objects accordingly.}} ([http://jelenajovanovic.net/LOCO-Analyst/loco-analyst.html LOCO-Analyst], retrieved 14:06, 2 March 2012 (CET)). | |||

As of March 2012, the system works with [http://ihelp.usask.ca/ iHelp Courses], an open-source standards-compliant LCMS developed at the University of Saskatchewan.

Features: | |||

* In LOCO-Analyst, {{quotation|feedback about individual student was divided into four tab panels: Forums, Chats, Learning, and Annotations [...]. The Forums and Chats panels show student’s online interactions with other students during the learning process. The Learning panel presents information on student’s interaction with learning content. Finally, the Annotations panel provides feedback associated with the annotations (notes, comments, tags) created or used by a student during the learning process.}} (Ali et al. 2011). For each of the four "areas" key data can then be consulted.

* {{quotation|The generation of feedback in LOCO-Analyst is based on analysis of the user tracking data. These analyses are based on the notion of Learning Object Context (LOC) which is about a student (or a group of students) interacting with a learning content by performing certain activity (e.g. reading, quizzing, chatting) with a particular purpose in mind. The purpose of LOC is to facilitate abstraction of relevant concepts from user-tracking data of various e-learning systems and tools.}} ([http://jelenajovanovic.net/LOCO-Analyst/loco-analyst.html LOCO-Analyst], retrieved 14:06, 16 March 2012). | |||

* LOCO Analyst is implemented through a set of formal [[RDF]] ontologies, i.e. the [http://jelenajovanovic.net/LOCO-Analyst/loco.html LOCO (Learning Object Context Ontologies) ontological framework] | |||

* [http://semwebcentral.org/scm/viewvc.php/?root=locoanalyst download] | |||

==== Virtual location-based meta-activity analytics ==== | |||

Jaillet (2005) presents a model with three indicators for measuring learning in the Acolad distance teaching platform. These indices are based on Leontiev's activity theory, which distinguishes three levels: ''Activities'' are high level and they are goal and needs driven. Activities are conducted using strategies and by performing ''actions'' using tools (instruments). Actions are performed using ''operations''. In the context of education, both actions and operations can be either individual or collaborative (social).

The study measures student activity (called meta-activity) through three dimensions: '''attendance''', '''availability''' and '''involvement'''. All indicators are measured with respect to the most active student.

* Attendance: Number of connections (% of max.) | |||

* Availability: Duration of connection (% of max.) | |||

* Involvement: Number of actions performed (% of max., e.g. upload a document, go to virtual place, participate in group discussion) | |||

Example: | |||

:<sub>653</sub>(61;84;42)<sub>2629</sub><sup>480</sup> means that a student connected 61% of 653 times (relative to the student with the highest number of connections), for 84% of 480 hours, and that he completed 42% of 2692 operations (max.).
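The three percentages can be computed as in the following minimal sketch. It only illustrates the "% of max." principle described above; the field names and the sample figures are invented and do not come from Jaillet's data.

```python
def meta_activity(students):
    """Express each student's raw counts as a percentage of the class maximum.

    `students` maps a name to a dict with the (hypothetical) keys
    "connections", "hours" and "actions". Returns, per student, a tuple
    (attendance, availability, involvement) of rounded percentages.
    """
    keys = ("connections", "hours", "actions")
    maxima = {key: max(s[key] for s in students.values()) for key in keys}
    result = {}
    for name, values in students.items():
        result[name] = tuple(
            round(100 * values[key] / maxima[key]) if maxima[key] else 0
            for key in keys
        )
    return result


# Invented raw tracking data for two students.
students = {
    "ann": {"connections": 100, "hours": 50, "actions": 400},
    "bob": {"connections": 61, "hours": 42, "actions": 168},
}
indicators = meta_activity(students)
print(indicators["bob"])
```

Since every indicator is relative to the most active student, a single very active participant lowers everybody else's scores; the values are therefore only comparable within one group.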

It is not very clear from the paper whether the data leading to "meta-activity" indicators are supposed to measure actions or operations. The study also includes another interesting idea.

Since Acolad uses a 2D spatial metaphor, i.e. 3D drawings, for various learning activities, one can guess from the student's location what they are working on. There is evidence from research in CSCW that people do tend to relocate in virtual environments. In other words, learning analytics should provide better data from environments that associate learning activities with a space.

==== Blackboard Analytics for Learn ====

* The [http://www.blackboard.com/Platforms/Analytics/Products/Blackboard-Analytics-for-Learn.aspx Blackboard Analytics for Learn™ module] is advertized as {{quotation|easy, self-service access to data that can help give you an enterprise-level perspective. Gain insight into user activity, course design and student performance data across departments and colleges — enabling you to improve your use of the Blackboard Learn™ platform in support of teaching, learning, and student success.}} (retrieved April 5, 2012). | |||

According to the same source, one could get answers to questions like: | |||

* How are my students performing on learning standards over time? | |||

* How can I easily find students who aren’t engaged in their online courses? | |||

* Which tools are being used in courses the most? The least? | |||

* How many logins, page views, and other metrics have occurred over time? | |||

* Who are the most active instructors? Who are the least active? | |||

* What strategies to improve the quality of course design and instruction result in better student performance? Instructor training courses? Course reviews? | |||

==== Moodle analytics with MocLog ==== | |||

* [http://moclog.ch/project/ MOCLog – Monitoring Online Courses with Logfiles] (Project page) | |||

Quote: Teachers, students, study program managers and administrators need feedback about the status of the activities in online-courses. MOCLog will realize a monitoring system, that helps to analyze logfiles of the Moodle LMS more effectively and efficiently thus contributing to improve the quality of teaching and learning. | |||

==== List of other tools to explore ==== | |||

* [http://www.itap.purdue.edu/learning/tools/signals/ Purdue Course Signals] combines predictive modeling with data-mining from Blackboard Vista. This software [http://www.sungardhe.com/news.aspx?id=859&terms=signals has been bought by SunGard] (now [http://www.ellucian.com/ Ellucian]), a supplier of campus software. {{quotation|Course Signals’ advisor view helps your institution’s professional and faculty advisors have a better window into how a student is performing in their current courses, making it easy for them to identify and reach out to students who are struggling in multiple courses, have risk factors such as low overall GPAs, or are first-generation status or transfer students. A real-time view of this data means advisors can act more immediately to help students before they abandon a program of study, or worse, leave school discouraged and unprepared for their futures}} ([http://www.sungardhe.com/WorkArea/DownloadAsset.aspx?id=1036 Redefining “early” to support student success], retrieved April 10 2012). See also its [http://www.sungardhe.com/Solutions/PowerCAMPUS-Enterprise-Reporting---Analytics/ PowerCAMPUS Analytics] and [http://www.datatel.com/products/products_a-z/colleague-erp.cfm Datatel Colleague]

* [http://www.educause.edu/EDUCAUSE+Quarterly/EDUCAUSEQuarterlyMagazineVolum/VideoDemoofUMBCsCheckMyActivit/219113 Video Demo of UMBC’s “Check My Activity” Tool for Students], also based on Blackboard data | |||

* [http://wcet.wiche.edu/advance/par-framework WCET Predictive Analytics Reporting (PAR) Framework]. Aim: to conduct large-scale analyses of federated data sets within postsecondary institutions to better inform loss prevention and identify drivers related to student progression and completion. This project (as of April 2012) seems to be in the planning stage.

* '''Teacher ADVisor (TADV)''': uses LCMS tracking data to extract student, group, or class models (Kobsa, Dimitrova, & Boyle, 2005). | |||

* '''Student Inspector''': analyzes and visualizes the log data of student activities in online learning environments in order to assist educators to learn more about their students (Zinn & Scheuer, 2007). | |||

* '''CourseViz''': extracts information from WebCT. | |||

* '''Learner Interaction Monitoring System (LIMS)''': (Macfadyen & Sorenson, 2010) | |||

* [http://projects.oscelot.org/gf/project/astro/ ASTRO] and [http://projects.oscelot.org/gf/project/bbstats/ BBStats], some kind of Blackboard plugins (?) that provide a dashboard. | |||

=== Classroom tools === | |||

Tools like [http://mymetryx.com/ Metryx] (working with any mobile device) allow teachers to define a skill set and then enter student data in order to "track, analyze and differentiate" students.

=== General purpose educational tracking tools === | |||

Some institutions have implemented or will implement tracking tools that pull in data from several sources. Some tools are designed for both teachers and learners, others are in the realm of '''academic analytics''' (as opposed to learning analytics).

==== UWS Tracking and Improvement System for Learning and Teaching (TILT) ==== | |||

The University of Western Sydney implemented a system with the following uses in mind ([http://www.uws.edu.au/__data/assets/pdf_file/0013/7411/UWS_TILT_system.pdf Scott], 2010). | |||

# To prove quality – e.g. data can be drawn from different quantitative and qualitative data bases and, after triangulation, can be used to prove the quality of what UWS is doing. Data analysis can use absolute criteria and/or relative (benchmarked) standards. | |||

# To improve quality – e.g. data can be used to identify key improvement priorities at the university, college, division, course and unit levels. | |||

This framework uses about ten data sources, mostly surveys (student satisfaction, after graduation) and performance reports. Interestingly, it includes time series, e.g. student satisfaction during, at exit and after obtaining a degree.

==== Course quality assurance at QUT ==== | |||

Towers (2010) describes the annual [http://www.ltu.qut.edu.au/curriculum/coursequalit/ Courses Performance Report] of Queensland University of Technology (QUT) as a combination of ''Individual Course Reports'' (ICRs), the ''Consolidated Courses Performance Report'' (CCPR), ''Underperforming Courses Status Update'' and the ''Strategic Faculty Courses Update'' (SFCU). {{quotation|Individual Course Reports (ICRs) is to prompt an annual health check of each course’s performance, drawing upon course viability, quality of learning environment, and learning outcomes data. In total, data on 16 indicators are included in the ICRs.}} (Towers, 2010: 119). The [http://eprints.qut.edu.au/33193/ paper] includes an example of an 8-page report in the appendix.

=== Tools for power teaching === | |||

Courses that aim at deep learning, e.g. applicable knowledge and/or higher order knowledge, are usually taught in smaller classes and most often use software that is distinct from the software used in so-called [[e-learning]], e.g. [[wiki]]s, [[learning e-portfolio]]s, [[content management system]]s, [[blog]]s, [[webtop]]s and combinations of these. In addition, some systems developed in [[educational technology]] research are being used. The latter often implement analytics for research, but often there is a teacher interface using the same data. Most creative power teaching, however, uses what we would call "street technology", and for these, analytics tools rarely exist. A good example would be the ''Enquiry Blog Builder'' that we briefly describe below. Another example is [[StatMediaWiki]], a tool that we use to learn about student contributions in the French version of this wiki. Most analytics tools we have found so far require some learner participation like tagging or filling in shorter or longer questionnaires.

Since [[project-oriented learning]] and related [[constructivism|constructivist]] designs rarely include "pages to view" and quizzes, simple quantitative data do not provide good enough analytics, although some quantitative data such as "number of edits", "number of connections", etc. are useful. Some teachers, in particular in the engineering sciences, also use [[project management software]] that by definition includes analytics. However, these are less suited for [[writing-to-learn|writing]], [[collaborative learning| collaboration]] and creating [[community of learning|communities of learning]] than typical out-of-the-box multi-purpose [[portalware]].

The main issue of what we would call "small scale analytics" is not how to measure learning, but how to improve learning scenarios through tools that help both the learner and the teacher understand "what is going on" and therefore improve [[reflection]]. | |||

==== Analytics for e-portfolios ==== | |||

Oliver and Whelan (2010) point out that {{quotation|Many agree that current available data indicators - such as grades and completion rates - do not provide useable evidence in terms of learning achievements and employability (Goldstein, 2005; Norris, Baer et al. 2008)}} and they argue that {{quotation|qualitative evidence of learning is traditionally housed in student portfolio systems}}. Such systems should then include mechanisms for self-management of learning that could feed into institutional learning analytics. At Curtin, to encourage students to reflect on and assess their own achievement of learning, the iPortfolio incorporates a [https://iportfolio.curtin.edu.au/help/my_ratings.cfm?popup=1 self-rating tool] based on [http://otl.curtin.edu.au/teaching_learning/attributes.cfm Curtin's graduate attributes], {{quotation|enabling the owner to collate evidence and reflections and assign themselves an overall star-rating based on Dreyfus and Dreyfus' Five-Stage Model of Adult Skill Acquisition (Dreyfus, 2004). The dynamic and aggregated results are available to the user: [as shown in the figure], the student can see a radar graph showing their self-rating in comparison with the aggregated ratings of their invited assessors (these could include peers, mentors, industry contacts, and so on).}} (Oliver & Whelan, 2010).

This example, as well as the tagging system of De Liddo et al. (2011) discussed above, demonstrates a very simple principle that one could implement in any other environment: (also) ask the learner.

==== Student Activity Monitor and Cam dashboard for PLEs ==== | |||

The [http://www.role-showcase.eu/role-tool/student-activity-monitor SAM] (Student Activity Monitor) was developed in the context of the [http://www.role-project.eu/ EU ROLE] project. {{quotation|To increase self-reflection and awareness among students and for teachers, we developed a tool that allows analysis of student activities with different visualizations}}. Although SAM was developed in a project that focused on developing a kit for constructing [[personal learning environment]]s, it can be used in any other context where the learning process is largely driven by rather autonomous learning activities. SAM is implemented as a Flex application and is documented in the SourceForge ROLE project wiki as [http://sourceforge.net/apps/mediawiki/role-project/index.php?title=Widget_User_Guide:Student_Activity_Meter Widget Student Activity Meter]. It relies on a widget that users must use: the [http://sourceforge.net/apps/mediawiki/role-project/index.php?title=Widget_User_Guide:CAM_Widget Widget CAM Widget] that enables/disables user monitoring. Enabling monitoring provides learners with benefits regarding recommendation and self-reflection mechanisms.

Its four purposes are (Govaerts et al., 2010): | |||

* Self-monitoring for learners | |||

* Awareness for teachers | |||

* Time tracking | |||

* Learning resource recommendation | |||

The [http://sourceforge.net/apps/mediawiki/role-project/index.php?title=Tool_User_Guide:Cam_dashboard Cam dashboard] is an application that visualizes usage data as a basis for detecting changes in usage patterns. Its purpose is to detect variations in the use of PLEs based on changes in how widgets and services are used.

Links: | |||

* [http://www.role-project.eu/ ROLE project page] | |||

* [http://www.role-showcase.eu/ ROLE Showcase Platform] | |||

* [http://www.role-project.eu/Showcases Showcases] (see also next item) | |||

* [http://www.role-widgetstore.eu/ ROLE Widget Store] | |||

Download: | |||

* So far we didn't find any .... - [[User:Daniel K. Schneider|Daniel K. Schneider]] 19:34, 11 April 2012 (CEST) (but maybe we will have to spend some more time searching or download the software kit). | |||

==== The EnquiryBlogger ====

[http://wordpress.org/extend/plugins/eb-enquiryblogbuilder/ Enquiry Blog Builder] provides a series of plugins for the very popular WordPress [[blog]]gingware (Ferguson, R., Buckingham Shum, S. and Deakin Crick, R., 2011). As of April 2012, the list includes:

{{quotationbox| | |||

* '''MoodView''' - This displays a line graph plotting the mood of the student as their enquiry progresses. The widget displays the past few moods and allows a new one to be selected at any time. Changing moods (a hard coded drop-down list from 'going great' to 'it's a disaster') creates a new blog entry with an optional reason for the mood change. The graph is created using the included Flot JavaScript library. | |||

* '''EnquirySpiral''' - This widget provides a graphical display of the number of posts made in the first nine categories. A spiral of blobs appears over an image with each blob representing a category. The blobs are small and red when no posts have been made. They change to yellow for one or two posts, and green for three or more. In this way it is easy for the student to see how they are progressing, assuming the nine categories are well chosen. | |||

* '''EnquirySpider''' - This widget works in the same way as the EnquirySpiral, except that the blobs are arranged in a star shape. They are associated with seven categories. (from nine to sixteen so they don't conflict with the EnquirySpiral). The categories are intended to match with the Effective Lifelong Learning Inventory diagram. | |||

}} ([http://wordpress.org/extend/plugins/eb-enquiryblogbuilder/ Enquiry Blog Builder], retrieved April 10 2012). | |||
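The colour rule quoted above for the EnquirySpiral (red for no posts, yellow for one or two, green for three or more) can be sketched in a few lines. This is only an illustration of the rule as described, not the plugin's actual code; the function names <code>blob_colour</code> and <code>spiral_status</code> and the example category names are assumptions made for this sketch.

```python
def blob_colour(post_count: int) -> str:
    """Map the number of posts in one category to a blob colour.

    Thresholds follow the plugin description quoted above:
    0 posts -> red, 1-2 posts -> yellow, 3 or more -> green.
    """
    if post_count < 0:
        raise ValueError("post_count must be non-negative")
    if post_count == 0:
        return "red"
    if post_count <= 2:
        return "yellow"
    return "green"


def spiral_status(posts_per_category: dict[str, int]) -> dict[str, str]:
    """Return the blob colour for every category (category names are hypothetical)."""
    return {
        category: blob_colour(count)
        for category, count in posts_per_category.items()
    }


if __name__ == "__main__":
    # Hypothetical enquiry categories and post counts for one student.
    print(spiral_status({"Choosing": 0, "Observing": 2, "Questioning": 5}))
```

A teacher view could apply the same function to every student blog and thereby obtain the kind of overview that the dashboard provides.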

The kit also includes ''BlogBuilder'', which allows batch creation of blogs from a provided list of teacher and student names. Teachers who log in will then see a '''dashboard''' showing the progress of the students assigned to them. In other words, the teacher gets monitoring widgets that correspond to the three widgets above and that display the student widgets.

==== Learner disposition ==== | |||

[http://oro.open.ac.uk/32823/1/SBS-RDC-LAK12-ORO.pdf Buckingham Shum and Deakin Crick (2012)] describe a setup that collects learning dispositions through a questionnaire. Results then are shown to the students and teachers. {{quotation|The inventory is a self-report web questionnaire comprising 72 items in the schools version and 75 in the adult version. It measures what learners say about themselves in a particular domain, at a particular point in time}} (Preprint: page 4). | |||

The seven measured dimensions are: | |||

* Changing and Learning

* Critical curiosity

* Meaning Making | |||

* Dependence and Fragility | |||

* Creativity | |||

* Learning Relationships | |||

* Strategic Awareness | |||

Students are shown a spider diagram of these dimensions. Since ELLI (the Effective Lifelong Learning Inventory) can be applied repeatedly, a diagram can also display change, i.e. (hopefully) an increase. Furthermore, data can be aggregated across groups of learners in order to provide collective profiles for all dimensions or details about a specific dimension.
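The aggregation and change display described above can be sketched as follows. This is a minimal illustration under stated assumptions: each learner profile is taken to be a mapping from dimension name to a numeric score, the 0-100 scale is hypothetical, and the function names <code>collective_profile</code> and <code>profile_change</code> are invented here. It does not reproduce how ELLI itself computes or scales its scores.

```python
from statistics import mean


def collective_profile(profiles: list[dict[str, float]]) -> dict[str, float]:
    """Average every dimension over all learners who have a score for it."""
    dimensions = sorted({dim for profile in profiles for dim in profile})
    return {
        dim: mean(p[dim] for p in profiles if dim in p)
        for dim in dimensions
    }


def profile_change(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Difference per dimension between two applications of the questionnaire."""
    return {dim: after[dim] - before[dim] for dim in before if dim in after}


if __name__ == "__main__":
    # Hypothetical scores (assumed 0-100 scale) for two learners.
    group = [
        {"Creativity": 40.0, "Strategic Awareness": 60.0},
        {"Creativity": 60.0, "Strategic Awareness": 80.0},
    ]
    print(collective_profile(group))
    print(profile_change(group[0], {"Creativity": 55.0, "Strategic Awareness": 60.0}))
```

The resulting dictionaries could be fed to any charting tool that draws radar (spider) diagrams.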

==== Other writing tools and cognitive tools with built-in Analytics ==== | |||

There are many [[writing-to-learn]] environments, other [[cognitive tool]]s, [[knowledge-building community model|knowledge building environment]]s, etc. that allow users to co-create knowledge and that include some information about "what is going on". Some are developed for education, others for various forms of knowledge communities, such as [[community of practice|communities of practice]].

Examples: | |||

* [http://cohere.open.ac.uk/ Cohere] from the Open University is a visual tool to create, connect and share ideas. E.g. De Liddo et al. (2011) analyse ''quality of contribution to discourse'' as an indicator for meaningful learning.

* [[Knowledge forum]] and [[Computer Supported Intentional Learning Environment|CSILE]] | |||

* [[Babble]] | |||

* [[Collaborative Face to Face Educational Environment]] (CoFFEE) | |||

* [[Wiki]]s, see the [[Wiki metrics, rubrics and collaboration tools]] article | |||

* [[concept map]] tools and other [[idea manager]]s. Some of these run as services. | |||

== Discussion == | |||

=== Small vs. big analytics === | |||

Small analytics (individual learners, teachers and the class) are not new, although we hope that more tools that help us understand what is going on will become available in the future. Such tools are mostly lacking for environments that engage students in rich activities and productions (as opposed to so-called [[e-learning]] that uses [[learning management system]]s). In [[project-oriented learning]] designs, we should also think about building tools into the scenario that let the students add data, e.g. "what did I contribute" and "why".

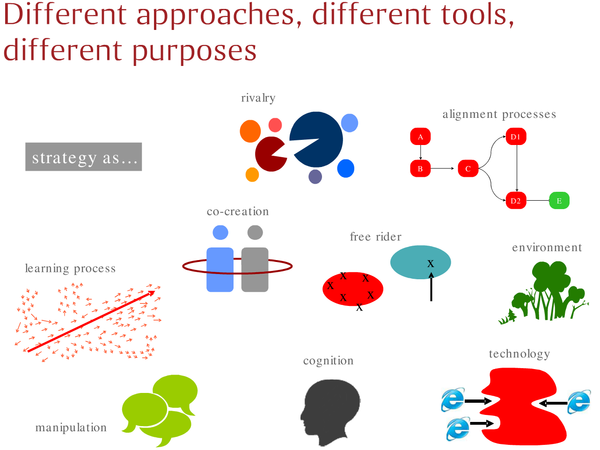

Big-time analytics requires careful thinking about strategy, i.e. about ways of improving education. In particular, we believe that one should not automatically focus on alignment, as in a [http://en.wikipedia.org/wiki/Balanced_scorecard balanced scorecard] method, but rather adopt a "learning organization" perspective, e.g. some kind of [[expansive learning]] philosophy. Below is a picture from a [http://www.slideshare.net/arvetica/strategy-grand-tour talk] by Stefano Mastrogiacomo that illustrates various ways of thinking.

[[image:stefano-mastrogiacomo-strategies.png|thumb|600px|none|Catalogue of strategies (© S. Mastrogiacomo)]]. | |||

Of course, discussion about the aims of learning analytics already takes place, in particular about ethical issues (refs?). However, we suggest focusing on the idea that [[Change management|change]] should be related to learner and teacher [[empowerment]], as opposed to strengthening the already sometimes counterproductive education bureaucracy.

=== A list of questions === | |||

Items to expand (also should merge some stuff above) | |||

* Learning analytics is an old topic in educational technology. Some [[CSCL]] research was already mentioned. The much older field of [[artificial intelligence and education]] always had some kind of analytics built in for both the learner and the teacher. Adaptive systems have been produced by the dozen and failed to interest teachers. An educationally more light-weight variant of tutoring systems are so-called recommender systems. These may become more popular, although attention must be paid to not attempting to lock students into a box. E.g. one could imagine adding 20% of "noise" (i.e. recommendations that are rather far as opposed to close). This is similar to the way genetic algorithms use random mutation to keep exploring.

* New in learning analytics are the ability to track students across environments (e.g. all social services) and the interest in using analytics as an evaluation tool at institution level and above. This leads to [[privacy]] issues, but not only to those. Analytics can have intrinsic adverse effects. See the next item.

* Analytics in other fields often had an adverse effect. In public policy, monitoring and reporting tools had the effect of inflating procedures, killing initiative, removing communication, etc. Analytics can (but need not) lead to absolute disasters in terms of both cost and effectiveness. Reliance on key indicators and reporting can bring out the dark side of management: {{quotation|toxic leadership, managerial worst practices, project failures and suffering at work.}} ([http://stefanomastrogiacomo.com/ Stefano Mastrogiacomo]) and increase the power of bureaucracy at the expense of individual creativity and communication. In education that could mean: Teachers will have to use mind-crippling e-learning platforms (else the organization can't collect data), participants will learn how to produce data for the system (as opposed to producing meaning) and participants will talk less to each other (since time has to be spent on collecting and archiving data).

* Lack of tools. Using data mining and visualization tools requires months of training (at least).

* Why don't teachers '''ask''' learners for more information (self-reporting)? Many tools allow students to tell what they did and, in addition, to reflect upon it, and teachers can require this. For example, [[LAMS]] activities can be configured with a (mandatory) notebook to be filled in at the end. Wikis such as this one include personal home pages where students can add links to their contributions. Actually, the tools we found for project-oriented designs such as inquiry learning or portfolio construction do this.

* Are there analytics that can distinguish between the development of routine expertise and the development of adaptive expertise (new schemas that allow learners to respond to new situations)? (Alexander 2003, cited by Werner et al. 2013).
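The idea mentioned in the first item above, i.e. mixing about 20% of "far" items into a list of recommendations, can be sketched as follows. This is a minimal illustration and not a description of any existing recommender system: it assumes that items are already ranked from closest to farthest by some other mechanism, and it simply draws the "noise" at random from the lower-ranked rest. The function name <code>recommend_with_noise</code> and its parameters are hypothetical.

```python
import random


def recommend_with_noise(ranked_items, k, noise_share=0.2, rng=None):
    """Return k recommendations: mostly top-ranked items plus some "far" ones.

    ranked_items: list ordered from closest to farthest match (assumed given).
    noise_share:  fraction of the k slots filled with randomly chosen
                  lower-ranked items (0.2 corresponds to the 20% in the text).
    rng:          optional random.Random instance, useful for reproducibility.
    """
    rng = rng or random.Random()
    k = min(k, len(ranked_items))
    n_noise = round(k * noise_share)
    n_close = k - n_noise
    close = ranked_items[:n_close]
    remaining = ranked_items[n_close:]
    # Sample without replacement so that no item is recommended twice.
    far = rng.sample(remaining, min(n_noise, len(remaining)))
    return close + far


if __name__ == "__main__":
    # Hypothetical resource identifiers, best match first.
    resources = ["r1", "r2", "r3", "r4", "r5", "r6", "r7", "r8", "r9", "r10"]
    print(recommend_with_noise(resources, k=5))
```

Drawing the noise uniformly is the simplest choice. A real system would probably weight the sampling, e.g. by how different a resource is from what the learner has already seen.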

== Links ==

=== Literature === | |||

'''Overviews and bibliographies'''

* [https://tekri.athabascau.ca/analytics/ 1st International Conference on learning analytics] (includes a short introduction) | |||

* [http://en.wikipedia.org/wiki/Learning_analytics Learning analytics - Wikipedia, the free encyclopedia]

* [https://docs.google.com/document/d/1f1SScDIYJciTjziz8FHFWJD0LC9MtntHM_Cya4uoZZw/edit?hl=en&authkey=CO7b0ooK&pli=1 Learning and Knowledge Analytics Bibliography] by George Siemens (et al?).

'''Journals''' | |||

* [http://www.educationaldatamining.org/JEDM/ JEDM] - Journal of Educational Data Mining | |||

=== Organizations and communities === | |||

'''Organizations and conferences'''