Learning process analytics

== Requirements for learning scenario and learning process analytics ==

A first version of this article was submitted to EdMedia 2012 and accepted. '''This''' wiki version includes pictures and will evolve over time.

The original conference paper is available on [http://www.editlib.org/p/40963 EditLib at http://www.editlib.org/p/40963] as Schneider, D., Class, B., Benetos, K. & Lange, M. (2012). ''Requirements for learning scenario and learning process analytics''. In T. Amiel & B. Wilson (Eds.), Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2012 (pp. 1632-1641). Chesapeake, VA: AACE.

'''Authors'''

Daniel K. Schneider

[...]

: Rich Internet Application Developer, New Zealand

'''Abstract:'''

This contribution attempts to define what kind of learning analytics both learners and teachers should have in various forms of project-oriented learning designs in order to enhance the learning and teaching process within learning scenarios.

'''Keywords:'''

[[learning analytics]], [[constructivism]], [[CSCL]], [[project-based learning]], [[problem-based learning]], [[inquiry-based learning|inquiry learning]], [[writing-to-learn]], and [[Knowledge-building community model|knowledge community learning]].

==Introduction==

In this discussion paper, we define '''learning process analytics''' as a collection of methods that allow teachers and learners to understand '''what is going on''' in a '''learning scenario''', i.e. what participants work(ed) on, how they interact(ed), what they produce(d), what tools they use(d), in which physical and virtual locations, etc. [[Learning analytics]] is most often aimed at generating predictive models of general student behavior. So-called academic analytics even aims to improve the system. We are trying to solve a somewhat different problem. In this paper we will focus on improving [[Project-oriented learning|project-oriented learner-centered designs]], i.e. a family of educational designs that include any or some of knowledge-building, writing-to-learn, project-based learning, inquiry learning, problem-based learning and so forth.

We will first provide a short literature review of '''learning process analytics''' and related frameworks that can help improve the quality of educational scenarios. We will then describe a few project-oriented educational scenarios that are implemented in various programs at the University of Geneva. These examples illustrate the kind of learning scenarios we have in mind and help define the different types of analytics both learners and teachers need. Finally, we present a provisional list of analytics desiderata divided into “wanted tomorrow” and “nice to have in the future”.

==A short overview of learning scenario and learning process analytics==

From a technical point of view, “learning analytics is the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs” (1st International Conference on Learning Analytics, 2011). We define ''learning scenario and learning process analytics'' as the measurement and collection of learner actions and learner productions, organized to provide feedback to learners, groups of learners and teachers during a teaching/learning situation. This information can be presented in various forms, e.g. a browsable analytics website or a [[educational dashboard|dashboard]], and should engage participants in reflection on their different goals, roles, tasks, productions, and so forth.

This type of analytics has a long tradition in educational technology research, in particular in the subfields of ''[[artificial intelligence and education]]'' (including [[intelligent tutoring system]]s) and ''computer-supported collaborative learning'' ([[CSCL]]). We shall not discuss the former, since we are not interested in using computers in the role of a virtual teacher/expert. In a project-oriented setting, the learner is to provide the intelligence, not the computer. Computers are best used only as tools that assist the learner’s cognition. “[[Cognitive tool]]s [...] are unintelligent tools, relying on the learner to provide the intelligence, not the computer. This means that planning, decision-making, and self-regulation are the responsibility of the learner, not the technology. Cognitive tools can serve as powerful catalysts for facilitating these higher order skills if they are used in ways that promote reflection, discussion, and collaborative problem-solving [...]” (Derry & Lajoie, 1993, p. 5).

=== Analytics in the CSCL and knowledge building tradition === | |||

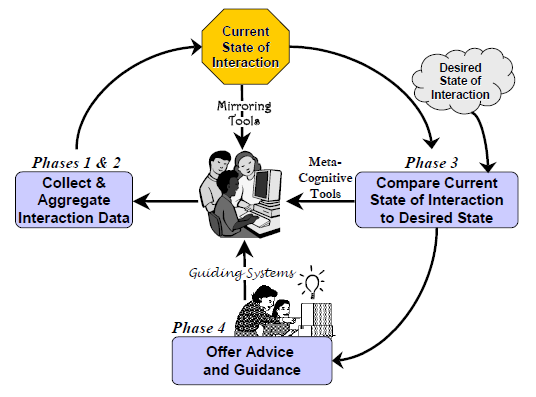

In the field of CSCL, Soller, Martinez, Jermann and Muehlenbrock (2005) developed a ''collaboration management cycle'' framework that distinguishes between ''mirroring systems'', which display basic actions to collaborators, ''metacognitive tools'', which represent the state of interaction via a set of key indicators, and ''coaching systems'', which offer advice based on an interpretation of those indicators. “The framework, or collaboration management cycle, is represented by a feedback loop, in which the [[metacognition|metacognitive]] or behavioral change resulting from each cycle is evaluated in the cycle that follows. Such feedback loops can be organized in hierarchies to describe behavior at different levels of granularity (e.g. operations, actions, and activities). The collaboration management cycle is defined by the four following phases” (Soller et al., 2004:6). | |||

* Phase 1: The data collection phase involves observing and recording the interaction of users. Data are logged and stored for later processing.

* Phase 2: Higher-level variables, termed indicators, are computed to represent the current state of interaction. For example, an agreement indicator might be derived by comparing the problem-solving actions of two or more students, or a symmetry indicator might result from a comparison of participation indicators.

* Phase 3: The current state of interaction can then be compared to a desired model of interaction, i.e. a set of indicator values that describe productive and unproductive interaction states. “For instance, we might want learners to be verbose (i.e. to attain a high value on a verbosity indicator), to interact frequently (i.e. maintain a high value on a reciprocity indicator), and participate equally (i.e. to minimize the value on an asymmetry indicator).” (idem, p. 7).

* Phase 4: Finally, the system might propose remedial actions if there are discrepancies.
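The four phases can be made concrete with a small sketch. It is an illustration, not Soller et al.'s implementation: it assumes a log of (sender, addressee, text) messages, and the indicator formulas (words per message for verbosity, mutual addressing for reciprocity, coefficient of variation of message counts for asymmetry) as well as the thresholds in the hypothetical `DESIRED_MODEL` are our own choices.

```python
from collections import Counter
from statistics import mean, pstdev
from typing import Optional

# Phase 1 (data collection): each logged message is (sender, addressee, text).
# The addressee is None for messages sent to the whole group.
Message = tuple[str, Optional[str], str]


def compute_indicators(log: list[Message], members: list[str]) -> dict[str, float]:
    """Phase 2: derive higher-level indicators from the raw log."""
    counts = Counter(sender for sender, _, _ in log)
    participation = [counts.get(m, 0) for m in members]
    avg = mean(participation)
    # Asymmetry: coefficient of variation of participation (0 = perfectly equal).
    asymmetry = pstdev(participation) / avg if avg else 0.0
    # Verbosity: average number of words per message.
    verbosity = mean(len(text.split()) for _, _, text in log) if log else 0.0
    # Reciprocity: share of directed sender->addressee pairs that were answered back.
    pairs = {(s, a) for s, a, _ in log if a is not None and a != s}
    answered = [p for p in pairs if (p[1], p[0]) in pairs]
    reciprocity = len(answered) / len(pairs) if pairs else 0.0
    return {"asymmetry": asymmetry, "verbosity": verbosity, "reciprocity": reciprocity}


# Phase 3: a desired model of interaction, expressed as hypothetical thresholds.
DESIRED_MODEL = {
    "verbosity": ("min", 5.0),
    "reciprocity": ("min", 0.5),
    "asymmetry": ("max", 0.5),
}


def diagnose(indicators: dict[str, float], model=DESIRED_MODEL) -> list[str]:
    """Phases 3 and 4: compare with the model and list discrepancies as advice."""
    advice = []
    for name, (kind, limit) in model.items():
        value = indicators[name]
        if (kind == "min" and value < limit) or (kind == "max" and value > limit):
            advice.append(f"{name} is {value:.2f}, desired {kind} {limit}")
    return advice


if __name__ == "__main__":
    log = [
        ("alice", "bob", "I think we should model the memory load first"),
        ("bob", "alice", "Agreed"),
        ("alice", None, "Here is a draft of the process diagram for the group"),
    ]
    indicators = compute_indicators(log, ["alice", "bob", "carol"])
    print(indicators)
    print(diagnose(indicators))  # carol never posts, so only asymmetry is flagged
```

A mirroring system would stop after phase 2 and simply display the indicators; a coaching system would turn the output of `diagnose` into advice for the group.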

[[image:soller-et-al-collaboration-management-cycle.png|thumb|600px|none|The Collaboration Management Cycle, by A. Soller, A. Martinez, P. Jermann & M. Muehlenbrock (2004). [http://www.cscl-research.com/Dr/ITS2004Workshop/proceedings.pdf ITS 2004 Workshop on Computational Models of Collaborative Learning]]] | |||

Soller et al. (2005:) add a phase 5 that is outside of the cycle: “After exiting Phase 4, but before re-entering Phase 1 of the following collaboration management cycle, we pass through the evaluation phase. Here, we reconsider the question, ‘What is the final objective?’ and assess how well we have met our goals”. In other words, the behaviors in the "system" are analyzed as a whole. | Soller et al. (2005:) add a phase 5 that is outside of the cycle: “After exiting Phase 4, but before re-entering Phase 1 of the following collaboration management cycle, we pass through the evaluation phase. Here, we reconsider the question, ‘What is the final objective?’ and assess how well we have met our goals”. In other words, the behaviors in the "system" are analyzed as a whole. | ||

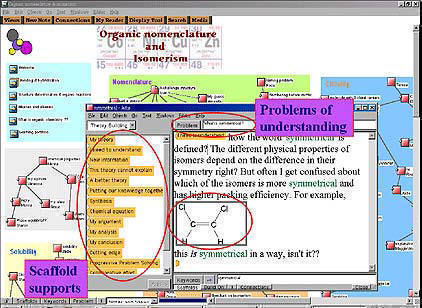

Another school of thought in CSCL concerns knowledge-building communities. “Sustaining knowledge-building communities online requires the creation of electronic environments that support both formal and informal learning, and capture significant tasks and activities that are central to the day-to-day work of the participants. These environments must provide supports for real world activities and learning, while providing the potential for something more. That something more is knowledge building, or the production and continual improvement of ideas of value to a community” (Scardamalia & Bereiter, 2003). This knowledge-building environment model relies on so-called Computer Supported Intentional Learning Environments. Its commercial version, [[Knowledge Forum]], is an “electronic group workspace designed to support the process of knowledge building, [i.e.] any number of individuals and groups can share information, launch collaborative investigations, and build networks of new ideas…together,” (Knowledge Forum, 2012). The technology can be described as a concept map, hypertext and forum system hybrid that can display information from multiple vantage points and entry points. In other words, there are no special analytics, but its networked architecture and various display options allow users to understand “connections” between contents, between contents and users, and between users themselves.

[[image:knowledge-forum-vdc.jpg|frame|none|Knowledge Forum. Source: [http://vdc.cet.edu/entries/lee.htm Virtual Design Center] ]]

While CSCL research produces many interesting approaches and systems, these are rarely used in practice since they require advanced knowledge of collaborative learning and a mastery of the technology used. In addition, orchestrating CSCL scenarios is very time consuming.

=== Analytics for learning management systems ===

Most [[LMS]]s do include some features for tracking student behavior. For a teacher or a student, this information can be useful to monitor whether participation requirements are met. However, these tools are often fairly cumbersome, and one could argue that LMSs are not really designed for data mining, analysis and visualization. {{quotation| If tracking data is provided in a VLE, it is often incomprehensible, poorly organized, and difficult to follow, because of its tabular format. As a result, only skilled and technically savvy users can utilize it (Mazza & Dimitrova, 2007). But even for them it might be too time consuming.}} (Dyckhoff et al., 2012: 59).

However, various add-ons (either free or commercial) exist for some popular systems, e.g. [http://moclog.ch/project/ MOCLog] for Moodle or [http://www.blackboard.com/Platforms/Analytics/Products/Blackboard-Analytics-for-Learn.aspx Blackboard Analytics]. In both cases, learning analytics will only tell that students consume some resource or activity and how they perform with respect to manually or automatically graded activities. In other words, these analytics are not designed to support active pedagogies; however, they can nicely enhance traditional e-learning if the [[user experience]] (usability and usefulness) is good. Typically, a good learning analytics (LA) system should include easy-to-use and informative teacher and student [[educational dashboard|dashboard]]s. Both teachers and learners can then reflect on visualized user participation and activity data.
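The basic participation view such a dashboard offers can be sketched as follows. The log format (student, resource, action) and the notion of a list of required resources are assumptions made for illustration; they do not reflect the data model of Moodle, MOCLog or Blackboard Analytics.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LogEntry:
    student: str
    resource: str
    action: str  # hypothetical action names such as "view" or "submit"


def participation_summary(log, roster, required):
    """Summarize per-student activity and flag required resources never accessed."""
    accessed = defaultdict(set)
    events = defaultdict(int)
    for entry in log:
        accessed[entry.student].add(entry.resource)
        events[entry.student] += 1
    summary = {}
    # Iterate over the roster so that students with no activity at all are reported too.
    for student in roster:
        summary[student] = {
            "events": events[student],
            "distinct_resources": len(accessed[student]),
            "missing_required": sorted(set(required) - accessed[student]),
        }
    return summary


if __name__ == "__main__":
    log = [
        LogEntry("ana", "reading1", "view"),
        LogEntry("ana", "quiz1", "submit"),
        LogEntry("ben", "reading1", "view"),
        LogEntry("ben", "reading1", "view"),
    ]
    summary = participation_summary(log, ["ana", "ben", "cleo"], {"reading1", "quiz1"})
    for student, row in summary.items():
        print(student, row)
```

Such a summary answers "did they consume it?" but, as noted above, says nothing about the quality of what learners produce.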

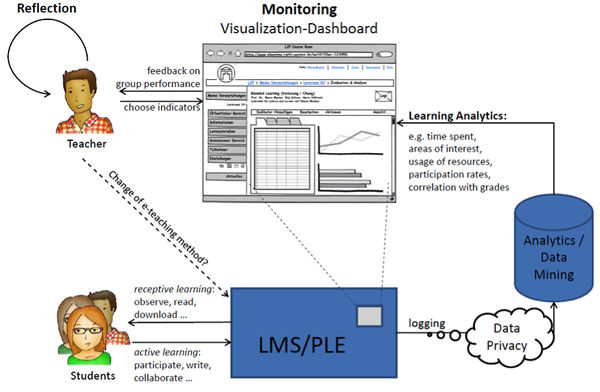

The following figure (Dyckhoff et al., 2012) shows this idea. It summarizes the {{quotation|Learning Analytics Toolkit, which enables teachers to explore and correlate learning object usage, user properties, user behavior, as well as assessment results based on graphical indicators}} ([http://mohamedaminechatti.blogspot.ch/2012/09/design-and-implementation-of-learning.html Design and Implementation of a Learning Analytics Toolkit for Teachers], retrieved March 2014). | |||

[[File:Elat-laprocess.jpg|600px|thumbnail|none|Learning analytics architecture of the eLAT Toolkit (Dyckhoff et al., 2012)]] | |||

Educational workflow systems like [[LAMS]] or VLPE, an experimental system based on [[BPMN]] (Adesina, 2013), provide more and better analytics than traditional LMSs, since it is fairly easy to add data collection and visualization features to systems that are designed around structured human activities.

=== Analytics for street technology ===

Most courses that aim at deep learning, e.g. applicable knowledge and/or higher order knowledge are usually taught in small classes and many teachers seem to use software that is distinct from ones used in mainstream conceptions of e-learning. Typical environments are [[wiki]]s, [[learning e-portfolio]]s, [[CMS|content management systems]], [[blog]]s, [[webtop]]s, [[social networking]] sites and/or any combination of these. In addition, scenarios can include the use of various special-purpose social software, such as bookmarking services, reference managers and feed aggregators. While such software is well suited for supporting a wide range of learning activities, it can become difficult for both learners and teachers to follow and understand “what is going on”. | |||

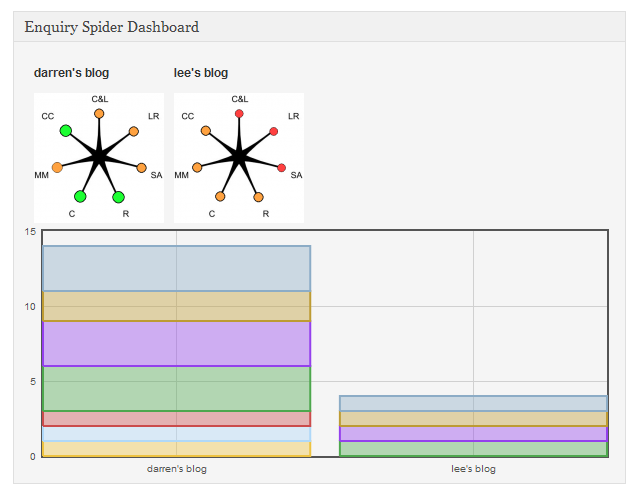

Few systems seem to exist that enhance this kind of “streetware” that is popular in education. [http://oro.open.ac.uk/30598 Ferguson, Buckingham Shum, and Crick (2011)] developed ''[http://wordpress.org/extend/plugins/eb-enquiryblogbuilder/ Enquiry Blog Builder]'', an interesting set of [http://wordpress.org/ WordPress] plugins that enhance inquiry-learning scenarios. The ''MoodView'' plugin can produce a line graph plotting the mood of the student as their enquiry progresses. Students can consult the evolution of their mood over time or insert a new one by selecting an item from a drop-down list (from 'going great' to 'it's a disaster'). Changing a mood creates a new blog entry where the student may articulate his/her reason for the mood change. Both the ''EnquirySpiral'' and the ''EnquirySpider'' are widgets that provide a graphical display of the number of posts made in various blog categories. The ''spiral'', a key metaphor in inquiry learning, concerns the first nine categories and the ''spider'' the following seven. This implies that the sixteen categories should be well chosen in advance. The nine “spiral” categories concern the inquiry process and the seven “spider” categories are based on the Effective Lifelong Learning Inventory diagram. The toolkit also includes a blog generator that will generate a blog for each student and one for the teacher(s). The teacher blog then includes a ''[[educational dashboard|dashboard]]'' showing the progress of the students assigned to that teacher.
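The counting behind the two widgets and the mood timeline is simple enough to sketch. This is not the plugin's own code: it is a Python illustration that assumes the teacher has configured an ordered list of sixteen category names, the first nine feeding the spiral and the last seven the spider. The category names and the three intermediate mood labels are hypothetical; only the two end labels come from the plugin description.

```python
from collections import Counter

# Hypothetical mood scale from worst to best; only the two end labels are documented.
MOOD_SCALE = ["it's a disaster", "struggling", "okay", "good", "going great"]


def widget_counts(posts: list[list[str]], categories: list[str]):
    """Count posts per category, split into the 9 spiral and 7 spider categories."""
    if len(categories) != 16:
        raise ValueError("expected 9 spiral + 7 spider categories")
    counts = Counter(c for post_categories in posts for c in post_categories)
    spiral = {c: counts[c] for c in categories[:9]}
    spider = {c: counts[c] for c in categories[9:]}
    return spiral, spider


def mood_series(entries: list[tuple[str, str]]) -> list[tuple[str, int]]:
    """Turn (ISO date, mood label) entries into plottable (date, score) points, 1 = worst."""
    return [(day, MOOD_SCALE.index(mood) + 1) for day, mood in sorted(entries)]


if __name__ == "__main__":
    categories = [f"spiral-{i}" for i in range(1, 10)] + [f"spider-{i}" for i in range(1, 8)]
    posts = [["spiral-1"], ["spiral-1", "spider-2"], ["uncategorized"]]
    spiral, spider = widget_counts(posts, categories)
    print(spiral["spiral-1"], spider["spider-2"])
    print(mood_series([("2012-03-08", "it's a disaster"), ("2012-03-01", "going great")]))
```

Posts in categories outside the sixteen configured ones are simply ignored, which is one reason why the categories have to be chosen carefully in advance.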

[[image:enquiry-spider-1.png|frame|none|Teacher dashboard view of two students. Source: [http://wordpress.org/extend/plugins/eb-enquiryblogbuilder/screenshots/ Enquiry Blog Builder], June 2012]]

Other systems have emerged, e.g. the [http://hapara.com/ Hapara] Teacher [[Educational Dashboard|Dashboard]], which provides a “view” over a class's Google Apps environment. It mainly automates many configuration tasks, but it also provides a “bird's-eye view” and may include more advanced analytics in the future.

De Liddo et al. (2011) implement a structured [http://oro.open.ac.uk/25829/ deliberation/argument mapping design] in the [http://core.kmi.open.ac.uk/display/41245 Cohere] system. It “renders annotations on the web, or a discussion, as a network of rhetorical moves: users must reflect on, and make explicit, the nature of their contribution to a discussion”. Since the tool allows participants (1) to tag a post with a rhetorical role and (2) to link posts to these roles or to participants, the following learning analytics can be gained per learner and/or per group: learners’ attention, rhetorical attitude, learning topics distribution, and social interactions. These indicators are then used to create higher-level constructs such as learners’ rhetorical moves, distribution, and interactions.
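Two of these per-learner indicators, the distribution of rhetorical roles and the social interactions, can be illustrated with a short sketch. It assumes each contribution is recorded with its author, the rhetorical role the author chose and the author of the contribution it links to; the role labels are hypothetical, and this is neither Cohere's data model nor its API.

```python
from collections import Counter, defaultdict
from typing import NamedTuple, Optional


class Contribution(NamedTuple):
    author: str
    role: str                # rhetorical role chosen by the author (hypothetical labels)
    links_to: Optional[str]  # author of the contribution this one is linked to, if any


def rhetorical_profiles(contribs: list[Contribution]) -> dict[str, dict[str, float]]:
    """Share of each rhetorical role in every learner's contributions."""
    by_author = defaultdict(Counter)
    for c in contribs:
        by_author[c.author][c.role] += 1
    return {
        author: {role: n / sum(roles.values()) for role, n in roles.items()}
        for author, roles in by_author.items()
    }


def interactions(contribs: list[Contribution]) -> Counter:
    """Directed learner-to-learner links, ignoring links to one's own contributions."""
    return Counter(
        (c.author, c.links_to) for c in contribs if c.links_to and c.links_to != c.author
    )


if __name__ == "__main__":
    contribs = [
        Contribution("ana", "question", None),
        Contribution("ben", "idea", "ana"),
        Contribution("ana", "counter-argument", "ben"),
        Contribution("ana", "idea", "ana"),
    ]
    print(rhetorical_profiles(contribs))
    print(interactions(contribs))
```

Because learners tag their own moves, these indicators come almost for free; the cost is moved to the participants, who must reflect on the nature of each contribution.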

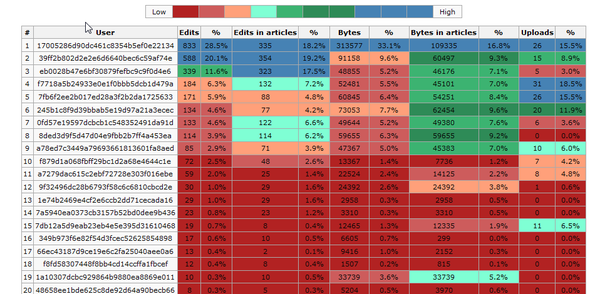

The popularity of Wikipedia, initiated a whole field of “Wikipedia science” that attracted many researchers from the data mining and the data visualization community. However, tools used in their research are mostly not suitable for teachers, since they require fairly high-level technical skills. There are a few easier to use examples that work with specific Wiki technologies. E.g. for [[Mediawiki]]s (the Wikipedia engine), [[StatMediaWiki]] (Rodríguez-Posada et al., 2011) can generate static XHTML pages including tables and graphics, showing content evolution timelines, activity charts for users and pages, rankings, tag clouds, etc. We tested this system on our own MediaWikis described in Schneider et al. (2011) and found it very useful, in spite of the features lacking, identified in part by the authors. | |||
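The kind of statistics StatMediaWiki reports (user rankings, activity per period, content evolution) can be approximated from a list of revisions. The sketch assumes the revisions have already been exported as hypothetical (page, user, date, size change in bytes) records and that each page starts empty; it does not reproduce StatMediaWiki itself or query the MediaWiki API.

```python
from collections import Counter
from datetime import date
from typing import NamedTuple


class Revision(NamedTuple):
    page: str
    user: str
    day: date
    size_delta: int  # bytes added (positive) or removed (negative)


def user_ranking(revisions: list[Revision], top: int = 10) -> list[tuple[str, int]]:
    """Users ranked by number of edits (ties keep first-seen order)."""
    return Counter(r.user for r in revisions).most_common(top)


def monthly_activity(revisions: list[Revision]) -> dict[str, int]:
    """Number of edits per calendar month, for an activity chart."""
    months = Counter(r.day.strftime("%Y-%m") for r in revisions)
    return dict(sorted(months.items()))


def page_growth(revisions: list[Revision], page: str) -> list[tuple[date, int]]:
    """Cumulative page size over time, for a content evolution timeline."""
    size, series = 0, []
    for rev in sorted(revisions, key=lambda rev: rev.day):
        if rev.page == page:
            size += rev.size_delta
            series.append((rev.day, size))
    return series


if __name__ == "__main__":
    revs = [
        Revision("Wiki", "ana", date(2011, 10, 3), 500),
        Revision("Wiki", "ben", date(2011, 10, 5), 120),
        Revision("Blog", "ana", date(2011, 11, 1), 300),
        Revision("Wiki", "ana", date(2011, 11, 2), -50),
    ]
    print(user_ranking(revs))
    print(monthly_activity(revs))
    print(page_growth(revs, "Wiki"))
```

Edit counts alone reward many small edits; combining them with the size changes, as in `page_growth`, gives a slightly better picture of who actually writes.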

[[image:statmediawiki-1.png|thumb|600px|none|Statmediawiki analytics: EdutechWiki (fr), top users (anonymized)]]

=== Self-reporting strategies ===

One solution for coping with the lack of analytics and management tools is to rely on self-reporting. For example, at Curtin University, to encourage students to reflect on and assess their own achievement of learning in the e-portfolio environment, the iPortfolio system incorporates a self-rating tool based on Curtin's “graduate attributes”. The tool enables “the owner to collate evidence and reflections and assign themselves an overall star-rating based on Dreyfus and Dreyfus' Five-Stage Model of Adult Skill Acquisition (Dreyfus, 2004). The dynamic and aggregated results are available to the user: [...], the student can see a radar graph showing their self-rating in comparison with the aggregated ratings of their invited assessors (these could include peers, mentors, industry contacts, and so on).” (Oliver & Wheelan, 2010). This strategy is used by several other projects, e.g. de Pedro Puente (2007) asked students to tag wiki contributions from a list of ''contribution types'', e.g. “New hypotheses”, “New information” or “help partner”. From these indicators he then computed process indices that were combined with the grading of the final product.
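The comparison shown in the iPortfolio radar graph boils down to setting a self-rating next to the average of the assessors' ratings for each attribute. The sketch below assumes ratings from 1 to 5 stars mapped onto the five stages of the Dreyfus model; the attribute names in the usage example are hypothetical and the code is not taken from the iPortfolio system.

```python
from statistics import mean

# The five stages of the Dreyfus model, indexed by a 1-5 star rating.
DREYFUS_STAGES = ["novice", "advanced beginner", "competent", "proficient", "expert"]


def compare_ratings(self_rating: dict[str, int], assessor_ratings: list[dict[str, int]]):
    """Per attribute: own rating, its Dreyfus stage, mean assessor rating and the gap."""
    report = {}
    for attribute, own in self_rating.items():
        if not 1 <= own <= 5:
            raise ValueError(f"rating for {attribute} must be 1-5 stars")
        others = [r[attribute] for r in assessor_ratings if attribute in r]
        avg = mean(others) if others else None
        report[attribute] = {
            "self": own,
            "self_stage": DREYFUS_STAGES[own - 1],
            "assessors": avg,
            # A positive gap means the student rates the attribute higher than the assessors.
            "gap": None if avg is None else own - avg,
        }
    return report


if __name__ == "__main__":
    report = compare_ratings(
        {"communication": 4, "teamwork": 3},
        [{"communication": 2, "teamwork": 3}, {"communication": 3}],
    )
    for attribute, row in report.items():
        print(attribute, row)
```

Large positive or negative gaps are the interesting cases for reflection: they show where the student's own perception and that of peers or mentors diverge.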

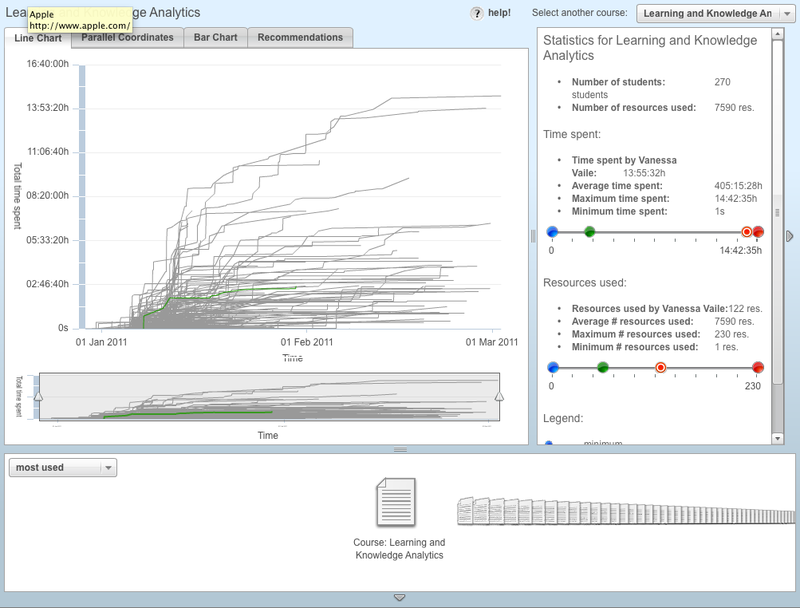

Another, more portable strategy was developed in the framework of the EU ROLE project. Govaerts et al., (2010) describe a ''Student Activity Monitor'' (SAM). Its main purpose is to increase self-reflection and awareness among students and it can also provide learning resource recommendations. It allows teachers to examine student activities through different visualizations. Although SAM was developed in a project that focused on developing a kit for constructing personal learning environments it can be used in other contexts where the learning process is largely driven by rather autonomous learning activities. SAM is implemented as a Flex software application and it will aggregate data from environments that include the CAM widget developed in the same project. In principle, widgets can be inserted in most environments. However, so far, we did not try to test this with our own environments. | Another, more portable strategy was developed in the framework of the EU ROLE project. According to the [http://www.role-showcase.eu/home Showcase platform] and an [http://labspace.open.ac.uk/course/view.php?id=7433 introduction to ROLE course] (retrieved 4/2013), ROLE stands for ''Responsive Open Learning Environments'' and seeks to put learners in the position to build their own technology enhanced learning environment based on their needs and preferences. | ||

A number of tools have been developed so far in the ROLE project and they are available through the [http://www.role-widgetstore.eu/tools ROLE widget store]. Check this out! Govaerts et al. (2010) describe a ''[http://www.role-showcase.eu/role-tool/student-activity-monitor Student Activity Monitor]'' (SAM). Its main purpose is to increase self-reflection and [[awareness]] among students, and it can also provide learning resource recommendations. It allows teachers to examine student activities through different visualizations. Although SAM was developed in a project that focused on building a kit for constructing personal learning environments, it can be used in other contexts where the learning process is largely driven by rather autonomous learning activities. SAM is implemented as a Flex application and aggregates data from environments that include the CAM widget developed in the same project. In principle, widgets can be inserted in most environments. However, we have not yet tested this with our own environments.

[[image:SAM-main.png|thumb|800px|none|Student Activity Meter. Source: [http://sourceforge.net/apps/mediawiki/role-project/index.php?title=Widget_User_Guide:Student_Activity_Meter Widget User Guide:Student Activity Meter] ]]

=== Client-side JavaScript Widgets ===

The least technically intrusive portable analytics systems can be implemented through client-side JavaScript. A good example is [http://research.uow.edu.au/learningnetworks/seeing/snapp/index.html SNAPP] (Dawson, 2009). Implemented as a JavaScript bookmarklet, it can analyze some types of structured forum pages (Blackboard, Moodle and Desire2Learn) in the web browser and display a network diagram. SNAPP is a nice tool to have when no other tools are available, and it is a good tool for analyzing “deep” forum debates. Since it cannot handle forums that spread over several web pages, teachers have to adapt to SNAPP and organize forums (including Q/A forums) as single nested topics.
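What a tool like SNAPP extracts from a nested forum can be illustrated with a small sketch: every reply becomes a link from the replying author to the author of the parent post, and the resulting network can then be drawn or summarized. The `Post` structure stands in for an already parsed forum thread and is an assumption; SNAPP itself works on the forum's HTML in the browser and is not reproduced here. The degree count is just one simple centrality measure, chosen for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Post:
    author: str
    replies: list["Post"] = field(default_factory=list)


def reply_edges(post: Post, edges: Optional[Counter] = None) -> Counter:
    """Walk a nested thread and count (replier, replied-to author) links."""
    if edges is None:
        edges = Counter()
    for reply in post.replies:
        if reply.author != post.author:
            edges[(reply.author, post.author)] += 1
        reply_edges(reply, edges)
    return edges


def degree(edges: Counter) -> Counter:
    """Number of links (incoming and outgoing) per participant."""
    totals = Counter()
    for (source, target), weight in edges.items():
        totals[source] += weight
        totals[target] += weight
    return totals


if __name__ == "__main__":
    thread = Post("teacher", [
        Post("ana", [Post("teacher"), Post("ben")]),
        Post("ben"),
    ])
    edges = reply_edges(thread)
    print(dict(edges))
    print(degree(edges).most_common())
```

The sketch also shows why a single nested topic matters: the network can only be reconstructed when the reply structure of the whole discussion is available in one place.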

[[image:SNAPP-1.gif|frame|none|Discussion forum and same discussion as a network diagram using SNAPP. Source: [http://research.uow.edu.au/learningnetworks/seeing/snapp/index.html About SNAPP] (June 2012)]]

=== Project management and Business Process modelling ===

An older, powerful approach is to rely on project management principles, i.e. organize an educational design as a workflow process and ''use'' workflow tools to implement it.

Helic et al. (2005) describe a web-based training system that includes a ''virtual project management room''. The system includes what they called ''support for data analysis'', i.e. tools that allow teachers to help learners follow the right path and learners to better understand, present, and apply the results. Web-based agile project management tools such as Pivotal Tracker are also finding their way into education. Agile design methodologies like SCRUM (Schwaber & Beedle, 2002) may also turn out to be closer to project-oriented learning (Pope-Ruark, in press) than traditional project methodology. Since project tools by definition include at least minimal analytics, they can be used off the shelf as orchestration and monitoring tools, although some adaptation and repurposing would be needed for either use.
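The minimal analytics that agile trackers provide typically include velocity (work completed per iteration) and a burndown of the remaining work. The sketch below computes both for a hypothetical student team; the story titles and point values are invented for illustration, and it does not use Pivotal Tracker's API or data format.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class Story(NamedTuple):
    title: str
    points: int
    done_in: Optional[int]  # iteration in which the story was accepted; None if still open


def velocity(stories: list[Story]) -> dict[int, int]:
    """Points completed per iteration."""
    completed = defaultdict(int)
    for story in stories:
        if story.done_in is not None:
            completed[story.done_in] += story.points
    return dict(sorted(completed.items()))


def burndown(stories: list[Story], iterations: int) -> list[int]:
    """Points still open at the end of each iteration 1..iterations."""
    remaining = sum(story.points for story in stories)
    done = velocity(stories)
    series = []
    for i in range(1, iterations + 1):
        remaining -= done.get(i, 0)
        series.append(remaining)
    return series


if __name__ == "__main__":
    stories = [
        Story("literature review", 3, 1),
        Story("interview plan", 2, 1),
        Story("prototype", 5, 2),
        Story("final report", 3, None),
    ]
    print(velocity(stories))     # points done per iteration
    print(burndown(stories, 3))  # open points after each iteration
```

For a teacher, a flat burndown over several iterations is an early signal that a group is stuck, without having to read every item on its board.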

[[File:chemwriter-tracker.png|thumb|none|600px|Pivotal tracker screenshot. Source: [http://depth-first.com/articles/2010/11/19/building-chemwriter-2-pivotal-tracker-for-project-management/ Building ChemWriter 2] ]] | |||

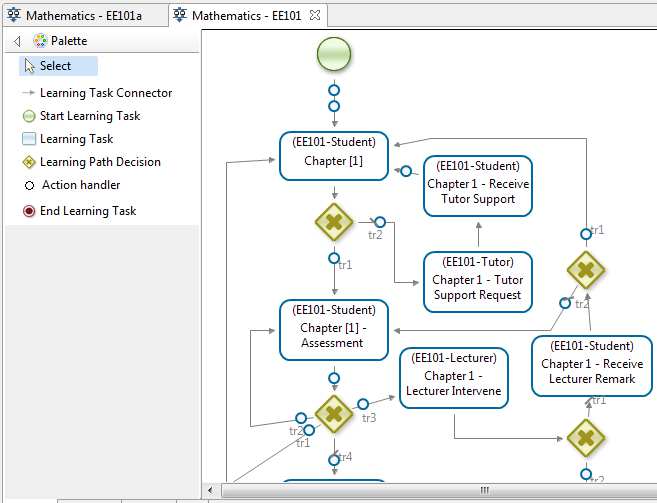

Adesina and Molloy (2012) present a Virtual Learning Process Environment (VLPE) based on the Business Process Management (BPM) conceptual framework, where course designers use the [[BPMN]] notational design language. Since a BPM system implements workflows, it allows {{quotation|behavioural learning processes of the cohort of students – right from the inception of the teaching and learning process – to be continuously monitored and analysed until completion}} (p. 429).
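Monitoring a cohort against a designed process can be sketched without a BPM engine. The code below reduces the process to a single ordered sequence of activities (real BPMN models also have branches, gateways and parallel flows) and reports, for each student, how far they have progressed without gaps, which earlier steps were skipped, and whether steps were done out of order. The step names are hypothetical and this is not the VLPE implementation.

```python
def monitor(process: list[str], completed: dict[str, list[str]]) -> dict[str, dict]:
    """Compare each student's completed activities with the designed sequence."""
    position = {step: i for i, step in enumerate(process)}
    report = {}
    for student, steps in completed.items():
        done = set(steps)
        # Progress: how many steps of the designed sequence are done without a gap.
        progress = 0
        for step in process:
            if step not in done:
                break
            progress += 1
        # Positions of known steps, in the order the student completed them.
        known = [position[s] for s in steps if s in position]
        furthest = max(known, default=0)
        report[student] = {
            "progress": progress,
            "skipped": [s for s in process[:furthest] if s not in done],
            "in_order": known == sorted(known),
        }
    return report


if __name__ == "__main__":
    process = ["enrol", "read brief", "submit plan", "peer review", "final report"]
    completed = {
        "ana": ["enrol", "read brief", "submit plan"],
        "ben": ["enrol", "peer review"],
        "cleo": ["read brief", "enrol"],
    }
    for student, row in monitor(process, completed).items():
        print(student, row)
```

Because the designed process is explicit, such checks can run continuously from the first activity onwards, which is the advantage the quotation above attributes to workflow-based systems.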

[[image:waset-BPMN.jpg|frame|none|VLPE Design Process Screenshot. Source: [http://www.waset.org/journals/ijshs/v6/v6-67.pdf A. Adesina and D. Molloy (PDF)]]]

=== Summary of strategies and principles ===

To conclude this discussion of a few example systems, we summarize a few strategies and principles:

* Systems that structure learning activities and contents in one way or another usually include some kind of analytics. In addition, structured environments provide, by definition, more structured information to the participants.

* Asking users is an easy strategy that can provide good information with respect to learners’ own perceptions of their learning, their contributions, and their interactions.

* Student productions are key indicators for learning.

* Project-management tools, i.e. tools that require learners to self-report in a structured way, do include both structured data and at least some overall analytics.

* Modern web technology allows for the insertion of widgets into various online environments. Widgets can “talk” to other services and therefore can be used to create aggregating [[educational dashboard|dashboard]]s, e.g. for the teacher.

* Analytics are meant to be used by both learners and teachers.

* Analytics can provide various levels of assistance and insight: from simple mirroring tools, to metacognitive tools, to guiding systems (Soller et al., 2005).

The bottom line that emerges from this short discussion of a few systems is that “locally” used analytics are already available in more sophisticated systems built for education, in particular in CSCL. Some isolated devices (like plugins or widgets) are available to enhance simpler writing and collaboration environments like wikis, CMSs and blogs, as well as collection services (news aggregators, webtops, link and artifact sharing, social networking). This type of street technology is much more popular in project-oriented education than systems that grew out of educational technology research; however, much of this “portalware” lacks the analytics components that we would like to have.

In order to exemplify some of our needs, we briefly present three learning scenarios that have been designed or co-designed by the authors. A fourth case concerns a whole training program and illustrates how learning process analytics joins “mainstream” analytics.

===Problem-based learning activity (MAS in interpreter training)===

'''Learning objectives:''' Learners should understand the mechanisms of modeling and be able to apply them to the development of pedagogical tools.

'''Description:''' Groups of three to four students work collaboratively on one scenario. Each group selects a common difficulty in simultaneous or consecutive interpreting, identifies the cognitive processes most likely responsible for the cognitive constraint, and finally remodels the process in order to offer solutions to this constraint.


'''Existing analytics:''' Almost none.

'''Needs:''' Teachers are interested not only in the final outcome of the activity but also, maybe even more, in the process: how did learners manage to achieve this outcome? Which concrete steps did they take? Teachers cannot read everything learners produce on the portal, and some of the work is done outside the portal. The tools teachers mostly rely upon now are therefore the forum, to check the evolution of the work, and the journal, for the process. However, this is tedious, and looking at isolated bits of data tends to “hide the forest”. Moreover, what teachers can track is not the process itself but the reporting of the process. This raises two problems: teachers hardly ever have access to the process itself, and the process is seldom reported in full. This suggests that, in addition to data mining, some easy-to-use self-reporting tools are needed.

===Mini-project based learning (Blended course on Internet technology, MA in EduTech)===

Four courses on Internet technology use a similar design. A central page outlines the program, course-level outcomes and general rules. Topics are taught through problem exercises, typically nine mini-projects for a 6-ECTS course. All the information needed for an exercise is centralized in an exercise/topic page.


'''Existing analytics:''' We use simple MediaWiki functionality like page history and user contributions, in addition to the collaboration diagram extension we installed. The external StatMediaWiki tool runs as a batch job accessing the wiki database and provides a good amount of data with respect to user contributions and the evolution of articles. A PHP script can summarize data from the XML project files.
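The PHP script itself is not described here. As a rough illustration of the kind of summary such a script can produce, the Python sketch below assumes a hypothetical project-file layout (a `<project>` root with a `student` attribute, `<exercise>` elements with a `status` attribute, and `<production href="...">` children); the real course files may be structured quite differently.

```python
import xml.etree.ElementTree as ET
from collections import Counter

def summarize_project(xml_text):
    """Summarize one student's project file: number of exercises,
    exercises per status, and number of linked productions."""
    root = ET.fromstring(xml_text)
    statuses = Counter()
    productions = 0
    for exercise in root.findall("exercise"):
        # Exercises without a status attribute are treated as not started.
        statuses[exercise.get("status", "todo")] += 1
        productions += len(exercise.findall("production"))
    return {
        "student": root.get("student"),
        "exercises": sum(statuses.values()),
        "status_counts": dict(statuses),
        "productions": productions,
    }

# Hypothetical file content; element and attribute names are invented for this sketch.
sample = """<project student="alice">
  <exercise id="ex1" status="done"><production href="ex1/index.html"/></exercise>
  <exercise id="ex2" status="in-progress"/>
  <exercise id="ex3" status="done">
    <production href="ex3/a.svg"/><production href="ex3/b.svg"/>
  </exercise>
</project>"""

print(summarize_project(sample))
```

Running this over one file per student gives the raw rows of a class overview. Like the XML work page itself, it only sees linked productions, not work in progress.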

'''Needs:''' Currently, the teacher can monitor student progress by inspecting their XML work page. However, since it only includes links to productions, work in progress is not captured. In addition, the teacher can inspect the web server’s file system and make guesses from forum (non-)participation. The wiki can display individual student contributions and can list or visualize edits made to pages. At grading time, we also use the StatMediaWiki analytics tool to evaluate wiki participation. To improve both students’ self-monitoring and teacher monitoring, we would like to have a simple combination of a portfolio system and a task-based project management tool. With respect to wiki participation, we would like to have a [[educational dashboard|dashboard]] showing the number and volume of edits. The StatMediaWiki tool should also be improved to include collaboration diagrams, support for group-wide analysis, and more informative word lists that summarize contributions.
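A dashboard showing the number and volume of edits per student could, for instance, be fed by the MediaWiki web API. The sketch below assumes a response of the `list=usercontribs` query module, requested with something like `action=query&list=usercontribs&ucuser=Alice|Bob&ucprop=title|sizediff&format=json` and already parsed into a Python dictionary, with each entry carrying `user`, `title` and `sizediff` fields. The function name and output layout are illustrative only; fetching the data (including paging through long result sets) is left out.

```python
from collections import defaultdict

def edit_dashboard(api_response):
    """Aggregate per-user edit counts and size changes from a parsed
    list=usercontribs response (sizediff = bytes changed vs. the parent revision)."""
    rows = defaultdict(lambda: {"edits": 0, "added": 0, "removed": 0, "pages": set()})
    for contrib in api_response["query"]["usercontribs"]:
        row = rows[contrib["user"]]
        row["edits"] += 1
        diff = contrib.get("sizediff", 0)  # may be missing, e.g. for some old revisions
        if diff >= 0:
            row["added"] += diff
        else:
            row["removed"] += -diff
        row["pages"].add(contrib["title"])
    # Replace the page sets by counts so each row is easy to display in a table.
    return {user: {**row, "pages": len(row["pages"])} for user, row in rows.items()}

# Hypothetical, trimmed-down response for two students.
sample = {"query": {"usercontribs": [
    {"user": "Alice", "title": "Exercise 1", "sizediff": 1200},
    {"user": "Alice", "title": "Exercise 1", "sizediff": -200},
    {"user": "Alice", "title": "Exercise 2", "sizediff": 300},
    {"user": "Bob", "title": "Exercise 1", "sizediff": 50},
]}}

for user, row in edit_dashboard(sample).items():
    print(user, row)
```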

===Project-based learning (course in educational sociology and history, BA in education)===

'''Learning objectives:''' Students should acquire both an in-depth understanding of a topic in special education and general skills, such as working within a “collective intelligence” environment, conducting interviews, article writing and so forth.


'''Needs:''' The standard (and insufficient) MediaWiki tools, such as page history and recent contributions, plus a collaboration diagram extension, are used. In future editions, we plan to use the StatMediaWiki tool.

===Certificate in e-learning===

'''Learning objectives:''' Apply the theories, processes and tools of ICT-based teaching and learning to the development of blended and distance learning.


Teachers and learners need analytics for project-oriented teaching and learning in four domains: production, interaction, reflection, and management and regulation. The list below is divided into ''wanted tomorrow'' (i.e. urgently needed support) and ''wanted in the future'' (i.e. a vision for ICT-supported project-based development). It is meant as a call for sustainable capacity building.

===Productions===

Ideally, an analytics system should provide data from each type of environment used. Using an [[LMS]] is not an option, since (for good reasons) students cannot upload executable files. An LMS is also not a productive collective writing environment, nor does it allow student productions to be shared with the world.

'''Wanted tomorrow''':

(1) A lightweight productions/[[learning e-portfolio|portfolio system]] that also includes a simple task management system and a rubrics-based [[Learner assessment|assessment/grading tool]] like the one that is available in [[Blog:DKS/Moodle 2.2 is out and it includes grading rubrics|Moodle 2]]. Productions can be produced and deposited in any kind of environment as long as all participants have at least read access. Both learners and teachers should have access to a [[educational dashboard|dashboard]] showing task progress, productions, and grades. A stand-alone tool would probably add maximum flexibility, but it could also be designed as a portal module or as a web service that interacts with other systems. For example, Synteta and Schneider (2002) describe a simple portal extension that required students to edit an XML-based project file from which dashboard data was extracted, but it is no longer working. One could also imagine repurposing an agile project management tool such as [[Pivotal tracker]].

(2) A tool like [[StatMediaWiki]] that provides visualizations of content evolution. The tool should be enhanced with a better tag cloud generator, take into account categories and user groups, and show collaboration graphs.
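How StatMediaWiki builds its tag clouds is not described here. A common, simple approach, sketched below in Python as an assumption rather than a description of that tool, counts word frequencies in the text of a chosen set of pages (for example all pages of a category, or all contributions of one user group), drops stop words, and maps frequencies to font sizes. The stop-word list and size range are placeholders.

```python
import re
from collections import Counter

# Illustrative stop-word list only; a real tool would use fuller,
# language-specific lists (e.g. one for French and one for English).
STOPWORDS = {"the", "and", "for", "that", "this", "with", "are", "les", "des", "une"}

def tag_cloud(text, top=10, min_size=10, max_size=32):
    """Return (word, font_size) pairs for the most frequent words of a text.
    Words shorter than three letters and stop words are ignored; font sizes
    are scaled linearly between min_size and max_size by frequency."""
    words = re.findall(r"[^\W\d_]+", text.lower())  # letter runs, Unicode-aware
    counts = Counter(w for w in words if len(w) > 2 and w not in STOPWORDS)
    common = counts.most_common(top)
    if not common:
        return []
    hi, lo = common[0][1], common[-1][1]
    span = max(hi - lo, 1)  # avoid division by zero when all counts are equal
    return [(w, min_size + (n - lo) * (max_size - min_size) // span) for w, n in common]

print(tag_cloud("Wiki pages link pages. The wiki grows, and pages change."))
```

Feeding it the concatenated text of a category or of a group's contributions gives the category- and group-aware word lists asked for above.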

'''Wanted in the future''':

(1) An [[E-framework]]-like [[service-oriented architecture]] that would allow students to manage their [[personal learning environment]] and would include some kind of course-specific “segment” allowing other students and teachers to interact.

(2) Web API-based content and collaboration analytics for writing and discussion environments such as [[wiki]]s, [[CMS]] and forums. Such functionality would allow the creation of centralized [[educational dashboard|dashboard]]s, network views and visualizations of content structure/links. “In a Web 3.0 world the relationships and dynamics among ideas are at least as important as those among users. As a way of understanding such relationships we can develop an analogue of [[Social network analysis|social network analysis]]—idea network analysis. This is especially important for knowledge building environments where the concern is social interactions that enable idea improvement” (Scardamalia et al., 2002).
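The quoted “idea network analysis” is not specified further. One minimal reading, assumed here, treats notes or wiki pages as nodes and “builds on” links (or hyperlinks) as directed edges, and reuses the simplest social network measure, degree centrality: a note that many others build on has a high in-degree. The function and data below are hypothetical.

```python
from collections import Counter

def idea_network_metrics(links):
    """links: iterable of (source, target) pairs, read as 'note source builds on
    (or links to) note target'. Returns, for every note, its in-degree (how often
    other notes build on it) and out-degree (how many notes it builds on).
    Duplicate links are counted once and self-links are ignored."""
    indeg, outdeg = Counter(), Counter()
    nodes = set()
    for src, dst in set(links):
        if src == dst:
            continue
        outdeg[src] += 1
        indeg[dst] += 1
        nodes.update((src, dst))
    return {n: {"in": indeg[n], "out": outdeg[n]} for n in sorted(nodes)}

# Hypothetical build-on links between four notes (the last one is a duplicate).
links = [("B", "A"), ("C", "A"), ("C", "B"), ("D", "C"), ("C", "A")]
for note, degree in idea_network_metrics(links).items():
    print(note, degree)
```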

===Interactions===

In all our scenarios, students engage in some form of collaboration. In some cases (not described above), students engage in more complex scenarios where they have to play various roles (e.g. “discussant”). We would also like students to be aware of how they contribute to texts and hypertexts. Typically, we find that when students engage in loosely defined scenarios, they tend to interact as little as possible with each other’s text segments. Rewriting others’ productions is an interesting pedagogical activity that all participants should be able to monitor.

'''Wanted tomorrow''':

Collaboration diagrams for wikis that work across individual pages, categories of pages and groups of participants. Collaboration diagrams for forums, e.g. tools that behave like [[SNAPP]] but work across forum topics.
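A collaboration diagram that works across pages, categories and groups can be derived from plain revision data. The sketch below is one assumed way of doing it, not how any existing MediaWiki extension works: it connects two users whenever they edited the same page and weights the edge by the number of shared pages. Optional filters restrict the analysis to a set of pages (e.g. one category) or a set of users (e.g. one group).

```python
from collections import defaultdict
from itertools import combinations

def collaboration_edges(revisions, pages=None, group=None):
    """revisions: iterable of (page, user) pairs, e.g. one pair per wiki revision.
    pages: optional set of page titles (for example the members of one category).
    group: optional set of user names (for example one project team).
    Returns {(user_a, user_b): number of pages both users edited}."""
    editors = defaultdict(set)
    for page, user in revisions:
        if pages is not None and page not in pages:
            continue
        if group is not None and user not in group:
            continue
        editors[page].add(user)
    edges = defaultdict(int)
    for users in editors.values():
        # sorted() gives each unordered pair a single, stable key
        for a, b in combinations(sorted(users), 2):
            edges[(a, b)] += 1
    return dict(edges)

revisions = [("P1", "ann"), ("P1", "bob"), ("P1", "ann"),
             ("P2", "bob"), ("P2", "cy"), ("P3", "ann"), ("P3", "bob")]
print(collaboration_edges(revisions))                      # all pages, all users
print(collaboration_edges(revisions, pages={"P1", "P2"}))  # one "category"
```

The same function works for forums if each (page, user) pair is replaced by a (topic, poster) pair, which gives the cross-topic view that per-thread tools lack. The resulting weighted edge list can be passed to any graph drawing tool.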

'''Wanted in the future:'''

Collaboration diagrams that work across systems. This may require the use of some standardized digital identity like OpenID and will raise privacy issues. An alternative is to rely on self-reporting. However, in that case self-reporting should be easy and contextual, e.g. a wiki should offer a button that allows users to tell what they just did and maybe why. This data should then be sent to an external collection device.

===Reflections===

In most of our scenarios, students should at some point engage in reflection. Reflections can be part of other writing activities and may be difficult to detect. This is why many pedagogical workflow systems, e.g. the [[LAMS]] learning activity platform (Dalziel, 2004), can force students to write notebook entries after each activity. Another method is to require learners to tag certain productions (e.g. forum entries or blog posts) as “reflective”. Some environments include progress or mood widgets (e.g. Enquiry Builder) that can collect and centralize learner-provided metrics. One could also imagine using an experience sampling approach, i.e. querying students at given intervals or after completion of an operation.
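Experience sampling can combine fixed-interval prompts with event-contingent prompts triggered when an operation is completed. The sketch below shows both scheduling rules; the event name `task_completed`, the one-hour minimum gap and the function names are hypothetical choices made for the example.

```python
from datetime import datetime, timedelta

def sampling_times(start, end, every):
    """Interval-contingent prompts: start, start + every, ... up to end (inclusive)."""
    if every <= timedelta(0):
        raise ValueError("interval must be positive")
    times, t = [], start
    while t <= end:
        times.append(t)
        t += every
    return times

def should_prompt(event, now, last_prompt, min_gap=timedelta(hours=1)):
    """Event-contingent prompt: ask for a short reflection after a completed
    operation, but not more often than once per min_gap."""
    if event != "task_completed":  # hypothetical event name
        return False
    return last_prompt is None or now - last_prompt >= min_gap

day = datetime(2012, 3, 1, 9, 0)
for t in sampling_times(day, day + timedelta(hours=8), timedelta(hours=4)):
    print(t.strftime("%H:%M"))  # 09:00, 13:00, 17:00
```

The minimum gap is there so that a burst of completed operations does not flood learners with prompts.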

'''Wanted tomorrow:'''

Portable widgets like [http://wordpress.org/extend/plugins/eb-enquiryblogbuilder/ Enquiry Blog Builder] with an (optional) server-side component that could be run by the teachers concerned or by their organization.

'''Wanted in the future:'''

Design of reflection tools and analytics should be included in the discussion about e-portfolio systems and personal learning environments. Many learning institutions, particularly in the applied sciences, now define institutional competence catalogues that could be linked to students’ reflective activities and to which learners should be able to add their own goals.

===Management and regulation===

Management and regulation refers to both teacher and learner interventions that aim to organize the learning process. First, it would be interesting to know (within reason) who is doing what and where learners are in the process. This is an easy task in educational workflow systems such as LAMS or CELS, but not in the open designs described here, nor in mainstream e-learning platforms such as Moodle. Second, learners and teachers should be able to monitor Q/A “help-desk”-like activities and productions. Third, when learners are given group work, group participants should have the means to distribute and monitor tasks.

'''Wanted tomorrow:'''

A simple monitoring [[educational dashboard|dashboard]] for the lightweight productions/portfolio/planning system described in point (1) of the ''Productions'' section. It may be possible to repurpose an existing agile development tool.
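One row of such a dashboard could combine task progress with a rubric-based grade, as called for in point (1) of the ''Productions'' wishes. The data model below is entirely hypothetical, and the weighted-average scoring is only one common way to turn rubric levels into a grade, not necessarily how Moodle computes rubric grades.

```python
from dataclasses import dataclass, field

@dataclass
class Task:
    name: str
    done: bool = False

@dataclass
class Criterion:
    name: str
    weight: float   # relative importance of this criterion
    max_level: int  # highest level of this criterion's scale
    level: int = 0  # level awarded by the teacher

@dataclass
class LearnerRecord:
    learner: str
    tasks: list = field(default_factory=list)
    rubric: list = field(default_factory=list)

    def progress(self):
        """Share of tasks marked as done (0.0 when no task is defined)."""
        if not self.tasks:
            return 0.0
        return sum(t.done for t in self.tasks) / len(self.tasks)

    def grade(self, scale=100.0):
        """Weighted rubric score mapped onto 0..scale."""
        total = sum(c.weight for c in self.rubric)
        if total == 0:
            return 0.0
        score = sum(c.weight * c.level / c.max_level for c in self.rubric) / total
        return round(score * scale, 2)

rec = LearnerRecord(
    "alice",
    tasks=[Task("plan", True), Task("draft", True), Task("review", True), Task("publish")],
    rubric=[Criterion("content", 2, 4, 3), Criterion("form", 1, 4, 2)],
)
print(rec.learner, rec.progress(), rec.grade())  # alice 0.75 66.67
```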

'''Wanted in the future:'''

(1) A [[LAMS]]-like monitoring tool that works across environments.

(2) Q/A help-desk-like forums that show the state of the problems addressed and solved. Such tools do exist, of course, but they are not integrated within production environments like wikis, blogs, or CMSs, which means that questions and answers are too decontextualized. The advantage of a discussion/forum page in a wiki, for example, is that participants can easily hyperlink to other contents. Existing tools may also not provide the views a teacher needs. For example, getting an overview of open problems is fine, but one should also be able to see who is active, who helps others, etc.
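The teacher's view of a help-desk forum described above (open versus solved problems, who is active, who helps others) can be computed from very little data. The sketch below assumes a hypothetical thread representation with an author, a solved flag and a list of reply authors; answering in someone else's thread counts as helping, replying in one's own thread does not.

```python
from collections import Counter

def helpdesk_overview(threads):
    """threads: list of dicts with keys 'author', 'solved' (bool) and 'replies'
    (list of the names of people who replied). Returns the number of open and
    solved problems, the most active participants, and how often each person
    answered in someone else's thread."""
    open_count = sum(1 for t in threads if not t["solved"])
    activity, helping = Counter(), Counter()
    for t in threads:
        activity[t["author"]] += 1
        for replier in t["replies"]:
            activity[replier] += 1
            if replier != t["author"]:
                helping[replier] += 1
    return {
        "open": open_count,
        "solved": len(threads) - open_count,
        "most_active": activity.most_common(3),
        "helps_others": dict(helping),
    }

threads = [
    {"author": "ann", "solved": True, "replies": ["bob", "ann"]},
    {"author": "cy", "solved": False, "replies": ["bob"]},
    {"author": "bob", "solved": False, "replies": []},
]
print(helpdesk_overview(threads))
```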

===Conclusion===

In conclusion, we believe that merging ideas from various technical domains such as web analytics, general-purpose data mining, the semantic web, project management and educational technology research could provide tremendous opportunities for all sorts of project-oriented designs such as project-based learning, problem-based learning, inquiry learning, writing-to-learn, or knowledge community learning. Many interesting tools for ''learning process metrics'' could be implemented at fairly low cost. Prototypes of several tools already exist but need to be developed further. We hope to contribute a little towards raising awareness of various issues and opportunities.

==Bibliography==

Adesina, A. & Molloy, D. (2012). Virtual Learning Process Environment: Cohort Analytics for learning and learning processes. ''International Journal of Social and Human Sciences'', 6, 429-438. [http://www.waset.org/journals/ijshs/v6/v6-67.pdf PDF]

1<sup>st</sup> International conference on Learning Analytics, retrieved April 12, 2012 from https://tekri.athabascau.ca/analytics/


Dalziel, J. (2003). Implementing Learning Design: The Learning Activity Management System (LAMS). ''Proceedings of ASCILITE 2003''. Available at http://www.melcoe.mq.edu.au/documents/ASCILITE2003%20Dalziel%20Final.pdf

Dawson, S. (2009). ‘Seeing’ the learning community: An exploration of the development of a resource for monitoring online student networking. ''British Journal of Educational Technology'', 41(5), 736-752.

De Liddo, A., Buckingham Shum, S., Quinto, I., Bachler, M. & Cannavacciuolo, L. (2011). Discourse-Centric Learning Analytics. ''Proc. 1st International Conference on Learning Analytics & Knowledge''. Feb. 27-Mar. 1, 2011, Banff. New York: ACM Press. Eprint: http://oro.open.ac.uk/25829

De Pedro Puente, X. (2007). New method using Wikis and forums to assess individual contributions. WikiSym '07. Available at http://www.wikisym.org/ws2007/proceedings.html

Derry, S.J. & Lajoie, S.P. (1993). A middle camp for (un)intelligent instructional computing: An introduction. In S.P. Lajoie & S.J. Derry (Eds.), ''Computers as cognitive tools''. Hillsdale, NJ: Lawrence Erlbaum Associates.

Dyckhoff, A. L., Zielke, D., Bültmann, M., Chatti, M. A. & Schroeder, U. (2012). Design and Implementation of a Learning Analytics Toolkit for Teachers. ''Educational Technology & Society'', 15(3), 58–76. http://www.ifets.info/journals/15_3/5.pdf

E-Framework for Education and Research, retrieved from http://www.e-framework.org/ (this is a dead project, but it remains inspiring)

Ferguson, R., Buckingham Shum, S. & Deakin Crick, R. (2011). EnquiryBlogger – Using widgets to support awareness and reflection in a PLE setting. In W. Reinhardt & T. D. Ullmann (Eds.), ''1st Workshop on Awareness and Reflection in Personal Learning Environments'', PLE 2011 Conference, UK. Eprint: http://oro.open.ac.uk/30598

Govaerts, S., Verbert, K., Klerkx, J. & Duval, E. (2010). Visualizing Activities for Self-reflection and Awareness. ''The 9th International Conference on Web-based Learning'', ICWL 2010, Springer, Lecture Notes in Computer Science. Available at https://lirias.kuleuven.be/handle/123456789/283362

Hapara Teacher Dashboard. Retrieved from http://hapara.com/

Helic, D., Krottmaier, H., Maurer, H. & Scerbakov, N. (2005). Enabling Project-Based Learning in WBT Systems. ''International Journal on E-Learning'', 4(4), 445-461. Norfolk, VA: AACE.

Mazza, R. & Dimitrova, V. (2007). CourseVis: A graphical student monitoring tool for supporting instructors in Web-based distance courses. ''International Journal of Human-Computer Studies'', 65(2), 125–139.

Pivotal tracker. Retrieved from http://www.pivotaltracker.com/


ROLE Project. Retrieved April 12, 2012 from http://www.role-project.eu/

Scardamalia, M., Bransford, J., Kozma, R. & Quellmalz, E. (2002). ''New assessments and environments for Knowledge building''. Springer. Draft available at http://ikit.org/fulltext/2010_NewATKB.pdf

Schneider, D.K., Benetos, K. & Ruchat, M. (2011). MediaWikis for research, teaching and learning. In T. Bastiaens & M. Ebner (Eds.), ''Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2011'' (pp. 2084-2093). Chesapeake, VA: AACE.

Schwaber, K. & Beedle, M. (2002). ''Agile software development with Scrum''. Prentice Hall. ISBN 0130676349.

Soller, A., Martinez, A., Jermann, P. & Muehlenbrock, M. (2005). From Mirroring to Guiding: A Review of State of the Art Technology for Supporting Collaborative Learning. ''International Journal of Artificial Intelligence in Education'', 15(4), 261-290.

Soller, A., Martinez, A., Jermann, P. & Muehlenbrock, M. (2004). From Mirroring to Guiding: A Review of State of the Art Technology for Supporting Collaborative Learning. ITS 2004 ''Workshop on Computational Models of Collaborative Learning''. Retrieved April 12, 2012 from http://www.cscl-research.com/Dr/ITS2004Workshop/proceedings.pdf

Synteta, P. & Schneider, D. (2002). EVA_pm: Towards Project-Based e-Learning. ''Proceedings of E-Learn 2002'', Montreal, 15-19 October 2002.


'''Acknowledgements:''' Thanks to Michele Notari for pushing us to investigate.

[[Category:Analytics]]

[[Category:Project-oriented instructional design models]]

Latest revision as of 17:22, 3 May 2014

Requirements for learning scenario and learning process analytics

A first version of this article was submitted to and accepted at EdMedia 2012. This wiki version includes pictures and will evolve over time.

The original conference paper is available on EditLib at http://www.editlib.org/p/40963 as Schneider, D., Class, B., Benetos, K. & Lange, M. (2012). Requirements for learning scenario and learning process analytics. In T. Amiel & B. Wilson (Eds.), Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2012 (pp. 1632-1641). Chesapeake, VA: AACE.

Authors

Daniel K. Schneider

- TECFA, Faculty of Psychology and Sciences of Education, University of Geneva, Switzerland

- Daniel.Schneider@unige.ch

Barbara Class

- Interpreting Department, Faculty of Translation and Interpreting

- University of Geneva, Switzerland

Kalliopi Benetos

- TECFA, Faculty of Psychology and Sciences of Education, University of Geneva, Switzerland

Marielle Lange

- Rich Internet Application Developer, New Zealand

Abstract:

This contribution attempts to define what kind of learning analytics both learners and teachers should have in various forms of project-oriented learning designs in order to enhance the learning and teaching process within learning scenarios.

Keywords:

learning analytics, constructivism, CSCL, project-based learning, problem-based learning, inquiry learning, writing-to-learn, and knowledge community learning.

Introduction

In this discussion paper, we define learning process analytics as a collection of methods that allow teachers and learners to understand what is going on in a learning scenario, i.e. what participants work(ed) on, how they interact(ed), what they produce(d), what tools they use(d), in which physical and virtual location, etc. Learning analytics is most often aimed at generating predictive models of general student behavior. So-called academic analytics even aims to improve the (educational) system itself. We are trying to solve a somewhat different problem. In this paper we focus on improving project-oriented, learner-centered designs, i.e. a family of educational designs that include some or all of knowledge-building, writing-to-learn, project-based learning, inquiry learning, problem-based learning and so forth.

We will first provide a short literature review of learning process analytics and related frameworks that can help improve the quality of educational scenarios. We will then describe a few project-oriented educational scenarios that are implemented in various programs at the University of Geneva. These examples illustrate the kind of learning scenarios we have in mind and help define the different types of analytics both learners and teachers need. Finally, we present a provisional list of analytics desiderata divided into “wanted tomorrow” and “nice to have in the future”.

A short overview of learning scenario and learning process analytics

From a technical point of view, “learning analytics is the measurement, collection, analysis and reporting of data about learners and their contexts, for purposes of understanding and optimizing learning and the environments in which it occurs” (1st International conference on learning analytics, 2011). We define learning scenario and learning process analytics as the measurement and collection of learner actions and learner productions, organized to provide feedback to learners, groups of learners and teachers during a teaching/learning situation. This information can be presented in various forms, e.g. a browsable analytics web site or a dashboard, and should engage participants in reflection with respect to their different goals, roles, tasks, productions, and so forth.

This type of analytics has a long tradition in educational technology research, in particular in the subfields of artificial intelligence and education (including intelligent tutoring systems) and in computer-supported collaborative learning (CSCL). We shall not discuss the former, since we are not interested in using computers in the role of a virtual teacher/expert. In a project-oriented setting, the learner is to provide the intelligence, not the computer. Computers are best used only as tools that assist the learner’s cognition. “Cognitive tools [...] are unintelligent tools, relying on the learner to provide the intelligence, not the computer. This means that planning, decision-making, and self-regulation are the responsibility of the learner, not the technology. Cognitive tools can serve as powerful catalysts for facilitating these higher order skills if they are used in ways that promote reflection, discussion, and collaborative problem-solving [...]” (Derry & Lajoie, 1993, p. 5).

Analytics in the CSCL and knowledge building tradition

In the field of CSCL, Soller, Martinez, Jermann and Muehlenbrock (2005) developed a collaboration management cycle framework that distinguishes between mirroring systems, which display basic actions to collaborators, metacognitive tools, which represent the state of interaction via a set of key indicators, and coaching systems, which offer advice based on an interpretation of those indicators. “The framework, or collaboration management cycle, is represented by a feedback loop, in which the metacognitive or behavioral change resulting from each cycle is evaluated in the cycle that follows. Such feedback loops can be organized in hierarchies to describe behavior at different levels of granularity (e.g. operations, actions, and activities). The collaboration management cycle is defined by the four following phases” (Soller et al., 2004:6).

- Phase 1: The data collection phase involves observing and recording the interaction of users. Data are logged and stored for later processing.

- Phase 2: Higher-level variables, termed indicators, are computed to represent the current state of interaction. For example, an agreement indicator might be derived by comparing the problem-solving actions of two or more students, or a symmetry indicator might result from a comparison of participation indicators.

- Phase 3: The current state of interaction can then be compared to a desired model of interaction, i.e. a set of indicator values that describe productive and unproductive interaction states. “For instance, we might want learners to be verbose (i.e. to attain a high value on a verbosity indicator), to interact frequently (i.e. maintain a high value on a reciprocity indicator), and participate equally (i.e. to minimize the value on an asymmetry indicator).” (idem, p. 7).

- Phase 4: Finally, the system might propose remedial actions if there are discrepancies between the current and the desired state of interaction.
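The four phases can be illustrated with a small Python sketch. It is not Soller et al.'s implementation: the event format, the two indicators (verbosity as mean words per message, asymmetry as the gap between the largest and smallest participation shares) and the thresholds in `DESIRED` are hypothetical choices made for illustration only.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Event:
    actor: str
    kind: str       # hypothetical event types: "message", "action", "login", ...
    words: int = 0  # number of words, only meaningful for messages


def collect(log):
    """Phase 1: keep the logged events that the indicators below use."""
    return [e for e in log if e.kind in ("message", "action")]


def indicators(events, members):
    """Phase 2: compute higher-level indicators from the raw events."""
    counts = Counter(e.actor for e in events)
    total = sum(counts[m] for m in members) or 1
    shares = [counts[m] / total for m in members] or [0.0]
    messages = [e for e in events if e.kind == "message"]
    verbosity = sum(e.words for e in messages) / len(messages) if messages else 0.0
    # Hypothetical asymmetry indicator: gap between the most and least active member.
    asymmetry = max(shares) - min(shares)
    return {"verbosity": verbosity, "asymmetry": asymmetry}


# Phase 3 model: (minimum, maximum) per indicator; None means "no bound".
# These thresholds are illustrative assumptions, not values from the literature.
DESIRED = {"verbosity": (10.0, None), "asymmetry": (None, 0.3)}


def diagnose(state, desired=DESIRED):
    """Phase 3: compare the current state with the desired model of interaction."""
    problems = []
    for name, (low, high) in desired.items():
        value = state[name]
        if low is not None and value < low:
            problems.append(f"{name} too low ({value:.2f} < {low})")
        if high is not None and value > high:
            problems.append(f"{name} too high ({value:.2f} > {high})")
    return problems


def advise(problems):
    """Phase 4: propose remedial actions; here just hints for a human reader."""
    return [f"Consider intervening: {p}" for p in problems]


if __name__ == "__main__":
    log = [Event("ana", "message", 25), Event("ana", "message", 30),
           Event("ana", "action"), Event("ben", "message", 4), Event("ben", "login")]
    state = indicators(collect(log), ["ana", "ben"])
    print(state)
    print(advise(diagnose(state)))
```

In this example Ana produces three of the four relevant events, so the asymmetry indicator (0.5) exceeds the assumed bound and a remedial hint is produced. Leaving the hint to a human corresponds to a metacognitive tool rather than a fully automatic coaching system.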

Soller et al. (2005) add a fifth phase that lies outside the cycle: “After exiting Phase 4, but before re-entering Phase 1 of the following collaboration management cycle, we pass through the evaluation phase. Here, we reconsider the question, ‘What is the final objective?’ and assess how well we have met our goals”. In other words, the behavior of the "system" is evaluated as a whole.

Another school of thought in CSCL concerns knowledge-building communities. “Sustaining knowledge-building communities online requires the creation of electronic environments that support both formal and informal learning, and capture significant tasks and activities that are central to the day-to-day work of the participants. These environments must provide supports for real world activities and learning, while providing the potential for something more. That something more is knowledge building, or the production and continual improvement of ideas of value to a community” (Scardamalia & Bereiter, 2003). This knowledge-building environment model relies on so-called Computer Supported Intentional Learning Environments (CSILE). Its commercial version, Knowledge Forum, is an “electronic group workspace designed to support the process of knowledge building, [i.e.] any number of individuals and groups can share information, launch collaborative investigations, and build networks of new ideas…together” (Knowledge Forum, 2012). The technology can be described as a hybrid of concept map, hypertext and forum systems that can display information from multiple vantage points and entry points. In other words, it offers no dedicated analytics, but its networked architecture and various display options allow users to understand “connections” between contents, between contents and users, and between users themselves.

While CSCL research has produced many interesting approaches and systems, these are rarely used in practice, since they require advanced knowledge of collaborative learning and mastery of the technology involved. In addition, orchestrating CSCL scenarios is very time-consuming.

Analytics for learning management systems

Most LMSs include some features for tracking student behavior. For a teacher or a student, this information can be useful for monitoring whether participation requirements are met. However, these tools are often fairly cumbersome, and one could argue that LMSs are not really designed for data mining, analysis and visualization. “If tracking data is provided in a VLE, it is often incomprehensible, poorly organized, and difficult to follow, because of its tabular format. As a result, only skilled and technically savvy users can utilize it (Mazza & Dimitrova, 2007). But even for them it might be too time consuming.” (Dyckhoff et al., 2012: 59).

However, various add-ons (free or commercial) exist for some popular systems, e.g. MOCLog for Moodle or Blackboard Analytics. In both cases, the analytics only show that students consume some resource or activity and how they perform on manually or automatically graded activities. In other words, these analytics are not designed to support active pedagogies; however, they can nicely enhance traditional e-learning if the user experience (usability and usefulness) is good. A good learning analytics (LA) system should typically include easy-to-use and informative teacher and student dashboards. Both teachers and learners can then reflect on visualized user participation and activity data.
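The step from a hard-to-read tabular tracking log to a simple per-student summary can be sketched as follows. The row format `(student, resource, action, grade)` and the action names `"view"` and `"graded"` are hypothetical; real exports differ between LMSs and add-ons such as MOCLog or Blackboard Analytics, whose data formats are not reproduced here.

```python
from collections import defaultdict
from statistics import mean


def dashboard(rows):
    """Aggregate tracking rows into one summary line per student.

    rows: iterable of (student, resource, action, grade) tuples, where
    action is "view" or "graded" and grade is None for views.
    """
    summary = defaultdict(lambda: {"views": 0, "resources": set(), "grades": []})
    for student, resource, action, grade in rows:
        entry = summary[student]
        if action == "view":
            entry["views"] += 1
            entry["resources"].add(resource)
        elif action == "graded" and grade is not None:
            entry["grades"].append(grade)
    return {
        student: {
            "views": e["views"],
            "distinct_resources": len(e["resources"]),
            "mean_grade": mean(e["grades"]) if e["grades"] else None,
        }
        for student, e in summary.items()
    }


if __name__ == "__main__":
    rows = [
        ("ana", "reading-1", "view", None),
        ("ana", "quiz-1", "view", None),
        ("ana", "quiz-1", "view", None),
        ("ana", "quiz-1", "graded", 8),
        ("ben", "reading-1", "view", None),
        ("ben", "quiz-1", "graded", 5),
        ("ben", "quiz-2", "graded", 7),
    ]
    for student, line in sorted(dashboard(rows).items()):
        print(student, line)
```

Such a summary only captures consumption and grades, which is exactly the limitation discussed above; a dashboard would visualize these figures for both teachers and learners rather than print them.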

The following figure (Dyckhoff et al., 2012) shows this idea. It summarizes the “Learning Analytics Toolkit, which enables teachers to explore and correlate learning object usage, user properties, user behavior, as well as assessment results based on graphical indicators” (Design and Implementation of a Learning Analytics Toolkit for Teachers, retrieved March 2014).

Educational workflow systems such as LAMS, or VLPE, an experimental system based on BPMN (Adesina, 2013), provide more and better analytics than traditional LMSs, since it is fairly easy to add data collection and visualization features to systems that are designed around structured human activities.

Analytics for street technology

Most courses that aim at deep learning, e.g. applicable and/or higher-order knowledge, are taught in small classes, and many teachers seem to use software that differs from that used in mainstream conceptions of e-learning. Typical environments are wikis, learning e-portfolios, content management systems, blogs, webtops, social networking sites and/or any combination of these. In addition, scenarios can include various special-purpose social software, such as bookmarking services, reference managers and feed aggregators. While such software is well suited for supporting a wide range of learning activities, it can become difficult for both learners and teachers to follow and understand “what is going on”.

Few systems seem to exist that enhance this kind of “streetware” popular in education. Ferguson, Buckingham Shum, and Crick (2011) developed Enquiry Blog Builder, an interesting set of WordPress plugins that enhance inquiry-learning scenarios. The MoodView plugin can produce a line graph plotting the mood of the student as their enquiry progresses. Students can consult the evolution of their mood over time or insert a new one by selecting an item from a drop-down list (from 'going great' to 'it's a disaster'). Changing a mood creates a new blog entry where the student may articulate his/her reason for the mood change. The EnquirySpiral and the EnquirySpider are both widgets that display the number of posts made in various blog categories. The spiral, a key metaphor in inquiry learning, covers the first nine categories and the spider the following seven. This implies that the sixteen categories must be chosen carefully in advance. The nine “spiral” categories concern the inquiry process, and the seven “spider” categories are based on the Effective Lifelong Learning Inventory diagram. The toolkit also includes a blog generator that creates a blog for each student and one for the teacher(s). The teacher blog then includes a dashboard showing the progress of the students assigned to that teacher.
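The data behind the two widgets amounts to per-category post counts split into two groups. The sketch below assumes, as described above, sixteen categories chosen in advance, the first nine feeding the spiral and the following seven the spider. The placeholder category names and the function names are hypothetical and do not reproduce the plugins' code.

```python
from collections import Counter

SPIRAL_SIZE = 9  # first nine categories: the inquiry process (spiral widget)
SPIDER_SIZE = 7  # following seven: Effective Lifelong Learning Inventory (spider widget)


def widget_counts(categories, post_categories):
    """Split per-category post counts into spiral and spider widget data.

    categories: ordered list of the sixteen category names chosen in advance.
    post_categories: one category name per categorised post; names outside
    the sixteen (e.g. "uncategorized") are ignored by both widgets.
    """
    if len(categories) != SPIRAL_SIZE + SPIDER_SIZE:
        raise ValueError("expected exactly 16 categories chosen in advance")
    counts = Counter(post_categories)
    spiral = {c: counts[c] for c in categories[:SPIRAL_SIZE]}
    spider = {c: counts[c] for c in categories[SPIRAL_SIZE:]}
    return spiral, spider


def teacher_overview(categories, blogs):
    """Hypothetical teacher dashboard: spiral and spider post totals per student blog."""
    overview = {}
    for student, post_categories in blogs.items():
        spiral, spider = widget_counts(categories, post_categories)
        overview[student] = (sum(spiral.values()), sum(spider.values()))
    return overview


if __name__ == "__main__":
    # Placeholder names; a real course would use meaningful category labels.
    categories = [f"spiral-{i}" for i in range(1, 10)] + [f"spider-{i}" for i in range(1, 8)]
    blogs = {"ana": ["spiral-1", "spiral-1", "spider-3"], "ben": ["uncategorized"]}
    print(teacher_overview(categories, blogs))
```

Because the split depends only on the position of a category in the list, the sketch also shows why the sixteen categories have to be fixed before students start blogging.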

Other systems have emerged; for example, the Hapara Teacher Dashboard provides a “view” over a class's Google Apps environment. It mainly automates many configuration tasks, but also provides a “bird's-eye view” and may in the future include more advanced analytics.

De Liddo et al. (2011) implement a structured deliberation/argument mapping design in the Cohere system. It “renders annotations on the web, or a discussion, as a network of rhetorical moves: users must reflect on, and make explicit, the nature of their contribution to a discussion”. Since the tool allows participants (1) to tag a post with a rhetorical role and (2) to link posts to these roles or to participants, the following learning analytics can be gained per learner and/or per group: learners’ attention, rhetorical attitude, learning topics distribution, and social interactions. These indicators are then used to create higher-level constructs such as learners’ rhetorical moves, distribution, and interactions.
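Two of these per-learner indicators, rhetorical attitude and topic distribution, essentially reduce to counting tags. The sketch assumes a hypothetical post format with an author, one rhetorical role and a list of topic tags; the role names in the example are invented, and the code is not Cohere's implementation.

```python
from collections import Counter, defaultdict


def learner_indicators(posts):
    """Per learner: share of each rhetorical role and count of each topic tag.

    posts: iterable of dicts with keys "author", "role" and "topics" (a list).
    """
    roles = defaultdict(Counter)
    topics = defaultdict(Counter)
    for post in posts:
        roles[post["author"]][post["role"]] += 1
        topics[post["author"]].update(post["topics"])
    result = {}
    for author, role_counts in roles.items():
        total = sum(role_counts.values())
        result[author] = {
            "rhetorical_attitude": {r: n / total for r, n in role_counts.items()},
            "topics": dict(topics[author]),
        }
    return result


if __name__ == "__main__":
    posts = [
        {"author": "ana", "role": "question", "topics": ["wikis"]},
        {"author": "ana", "role": "challenges", "topics": ["wikis", "assessment"]},
        {"author": "ben", "role": "supports", "topics": ["assessment"]},
    ]
    for author, values in learner_indicators(posts).items():
        print(author, values)
```

Social-interaction indicators would additionally require the links between posts, and higher-level constructs such as rhetorical moves would be built on top of counts like these.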

The popularity of Wikipedia initiated a whole field of “Wikipedia science” that attracted many researchers from the data mining and data visualization communities. However, the tools used in this research are mostly unsuitable for teachers, since they require fairly advanced technical skills. A few easier-to-use tools exist for specific wiki technologies. For example, for MediaWiki (the Wikipedia engine), StatMediaWiki (Rodríguez-Posada et al., 2011) can generate static XHTML pages including tables and graphics that show content evolution timelines, activity charts for users and pages, rankings, tag clouds, etc. We tested this system on our own MediaWikis described in Schneider et al. (2011) and found it very useful, despite some missing features, several of which the authors themselves acknowledge.
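Rankings and activity charts of the kind StatMediaWiki reports can be sketched in a few lines, assuming the revision history has already been extracted as `(page, user, timestamp)` tuples, for instance from a MediaWiki XML dump. The function below is a hypothetical illustration, not StatMediaWiki's code, and it ignores measures such as content size.

```python
from collections import Counter
from datetime import datetime


def wiki_stats(revisions):
    """Edit rankings per user and page, plus edits per month.

    revisions: iterable of (page, user, timestamp) tuples, with timestamps
    in ISO 8601 form such as "2011-03-01T10:00:00".
    """
    by_user = Counter()
    by_page = Counter()
    by_month = Counter()
    for page, user, timestamp in revisions:
        by_user[user] += 1
        by_page[page] += 1
        by_month[datetime.fromisoformat(timestamp).strftime("%Y-%m")] += 1
    return {
        "user_ranking": by_user.most_common(),
        "page_ranking": by_page.most_common(),
        "activity_by_month": dict(sorted(by_month.items())),
    }


if __name__ == "__main__":
    revisions = [
        ("Main_Page", "ana", "2011-03-01T10:00:00"),
        ("Main_Page", "ben", "2011-03-02T11:00:00"),
        ("Wiki_tips", "ana", "2011-04-05T09:30:00"),
    ]
    print(wiki_stats(revisions))
```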

Self-reporting strategies

One solution for coping with the lack of analytics and management tools is to rely on self-reporting. For example, at Curtin University, to encourage students to reflect on and assess their own achievement of learning in the e-portfolio environment, the iPortfolio system incorporates a self-rating tool based on Curtin's “graduate attributes”. The tool enables “the owner to collate evidence and reflections and assign themselves an overall star-rating based on Dreyfus and Dreyfus' Five-Stage Model of Adult Skill Acquisition (Dreyfus, 2004). The dynamic and aggregated results are available to the user: [..], the student can see a radar graph showing their self-rating in comparison with the aggregated ratings of their invited assessors (these could include peers, mentors, industry contacts, and so on).” (Oliver & Wheelan, 2010). Several other projects use this strategy; for example, de Pedro Puente (2007) asked students to tag wiki contributions from a list of contribution types, e.g. “New hypotheses”, “New information” or “help partner”. From these indicators, he then computed process indices that were combined with the grading of the final product.
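The comparison shown in the iPortfolio radar graph amounts to setting each self-rating next to an aggregate of the invited assessors' ratings. The sketch below assumes one star (1 to 5) per Dreyfus stage and uses invented attribute names; using the mean as the aggregate is our assumption, and the code is not Curtin's implementation.

```python
from statistics import mean

# Assumption: star n (1..5) corresponds to the n-th Dreyfus stage.
DREYFUS_STAGES = ["novice", "advanced beginner", "competent", "proficient", "expert"]


def stage(stars):
    """Name of the Dreyfus stage for a 1..5 star rating."""
    if not 1 <= stars <= len(DREYFUS_STAGES):
        raise ValueError("rating must be between 1 and 5 stars")
    return DREYFUS_STAGES[stars - 1]


def compare_ratings(self_ratings, assessor_ratings):
    """Per attribute: self-rating, mean assessor rating and the gap between them.

    self_ratings: {attribute: stars}
    assessor_ratings: list of {attribute: stars}, one dict per assessor;
    an assessor may leave some attributes unrated.
    """
    comparison = {}
    for attribute, own in self_ratings.items():
        others = [r[attribute] for r in assessor_ratings if attribute in r]
        average = mean(others) if others else None
        comparison[attribute] = {
            "self": own,
            "assessors": average,
            "gap": own - average if average is not None else None,
        }
    return comparison


if __name__ == "__main__":
    own = {"communication": 4, "teamwork": 2}
    assessors = [{"communication": 3, "teamwork": 3}, {"communication": 2}]
    print(compare_ratings(own, assessors))
    print(stage(own["communication"]))
```

A positive gap flags attributes where a student rates him/herself higher than the assessors do, which is a natural starting point for the kind of reflection the tool aims at.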

Another, more portable strategy was developed in the framework of the EU ROLE project. According to the ROLE Showcase platform and an introductory course on ROLE (retrieved 4/2013), ROLE stands for Responsive Open Learning Environments and seeks to put learners in a position to build their own technology-enhanced learning environments based on their needs and preferences.

A number of tools have been developed in the ROLE project, and they are available through the ROLE widget store. Govaerts et al. (2010) describe a Student Activity Monitor (SAM). Its main purpose is to increase self-reflection and awareness among students, and it can also provide learning resource recommendations. It allows teachers to examine student activities through different visualizations. Although SAM was developed in a project that focused on a kit for constructing personal learning environments, it can be used in other contexts where the learning process is largely driven by fairly autonomous learning activities. SAM is implemented as a Flex application and aggregates data from environments that include the CAM widget developed in the same project. In principle, widgets can be inserted into most environments. However, we have not yet tested this with our own environments.

Client-side JavaScript Widgets

The least technically intrusive portable analytics systems can be implemented through client-side JavaScript. A good example is SNAPP (Dawson, 2009). Implemented as a JavaScript bookmarklet, it can analyze some types of structured forum pages (Blackboard, Moodle and Desire to Learn) in the web browser and produce a network diagram. SNAPP is a nice tool to have when no other tools are available, and it is well suited to analyzing “deep” forum debates. Since it cannot handle forums that spread over several web pages, teachers have to adapt to SNAPP and organize forums (including Q/A forums) as single nested topics.
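A network diagram of this kind rests on a simple idea: each reply creates a tie between its author and the author of the post it answers. SNAPP itself runs as JavaScript in the browser and extracts this structure from the forum page; the Python sketch below only illustrates the analysis step, assumes the thread has already been parsed into hypothetical `(post_id, author, parent_id)` tuples, and is not SNAPP's code.

```python
from collections import Counter, defaultdict


def reply_network(posts):
    """Build weighted author-to-author ties from one forum thread.

    posts: list of (post_id, author, parent_id) tuples; parent_id is None
    for the opening post. Replies whose parent is missing (e.g. on another
    page) and self-replies produce no tie.
    """
    author_of = {post_id: author for post_id, author, _ in posts}
    ties = Counter()
    for _, author, parent_id in posts:
        if parent_id in author_of and author_of[parent_id] != author:
            ties[(author, author_of[parent_id])] += 1
    return ties


def degree(ties):
    """Number of distinct partners of each participant, ignoring direction."""
    partners = defaultdict(set)
    for replier, replied_to in ties:
        partners[replier].add(replied_to)
        partners[replied_to].add(replier)
    return {person: len(others) for person, others in partners.items()}


if __name__ == "__main__":
    thread = [(1, "ana", None), (2, "ben", 1), (3, "carl", 1), (4, "ana", 2), (5, "ana", 4)]
    ties = reply_network(thread)
    print(dict(ties))
    print(degree(ties))
```

Because ties are only found when the parent post is present in the same list, the sketch shares the limitation noted above: replies whose parent sits on another page are simply lost.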

Project management and Business Process modelling

An older but powerful approach is to rely on project management principles, i.e. to organise an educational design as a workflow process and use workflow tools to implement it.

Helic et al. (2005) describe a web-based training system that includes a virtual project management room. The system includes what they call support for data analysis, i.e. tools that allow teachers to help learners follow the right path and learners to better understand, present, and apply the results. Web-based agile project management tools such as Pivotal Tracker are also finding their way into education. Agile design methodologies like Scrum (Schwaber & Beedle, 2002) may also turn out to be closer to project-oriented learning (Pope-Ruark, in press) than traditional project methodology. Since project tools by definition include at least minimal analytics, they can be used off the shelf as orchestration and monitoring tools, although in both roles some adaptation and repurposing would be needed.

Adesina and Molloy (2012) present a Virtual Learning Process Environment (VLPE) based on the Business Process Management (BPM) conceptual framework, in which course designers use the BPMN notational design language. Since a BPM system implements workflows, it allows “behavioural learning processes of the cohort of students – right from the inception of the teaching and learning process – to be continuously monitored and analysed until completion” (p. 429).
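Continuous monitoring becomes straightforward once the learning process is an explicit sequence of activities. The sketch replaces a BPMN model with a plain ordered list of hypothetical activities and flags students who have not completed anything for more than a chosen number of days; the activity names, data format and seven-day threshold are assumptions, not features of VLPE.

```python
from datetime import date

# Hypothetical learning process; a BPMN model would also allow branches and parallel tasks.
PROCESS = ["plan", "collect data", "analyse", "write report"]


def progress(completed, today, stall_days=7):
    """Summarise each student's position in PROCESS.

    completed: {student: {activity: completion date}}
    A student counts as stalled if unfinished and nothing was completed
    in the last `stall_days` days.
    """
    report = {}
    for student, done in completed.items():
        next_step = next((a for a in PROCESS if a not in done), None)
        last = max(done.values()) if done else None
        stalled = next_step is not None and (
            last is None or (today - last).days > stall_days
        )
        report[student] = {
            "done": sum(a in done for a in PROCESS),
            "next": next_step,
            "stalled": stalled,
        }
    return report


if __name__ == "__main__":
    completed = {
        "ana": {"plan": date(2012, 3, 1), "collect data": date(2012, 3, 10)},
        "ben": {"plan": date(2012, 2, 20)},
        "carl": {a: date(2012, 3, 12) for a in PROCESS},
    }
    for student, line in progress(completed, today=date(2012, 3, 14)).items():
        print(student, line)
```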

Summary of strategies and principles

To conclude this discussion of example systems, we summarize a few strategies and principles:

- Systems that structure learning activities and contents in one way or another usually include some kind of analytics. In addition, structured environments by definition provide more structured information to the participants.

- Asking users is an easy strategy that can provide good information with respect to learners’ own perceptions of their learning, their contributions, and their interactions.

- Student productions are key indicators for learning.

- Project-management tools, i.e. tools that require learners to self-report in a structured way, include both structured data and at least some overall analytics.

- Modern web technology allows for the insertion of widgets into various online environments. Widgets can “talk” to other services and therefore can be used to create aggregating dashboards, e.g. for the teacher.

- Analytics are meant to be used by both learners and teachers.

- Analytics can provide various levels of assistance and insight: from simple mirroring tools, to metacognitive tools, to guiding systems (Soller et al., 2005).