Wiki metrics, rubrics and collaboration tools

Introduction

The main purpose of this article is to survey strategies, methods and tools for measuring what participants (and students in particular) do in a wiki. The surveyed literature also includes topics that are only indirectly related to this area but are of technical interest, e.g. the literature on trust metrics or wiki health.

In addition, we will look at strategies, tools and tactics that can enhance wiki participation and collaboration. For example, modern wikis such as MediaWiki make it possible to add simple collaboration visualizations or social networking features that could encourage spontaneous collaboration. Of course, in education, it is the pedagogical scenario (the instructional design model) that will have the most impact, and that will not be discussed here.

Finally, we will try to outline a few paths for further development, i.e. we will try to formulate some desiderata for educational wiki software with respect to organization, orchestration, monitoring and assessment.

It will take some time before this piece will achieve good draft status - 10:51, 4 November 2011 (CET).

Contribution and collaboration metrics

Wiki collaboration and contribution have interested researchers from various fields and for various reasons. A lot of research was triggered directly or indirectly by the success of the Wikimedia projects, which include Wikipedia, Wikibooks and Wikiversity: directly, because it is interesting how such large sites grow, and indirectly, because they form a huge database that researchers from many fields can play with. Examples of such research fields are visualization, data mining, language processing and social networking. Finally, the Wikimedia Foundation itself sponsors internal research projects. Below we shall look at a few projects that are of interest to education and related fields.

How could we measure contribution?

Arazy et al. (2009:172) developed “a wiki attribution algorithm that: (a) calculates the authors’ contributions to each wiki page, (b) cannot be easily manipulated, (c) estimates the extent of a contribution using a sentence as the basic unit of meaning, and (d) distinguishes between contributions that persist on the page from those that are deleted”. More specifically, the algorithm is based mainly on sentence ownership. Sentences in the current and the previous revision are compared, and a sentence is considered the same if it is very similar to one in the previous revision. From that principle, the authors compute (a) the total amount of work and (b) the total contributions that persist, i.e. they measure the relevancy of a sentence and therefore the quality of a user's contribution. In addition, the number of internal and external links and word-level changes are measured.
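The sentence-ownership idea can be illustrated with a minimal sketch. This is not the algorithm published by Arazy et al.: the sentence splitter, the use of `difflib` for similarity and the 0.8 threshold are all assumptions made for illustration, and the function names are hypothetical.

```python
import re
from collections import Counter
from difflib import SequenceMatcher

# Assumed cut-off: two sentences count as "the same" above this similarity.
SIMILARITY_THRESHOLD = 0.8


def split_sentences(text):
    """Naive sentence splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def find_owner(sentence, previous):
    """Return the owner of the most similar sentence in the previous revision,
    or None if no sentence is similar enough."""
    best_owner, best_ratio = None, 0.0
    for old_sentence, owner in previous:
        ratio = SequenceMatcher(None, sentence, old_sentence).ratio()
        if ratio > best_ratio:
            best_owner, best_ratio = owner, ratio
    return best_owner if best_ratio >= SIMILARITY_THRESHOLD else None


def attribute(revisions):
    """revisions: chronological list of (author, page_text) pairs.

    Returns two Counters: sentences added per author over the whole history,
    and sentences per owner that persist in the last revision."""
    added = Counter()
    owned = []  # (sentence, owner) pairs of the previous revision
    for author, text in revisions:
        current = []
        for sentence in split_sentences(text):
            owner = find_owner(sentence, owned)
            if owner is None:  # new sentence: the editing author owns it
                owner = author
                added[author] += 1
            current.append((sentence, owner))
        owned = current
    persisting = Counter(owner for _, owner in owned)
    return added, persisting


revisions = [
    ("ann", "Wikis are websites. Anyone can edit them."),
    ("bob", "Wikis are websites. Anyone can edit them. They keep a revision history."),
    ("ann", "Wikis are websites. They keep a full revision history."),
]
added, persisting = attribute(revisions)
print("added:", dict(added))
print("persisting:", dict(persisting))
```

In this toy history, a lightly reworded sentence stays with its original owner, while a deleted sentence still counts toward the total work of its author but no longer toward the persisting contribution.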

How could we measure collaboration?

Meishar-Tal and Tal-Elhasid (2008) describe the methodology developed at the Open University of Israel (OUI) to measure collaboration among students in wikis. They used three indicators: relative diversity (what proportion of the students in a group participate), interactivity and intensity.

- Relative diversity was calculated by the formula:

- Relative Diversity = Diversity / No. of potential editors

- Here, diversity is the number of students who actually edited the page. E.g. “If a group of five students is able to work on a page but only four students are active and contributing, the level of collaboration would be 0.8”

- Interactivity was (simply) measured by:

- Number of interactions among the participants, i.e. the number of edits

- Intensity is expressed by the following ratio:

- Intensity = Interactivity / Diversity
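The three indicators can be computed directly from a list of edits. The following is a minimal sketch, assuming that each edit is represented only by the name of its author; the function and field names are hypothetical and not taken from the OUI study.

```python
from dataclasses import dataclass


@dataclass
class CollaborationIndicators:
    diversity: int             # number of distinct students who edited
    relative_diversity: float  # diversity / number of potential editors
    interactivity: int         # number of edits
    intensity: float           # interactivity / diversity


def collaboration_indicators(edit_authors, potential_editors):
    """edit_authors: one author name per edit made to the page.
    potential_editors: number of students allowed to edit the page."""
    if potential_editors <= 0:
        raise ValueError("potential_editors must be positive")
    diversity = len(set(edit_authors))
    interactivity = len(edit_authors)
    # With no edits at all, intensity is reported as 0 (an assumption).
    intensity = interactivity / diversity if diversity else 0.0
    return CollaborationIndicators(
        diversity=diversity,
        relative_diversity=diversity / potential_editors,
        interactivity=interactivity,
        intensity=intensity,
    )


# Four of five students are active and make twelve edits in total.
edits = ["ann"] * 5 + ["bob"] * 4 + ["cho"] * 2 + ["dan"]
print(collaboration_indicators(edits, potential_editors=5))
```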

Quality

Dennis Wilkinson and Bernardo Huberman (2007a, 2007b) “examined all 50 million edits made to the 1.5 million English-language Wikipedia articles”. In their literature review, the authors identified several automatic quality metrics: number of edits and unique editors to an article (Lih, 2004), factual accuracy (Giles, 2005; Encyclopaedia Britannica, 2006; Desilets, 2006), credibility (Chesney, 2006), revert times (Viégas et al., 2004), and formality of language (Emigh et al., 2005). They argue that encyclopedia quality (Wikipedia) cannot be assessed using a single metric (e.g. Crawford, 2001), but that complex combinations of metrics (Stvilia, 2005) seem to depend on rather arbitrary parameter choices.

Wilkinson and Huberman found that “the high-quality articles are distinguished by a marked increase in number of edits, number of editors, and intensity of cooperative behavior, as compared to other articles of similar visibility and age” (Wilkinson and Huberman, 2007b:157). Greatly simplifying the mathematics of this research, the method can be described as follows. The overall dynamics of editing over a longer time (e.g. 240 weeks) is log-normal with a heavy tail, i.e. typical articles are edited about 100 times, very few are edited thousands of times, and few are edited only a few times. In other words, only a small number of articles accrete a disproportionately large number of edits. This distribution could then be compared to the list of 1211 articles (0.081%) selected as featured articles by the Wikipedia community according to criteria such as accuracy, neutrality, completeness, and style. However, the authors made some adjustments, e.g. "topic popularity" was controlled for by using Google PageRank. For all types of articles (moderately popular to very popular), there was a significant difference, i.e. all types of featured articles had a higher number of edits than non-featured articles in the same category. The same principle was then applied to the number of contributors (editors).

In this research, Wilkinson and Huberman demonstrated the correlation between number of edits, number of distinct editors, and article quality in Wikipedia. We could therefore conclude that in similar wikis both a high number of edits and a high number of distinct editors should be powerful indicators of quality. The edit count may have to be adapted for small wikis such as EduTechWiki, since student behavior can differ with respect to saving, i.e. one should probably look at blocks of edits instead. Some people (like the main author of this article) use an external editor and hit the save button at frequent intervals since the editing window is kept open. Others write the text in an external editor and then copy and paste a final section.
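One way to look at blocks of edits rather than raw saves is to merge a user's saves that follow each other closely in time. The sketch below is only an illustration: the 30-minute window is an arbitrary assumption, the function name is hypothetical, and edits by other users in between are ignored.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def count_edit_blocks(edits, max_gap=timedelta(minutes=30)):
    """edits: iterable of (user, timestamp) pairs for one page or one wiki.

    Consecutive saves by the same user that are at most max_gap apart
    are counted as a single block of edits."""
    by_user = defaultdict(list)
    for user, timestamp in edits:
        by_user[user].append(timestamp)

    blocks = {}
    for user, stamps in by_user.items():
        stamps.sort()
        count = 1
        for earlier, later in zip(stamps, stamps[1:]):
            if later - earlier > max_gap:  # long pause: a new block starts
                count += 1
        blocks[user] = count
    return blocks


edits = [
    ("ann", datetime(2012, 1, 9, 10, 0)),
    ("ann", datetime(2012, 1, 9, 10, 5)),
    ("bob", datetime(2012, 1, 9, 10, 10)),
    ("ann", datetime(2012, 1, 9, 10, 20)),
    ("ann", datetime(2012, 1, 9, 14, 0)),
]
print(count_edit_blocks(edits))
```

With this kind of grouping, a frequent saver and a copy-and-paste author who did the same amount of work end up with comparable counts.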

Cooperation was also studied in this study of 1.5 million articles, and it had to be operationalized by a simple indicator, i.e. the number of postings to the talk page. Again, the study demonstrated a strong correlation between the number of comments posted to a talk page and the quality of the corresponding article. “It is worth noting that the difference between the featured and nonfeatured populations is more distinct in this figure than the corresponding plots for edits and distinct editors, suggesting that cooperation could be a more important indicator of article quality than raw edit counts” (ibid, p. 161). It was also suggested that the number of edits is correlated with cooperation, since an “editor is very unlikely to engage in cooperative authoring without making at least several edits.”

Ask the user, a solution for teachers

Xavier de Pedro Puente (2007) asked how to measure student work within an experiential learning scenario that is composed of the following stages: (1) "Concrete Experience", (2) "Reflective Observation", (3) "Abstract Conceptualization", and (4) "Feedback or Active Experimentation". Students were requested to choose explicitly from a list of contribution types:

| Importance (*** = important) | Contribution type | Description |
|---|---|---|
| | Others (report) | Other contribution type not listed in the menu at present (report which one to teachers) |
| * | Organizational aspects | Proposals and other questions related to the organization of the work team, scheduling,.... |
| * | Improvements in markup | Improvements in markup, spelling, etc. (bear in mind that final document quality for printing (nicer tables, paginated table of contents, page markup...) will be performed at the end) |
| * | Support requests | Simple questions, help requests, etc. without too much making of previous information |
| ** | Help partners | Help group or course mates who asked questions, requested support, formulated doubts, etc. (group or course forum) |
| ** | New information | New information has been added to text or discussion |
| *** | New hypotheses | New hypothesis has been prepared from preexisting information, and possibly, some new information (if so, mark option 'New information' also) |
| *** | Elaborated questions and new routes to advance | Elaborated questions and new ways to move forward in the work which were not taken into account previously (not just simple questions or elementary requests of support) |
| *** | Synthesis / making of information | Synthesize or refine speech with preexisting information |

This was implemented as a pull-down menu in TikiWiki.

Knowing both the type and the size of contributions provides an initial method of valuing each student's contribution, which the author labelled process (P). In addition, a final submitted text in word processing format, labelled final product (FP), was also graded. Both types of indicators are then used in the following grading rubric.

| Criteria | Estimation method | % of grade |
|---|---|---|
| Teamwork | (P): Sum of sizes of all contribution types | 15 |
| Synthesis & clarity of information | (P): Statement (corrected) of students, and sum of sizes of specific contribution type (*** Synthesis / making of information) | 30 |
| Quantity & quality of contributed information | (a) (P): Statement (corrected) of students and sum of sizes of related contribution types (** New information, *** New hypotheses and *** Synthesis / making of information) (b) (FP): Revision by teachers of individual attribution statements of each section in the final printed document | 40 |
| Formal quality of work | (a) (P): Statement of the students and specific contribution size and type (* Improvements of presentation) (b) (FP): Arbitrary scoring by teachers of the last work by the editors-in-chief on the final document prior to printing | 15 |

P indicators can be extracted from the database since “information detailing each student's contribution (type/s and size), was recorded in the Tiki action log, and types could be re-associated by the teacher, if necessary” (p 89). We don't know if the extensions are available.
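The grading rubric above amounts to a weighted sum of four criteria. The following minimal sketch only illustrates that arithmetic: it assumes that each criterion has already been scored between 0 and 1 by the teacher, since the source does not say how contribution sizes are converted into scores, and the dictionary keys and function name are hypothetical.

```python
# Weights (% of grade) taken from the rubric; the key names are made up here.
RUBRIC_WEIGHTS = {
    "teamwork": 15,
    "synthesis_clarity": 30,
    "information": 40,
    "formal_quality": 15,
}


def final_grade(scores):
    """scores: dict mapping each rubric criterion to a score in [0, 1].

    Returns the weighted grade on a 0-100 scale."""
    missing = set(RUBRIC_WEIGHTS) - set(scores)
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    for criterion in RUBRIC_WEIGHTS:
        if not 0 <= scores[criterion] <= 1:
            raise ValueError(f"score for {criterion} must be between 0 and 1")
    return sum(RUBRIC_WEIGHTS[c] * scores[c] for c in RUBRIC_WEIGHTS)


student = {
    "teamwork": 1.0,
    "synthesis_clarity": 0.5,
    "information": 0.75,
    "formal_quality": 1.0,
}
print(final_grade(student))
```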

Use extra collaboration tools

A wiki may implement rating systems, social networking and project management. In the MediaWiki realm, some extensions can help with that (see below).

Wiki health

Camille Roth (2007) started investigating the sustainability of wikis by looking at factors like distinct policies, norms, user incentives, technical and structural features, and by examining the demographics of a portion of the wikisphere. Firstly, he found an intertwining of population and content dynamics and also identified various stages of growth. He found that “some factors underlying population growth are directly linked to content characteristics.” (Roth, 2007:123). Content characteristics are factored into content stabilization, i.e. mechanisms for regulation and arbitration, and quality evaluation, e.g. a system to rate articles.

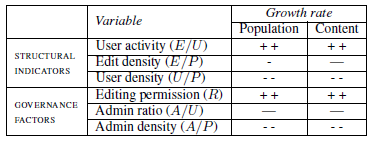

Roth et al (2008), in further research, identified a simple list of indicators that make it possible to measure the viability of a wiki as a whole.

- rank - Daily rank of the wiki in terms of content growth

- id - Internal identifier of the wiki

- name - Full name of the wiki

- total - Total number of pages

- good - Number of real pages after discarding system pages

- edits - Number of edits

- views - Total number of views

- admins - Number of users with administrator privileges

- users - Total number of users

- images - Number of images uploaded to the wiki

- ratio - Ratio of good pages over total

- type - Wiki engine

- url - wiki root url

A study of over 360 wikis that had an initial population between 400 and 20000 users showed that both structural and governance factors have an impact on the growth rate. (to be completed ....)

Taraborelli et al (2009) then proposed a WikiTracer system that would make it possible to measure the performance and growth of wiki-based communities with a standard plug-in system. It introduces over twenty indicators grouped into several categories: wiki identification, population indicators, content indicators, governance indicators, access control and system information.

Social Text: Wiki Analytics asks the question whether there are “algorithmic ways of determining the health of a Wiki”. The following categories are suggested:

- Page names - The better the names, the more likely people will link to those pages, both intentionally and accidentally. Goodness can be measured by "smaller is better", i.e. number of characters, words/tokens and number of non-alphabetic characters.

- Link (Graph Analysis) - If no pages are linked to anything else, then every page is an island of one, and you are probably not using the Wiki in a useful way. This is measured by number of "single connections" (blocks), patterns of interconnectedness, longest diameter (path to reach another page) and number of incoming/outgoing links.

- Page Content Analysis. Simple metrics would include page size, number of sections and word clouds.

- Time Analysis - how content evolves -- how it is refactored (or not), how conflict is resolved, and in general, what the patterns of interaction look like

- Tags (categories), i.e. another kind of incoming/outgoing link

- Usage patterns. Measured with edits/accesses, how many times orphan pages are accessed and edited, most wanted pages and number of editors.
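Some of the link-related indicators in the list above can be computed from a simple page-to-links mapping. The following is a minimal sketch, assuming the link structure has already been extracted into a dictionary; the function name, the returned keys and the reading of "longest diameter" as the longest shortest path along directed links are assumptions for illustration.

```python
from collections import deque


def link_metrics(links):
    """links: dict mapping each page name to the set of pages it links to."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)

    # Outgoing links per page, ignoring self-links.
    outgoing = {page: set(links.get(page, ())) - {page} for page in pages}
    incoming = {page: 0 for page in pages}
    for targets in outgoing.values():
        for target in targets:
            incoming[target] += 1

    # "Islands of one": pages with neither incoming nor outgoing links.
    isolated = {p for p in pages if not outgoing[p] and incoming[p] == 0}
    # Orphans: pages that no other page links to.
    orphans = {p for p in pages if incoming[p] == 0}

    # Longest shortest path, found by a breadth-first search from every page.
    longest = 0
    for start in pages:
        distance = {start: 0}
        queue = deque([start])
        while queue:
            page = queue.popleft()
            for target in outgoing[page]:
                if target not in distance:
                    distance[target] = distance[page] + 1
                    queue.append(target)
        longest = max(longest, max(distance.values()))

    return {
        "isolated": isolated,
        "orphans": orphans,
        "incoming": incoming,
        "outgoing": {page: len(targets) for page, targets in outgoing.items()},
        "longest_path": longest,
    }


wiki = {"Main": {"A", "B"}, "A": {"C"}, "B": set(), "C": set(), "Island": set()}
print(link_metrics(wiki))
```

The breadth-first search from every page is fine for a small educational wiki but would be slow for a very large one.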

See also Wiki metrics that provides more operational definitions of some indicators.

EduTechWiki does fairly well with respect to all of these, except the usage pattern ....

Revision history

All wikis by definition include a revision history. This history is of interest to authors (e.g. learners), researchers and administrators (e.g. teachers). However, revision history is somewhat "difficult" to read and various interfaces have been suggested.

Mikalai Sabel (2007) introduced version trees, a “mechanism to arrange versions of wiki page history into a weighted tree structure that reflects the actual page evolution”. The author believes “that this mechanism will be of great use for emerging wiki reputation, trust and reliability algorithms (Sabel et al., 2005, Zeng et al., 2006). Secondly, adoption coefficients themselves and their visualization is a powerful tool for wiki analysis, complementary to HistoryFlow (Viégas et al, 2004). HistoryFlow focuses on the detailed evolution of text fragments, while adoption coefficients and version trees provide general view of content evolution.” (Sabel, 2007:125).

Similarity between page versions is characterized numerically by adoption coefficients that measure how much content of version i is preserved in version j. “The scale for adoption coefficient is [0, 1], with 0 corresponding to independent versions, and 1 meaning that j is a copy of i, with no differences” (p. 126). The implemented algorithm works roughly in the following way:

- Text of versions is segmented into blocks, roughly representing sentences.

- From two "simplified" wiki page versions we compute N insertions and N deletions

- The "normalized" adoption coefficient is then found as:

- a = 1 - (N insertions + N deletions) / (N total of version j + N deletions).
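The steps above can be sketched as follows. This is a minimal illustration and not Sabel's implementation: the sentence splitting, the lower-casing used as "simplification" and the use of `difflib` to count inserted and deleted blocks are assumptions, and the function names are hypothetical.

```python
import re
from difflib import SequenceMatcher


def to_blocks(text):
    """Segment a page version into simplified, sentence-like blocks."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    # Simplify each block: lower-case it and collapse whitespace.
    return [" ".join(part.lower().split()) for part in parts if part]


def adoption_coefficient(version_i, version_j):
    """How much of version i is preserved in version j, on a [0, 1] scale."""
    blocks_i, blocks_j = to_blocks(version_i), to_blocks(version_j)
    insertions = deletions = 0
    matcher = SequenceMatcher(None, blocks_i, blocks_j, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("replace", "delete"):
            deletions += i2 - i1   # blocks of i that are missing in j
        if tag in ("replace", "insert"):
            insertions += j2 - j1  # blocks of j that are not in i
    denominator = len(blocks_j) + deletions
    if denominator == 0:  # both versions are empty: treat j as a copy of i
        return 1.0
    return 1 - (insertions + deletions) / denominator


print(adoption_coefficient("Alpha. Beta. Gamma.", "Alpha. Beta. Gamma."))
print(adoption_coefficient("Alpha. Beta.", "Alpha. Gamma."))
print(adoption_coefficient("Alpha. Beta.", "Gamma. Delta."))
```

Note that with this block-level diff, a sentence that is moved within the page may be counted as one deletion plus one insertion.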

Wiki design

Built-in wiki collaboration and quality tools

“Based on a classification made Carley (2004), Wiki-specific networks can be arranged in four categories (Figure 1): social perspective (who knows who), knowledge perspective (who knows what), information perspective (what refers to what), and temporal perspective (what was done before). Relationships in one network usually imply relationships in another” (Müller and Meuthrath, 2007).

Some wikis include a reviewing process that allows authors to write drafts that are only published once they have been reviewed. A typical example is found in Wikimedia's Wikibooks. This is implemented with the Flagged Revisions extension.

Policies and guidelines

Wikipatterns.com, a toolbox of patterns & anti-patterns, lists a large variety of "people and anti-people patterns" and "adoption and anti-adoption patterns".

Quality in a wiki can be determined by user evaluation (including self-evaluation). In this wiki, we use simple templates like "stub" and "incomplete" to convey self-assessed quality statements to the reader.

“Wikipedia has developed several user-driven approaches for evaluating the articles. High quality articles can be marked as “Good Articles” or “Featured Articles” whereas poor quality articles can be marked as “Articles for Deletion”” (Wöhner and Peters, 2009). Features and processes are documented in Wikipedia's Good article reassessment and also Good article criteria

Software

Mediawiki extensions

- The Collaboration diagram extension using the Graphviz extension dynamically creates graphs that show user contributions for an article, a list of articles or a category of articles. (Click on "authors" in this wiki to see how it works).

- W4G Rating Bar. Lets users vote on pages, lists top pages (globally or by category), lists latest votes, etc.

- Extension:TODOListProgressBar aimed at making the process of tracking the progress of a TODO list (or other list) easier. (last updated 6/2010). This extension requires (light) XML editing skills from users.

- Semantic Project Management is a Semantic MediaWiki extension that enables the user to display and export project structures. I.e. it helps collaboration (as opposed to measuring it). See this Gantt chart demo at kit.edu.

- SocialProfile Incorporates a basic social profile into a MediaWiki, specifically Avatars, Friending, Foeing, User Board, Board Blast, basic Profile Information, User Levels, Awards and Gifts.

- Semantic Social Profile represents social information of Social Profile extension as semantic annotations that are stored on a user’s userpage

- See also: Social tools and Category:Social tools

Using the MediaWiki API

Any (not too old) MediaWiki has an API enabled by default that allows programs to interact with it. The API supports many output formats, including JSON, WDDX, XML, YAML and PHP's native serialization format.
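As an illustration, the revision history of a page can be requested through the API and then summarized per user. The sketch below uses the standard `action=query` / `prop=revisions` parameters with JSON output; the wiki address `https://wiki.example.org/api.php` is a placeholder, the helper names are hypothetical, and the parsing assumes the default JSON layout in which pages are keyed by page id.

```python
import json
from collections import Counter
from urllib.parse import urlencode
from urllib.request import urlopen


def build_revisions_url(api_url, title, limit=50):
    """Build a query URL asking for the latest revisions of one page."""
    params = {
        "action": "query",
        "prop": "revisions",
        "titles": title,
        "rvprop": "user|timestamp|size",
        "rvlimit": limit,
        "format": "json",
    }
    return api_url + "?" + urlencode(params)


def fetch_json(url):
    """Download and decode an API response (needs network access)."""
    with urlopen(url) as response:
        return json.load(response)


def edits_per_user(response):
    """Count revisions per user in a decoded prop=revisions response."""
    counts = Counter()
    for page in response.get("query", {}).get("pages", {}).values():
        for revision in page.get("revisions", []):
            # The user name can be absent when it has been hidden.
            counts[revision.get("user", "(hidden)")] += 1
    return counts


# Canned response in the default JSON layout, so the example runs offline.
sample = {
    "query": {
        "pages": {
            "42": {
                "pageid": 42,
                "title": "Main Page",
                "revisions": [
                    {"user": "Ann", "timestamp": "2012-01-09T10:00:00Z", "size": 1200},
                    {"user": "Bob", "timestamp": "2012-01-08T09:00:00Z", "size": 1100},
                    {"user": "Ann", "timestamp": "2012-01-07T08:00:00Z", "size": 900},
                ],
            }
        }
    }
}
print(build_revisions_url("https://wiki.example.org/api.php", "Main Page", limit=10))
print(edits_per_user(sample))
```

Against a real wiki, one would call `edits_per_user(fetch_json(build_revisions_url(...)))` with the address of that wiki's `api.php`; longer histories need several requests using the continuation values returned by the API.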

Wikipedia research tools list

See Research Tools: Statistics, Visualization, etc. at meta.wikimedia.org

- StatMediaWiki is a project that creates tools to collect and aggregate information available in a MediaWiki installation. In StatMediaWiki Classic, results are static HTML pages including tables and graphics that can help to analyze the wiki status and development. StatMediaWiki Interactive (Beta) is an interactive application with several menus, which generate analysis, graphs and tables according to user instructions.

External widget kits

Michele Notari suggests using external widgets for monitoring, evaluating and scaffolding. A widget set could include:

- Rating of pages or page elements

- Request feedback

- Some kind of work package management (e.g. simple progress visualization)

- A console that could pull data from the other widgets.

In addition, widgets ought to be able to work with groups. Since double authentication is not necessarily practical, one could also imagine using various APIs for authentication, group making and such.

Many wikis already allow use of widgets. E.g. for Mediawikis, one could use

- Widgets

- SecureWidgets (not sure that this is still recommended, see the next item)

- the more restrictive(?) Gadgets extension. See also Extension:Gadgets/Scripts and MediaWiki:Gadgets-definition (Example gadgets definition page).

Simple existing widgets

- rating widgets

External analysis software

Below we only list software found in the wiki-related literature. See also Social network analysis.

- StatMediaWiki collects and aggregates information available in a MediaWiki installation. This tool works well with small MediaWikis (tested with 17.x in Jan 2012), but it can take days (or possibly weeks) to analyse a large wiki. Also, if you use extra namespaces, a minor code change is required in the Python program.

- BCFinder is free open-source software for detecting communities in bipartite networks. The program is written in Java (platform independent).

- SoniVis has the mission to create, as a community, a leading network analysis and network mining software that will run on all major platforms. As of Jan 2012, this project seems to be dead, although the software is still available.

- SoniVis can interact with a MediaWiki database

- SONIVIS:Wiki

- Installation manual and User manual

- Cognitive Computation Group (University of Illinois) created a series of natural language processing tools. Used by Arazy et al. (2009).

- Wikitracer is a web service providing platform-independent analytics and comparative growth statistics for wikis.

- This seems to be a stalled project, since a public beta is not yet available (nov 2011)

- WT Plugin Data Format

- See Roth et al. and Taraborelli et al.

- Tinaweb A web application to explore online or off line bipartite graphs.

- Tinasoft desktop A desktop application to text-mine corpora, build maps and explore the resulting networks.

General purpose text analytics tools

- See Editing Content analysis (and more specialized entries like latent semantic analysis and indexing)

Links

Conferences, organizations and journals

- Conferences

- http://www.wikisym.org/

- Wikisym 2009 proceedings

- 2008 (not available ?)

- Wikisym 2007 proceedings

- Organizations / Groups

- http://altmetrics.org/ (an organization that tries to promote alternative citation metrics)

- WikiMedia Research. Centralized research conducted about Wikimedia.

- Research:Projects A list of projects.

- Wikipedia:Academic studies of Wikipedia

- Wikipedia:Wikipedistik/Soziologie. This German Wikipedia article summarizes some sociological research about the German Wikipedia.

- Research projects

- http://qlectives.eu/ A FP 7 EU project, that aims to combine social networks, collaborative content production and peer-to-peer systems, in order to form Quality Collectives.

- http://tina.csregistry.org/ Tools for INteractive Assessement of Projects Portfolio and Visualization of Scientific Landscapes

- http://www.patres-project.eu/ A FP 6 EU project about pattern resilience.

Policies and strategies

Metrics

- Social Text: Wiki Analytics

- Social Text: Wiki Metrics

- Wikipedia statistics

- Wiki Science (A wikiversity book).

- Wiki Creativity Index

Other good resources

- snurblog run by Axel Bruns (Queensland University of Technology) includes some postings/news about wikis (among other things).

Bibliography

Collaboration and productivity metrics

- Almeida, R.; B. Mozafari, and J. Cho. On the evolution of Wikipedia. In Proceedings of the International Conference on Weblogs and Social Media (ICWSM '07), Boulder, March 2007.

- Arazy, Ofer and Eleni Stroulia, A Utility for Estimating the Relative Contributions of Wiki Authors, Proceedings of the Third International ICWSM Conference (2009). PDF

- “we introduce an algorithm for assessing the contributions of wiki authors that is based on the notion of sentence ownership. The results of an empirical evaluation comparing the algorithm’s output to manual evaluations reveal the type of contributions captured by our algorithm.”

- Buriol, L.S., Castillo, C., Donato, D., Leonardi, S., and Millozzi, S. (2006): Temporal Analysis of the Wikigraph. To appear in Proceedings of the Web Intelligence Conference (WI), Hong Kong 2006. Published by IEEE CS Press Abstract, PDF

- K. M. Carley: “Dynamic Network Analysis”; In: R. Breiger, K. Carley, P. Pattison (eds.): “Dynamic Social Network Modeling and Analysis: Workshop Summary and Papers” National Academy Press, (2004), 133-145.

- Ding, X., Danis, C., Erickson, T., & Kellogg, W. A. (2007). Visualizing an enterprise wiki. In Proceedings of ACM CHI '07, 2189-2194, San Jose.

- Ehmann K., Large A., and Beheshti J., 2008, Collaboration in context, First Monday, 13:10, 6 October 2008.

- Emigh. W and S. Herring (2005). Collaborative authoring on the Web. In Proc. HICSS, 2005.

- Gabrilovich, Evgeniy; Markovitch, Shaul (2007): Computing Semantic Relatedness using Wikipedia-based Explicit Semantic Analysis. Proceedings of The 20th International Joint Conference on Artificial Intelligence (IJCAI), Hyderabad, India, January 2007. PDF reprint

- Joachim Kimmerle, Johannes Moskaliuk, and Ulrike Cress, Understanding Learning - the Wiki Way, Proceedings of the 5th International Symposium on Wikis and Open Collaboration (WikiSym '09). PDF

- Hoisl, B., Aigner, W., & Miksch, S. (2006). Social rewarding in wiki systems - motivating the community. Lecture Notes in Computer Science , 4564, 362-371.

- Jesus, Rut; Martin Schwartz and Sune Lehmann, Bipartite Networks of Wikipedia's Articles and Authors: a Meso-level Approach, Proceedings of the 5th International Symposium on Wikis and Open Collaboration (WikiSym '09). PDF

- “we use the articles in the categories (to depth three) of Physics and Philosophy and extract and focus on significant editors (at least 7 or 10 edits per each article). We construct a bipartite network, and from it, overlapping cliques of densely connected articles and editors. We cluster these densely connected cliques into larger modules to study examples of larger groups that display how volunteer editors flock around articles driven by interest, real-world controversies, or the result of coordination in WikiProjects.”

- Jesus, Rut (2010). Cooperation and Cognition in Wikipedia Articles, A data-driven, philosophical and exploratory study, PhD thesis, Faculty Of Science University Of Copenhagen, PDF

- E.g. read pages 61ff. for the methodology used

- Harrer, Andreas; Johannes Moskaliuk, Joachim Kimmerle and Ulrike Cress(2008): Visualizing Wiki-Supported Knowledge Building: Co-Evolution of Individual and Collective Knowledge. Proceedings of the International Symposium on Wikis 2008 (Wikisym). New York, NY: ACM Press. PDF

- Halatchliyski, I., Moskaliuk, J., Kimmerle, J., & Cress, U. (2010). Who integrates the networks of knowledge in Wikipedia? Proceedings of the 6th International Symposium on Wikis and Open Collaboration. New York: ACM Press. doi 10.1145/1832772.1832774.

- Halatchliyski, I., & Cress, U. (2012). Soziale Netzwerkanalyse der Wissenskonstruktion in Wikipedia. In M. Hennig & C. Stegbauer (Eds.), Probleme der Integration von Theorie und Methode in der Netzwerkforschung (pp. 159-174). Wiesbaden: VS Springer.

- Lehmann, S., M. Schwartz, and L. K. Hansen, “Biclique communities,” Physical Review E (2008), arxiv.org.

- “We present a novel method for detecting communities in bipartite networks. Based on an extension of the k-clique community detection algorithm, we demonstrate how modular structure in bipartite networks presents itself as overlapping bicliques.”

- Moskaliuk Johannes, Joachim Kimmerle and Ulrike Cress (2008): Learning and Knowledge Building with Wikis: The Impact of Incongruity between People’s Knowledge and a Wiki’s Information. In G. Kanselaar, V. Jonker, P.A. Kirschner, & F.J. Prins (Eds.), International Perspectives in the Learning Sciences: Cre8ing a learning world. Proceedings of the International Conference of the Learning Sciences 2008, vol. 2. (pp. 99-106). Utrecht, The Netherlands: International Society of the Learning Sciences, Inc. PDF reprint

- Meishar-Tal, H. & Tal-Elhasid, E. (2008) Measuring collaboration in educational wikis - a methodological discussion, International Journal of Emerging Technologies in Learning, http://dx.doi.org/10.3991/ijet.v3i1.750

- Müller Claudia and Benedikt Meuthrath, Analyzing Wiki-based Networks to Improve Knowledge Processes in Organizations (2007). Proceedings of I-KNOW ’07 Graz, Austria, September 5-7, 2007. PDF

- “Four perspectives on Wiki networks are introduced to investigate all dynamic processes and their interrelationships in a Wiki information space. The Social Network Analysis (SNA) is used to uncover existing structures and temporal changes. [...] The collaboration network shows the nature of cooperation in a Wiki and reveals special roles, like the Wiki-Champion.”

- Ortega, Felipe; Gonzalez-Barahona, Jesus M.; Robles, Gregorio (2007): The Top Ten Wikipedias: A quantitative analysis using WikiXRay. ICSOFT 2007 PDF

- de Pedro Puente, Xavier, (2007) New method using Wikis and forums to assess individual contributions, WikiSym '07, PDF

- Pfeil U., Zaphiris P., and Ang C.S., 2008, Cultural Differences in Collaborative Authoring of Wikipedia, J. of Computer-Mediated Communication, 12:1, pp. 88-113.

- Rodríguez-Posada, Emilio J.; Juan Manuel Dodero, Manuel Palomo-Duarte, Inmaculada Medina-Bulo (2011). Learning-Oriented Assessment of Wiki Contributions: How to Assess Wiki Contributions in a Higher Education Learning Setting. Proceedings of CSEDU2011, 3rd International Conference on Computer Supported Education. Noordwijkerhout, The Netherlands. 2011. PDF Reprint

- See StatMediaWiki

- Roth, C. (2007) Viable wikis - Struggle for life in the wikisphere. Proceedings of the 2007 international symposium on Wikis, Montréal, Oct 2007 http://doi.acm.org/10.1145/1296951.1296964 - PDF at WikiSym

- Roth, C., Taraborelli, D., Gilbert, N. (2008) Measuring wiki viability. An empirical assessment of the social dynamics of a large sample of wikis. Proceedings of the 2008 international symposium on Wikis, Porto, September 2008. (or Proceedings of the 4th International Symposium on Wikis - WikiSym 2008, New York, NY: ACM Press). PDF Draft Preprint - PDF - PDF (at Wikitracer).

- Roth Camille; Jean-Philippe Cointet, Social and semantic coevolution in knowledge networks, Social Networks, Volume 32, Issue 1, January 2010, Pages 16-29, ISSN 0378-8733, 10.1016/j.socnet.2009.04.005. (http://www.sciencedirect.com/science/article/pii/S0378873309000215)

- Sinclair, Philip Andrew. Network centralization with the Gil Schmidt power centrality index, Social Networks, Volume 31, Issue 3, July 2009, Pages 214-219, ISSN 0378-8733, 10.1016/j.socnet.2009.04.004. (http://www.sciencedirect.com/science/article/pii/S0378873309000203)

- Taraborelli, D,; Roth, C and N. Gilbert (2009). Measuring wiki viability (II). Towards a standard framework for tracking content-based online communities. PDF

- Sabel, Mikalai. (2007). Structuring wiki revision history. Proceedings of the 2007 international symposium on wikis (pp. 125-130). Montréal: ACM. PDF at WikiSym.

- Viégas, F., Wattenberg, M. and Dave, K. 2004. Studying cooperation and conflict between authors with history flow visualizations. In Proceedings of the SIGCHI Conf. on Human Factors in Computing Systems, 575–582, (April, 2004, Vienna, Austria), New York, NY, USA, 2004. ACM Press.

- Voss J. 2005. Measuring Wikipedia, In Proceedings International Conference of the International Society for Scientometrics and Informetrics ISSI : 10th, Stockholm (Sweden July 24-28, 2005). http://eprints.rclis.org/archive/00003610/

- Wasserman, S; K. Faust: “Social network analysis: methods and applications“ Cambridge Univ. Press, Cambridge (1997)

- Wilkinson, D.M. and B. A. Huberman (2007a). Assessing the value of cooperation in Wikipedia. First Monday, 12(4), 2007.

- Wilkinson, D.M. and B. A. Huberman. (2007b). Cooperation and Quality in Wikipedia, WikiSym 07, PDF at WikiSym

- V. Zlatic, M. Bozicevic, H. Stefancic, and M. Domazet. Wikipedias: Collaborative web-based encyclopedias as complex networks. Physical Review E, 74(1):016115, 2006.

Trust and quality, in particular metrics

- Adler, B.T. and de Alfaro, L. 2007. A Content-Driven Reputation System for the Wikipedia. In Proceedings of the 16th International Conference on the World Wide Web. 261-270, (May, 2007), Banff, Canada.

- Adler, B.T., Chatterjee, K., de Alfaro, L., Faella, M., Pye, I. and Raman, V. 2008. Assigning Trust To Wikipedia Content. In Proceedings of the 2008 International Symposium on Wikis. (September, 2008), Porto, Portugal.

- Athenikos, Sofia J. and Xia Lin (2009), Visualizing Intellectual Connections among Philosophers Using the Hyperlink & Semantic Data from Wikipedia, Proceedings of the 5th International Symposium on Wikis and Open Collaboration (WikiSym '09). PDF

- Blumenstock, J.E. 2008. Size Matters: Word Count as a Measure of Quality on Wikipedia. In Proceedings of the 17th international conference on World Wide Web. 1095-1096, (April, 2008). Beijing, China.

- Chesney, T. (2006). An empirical examination of Wikipedia's credibility. First Monday, 11(11), 2006.

- Crawford, H. Encyclopedias. In R. Bopp and L. C. Smith, editors, Reference and information services: an introduction, 3rd ed., pages 433–459, Englewood, CO, 2001. Libraries Unlimited.

- Desilets, A., S. Paquet, and N. Vinson (2006). Are wikis usable? In Proc. ACM WikiSym, 2006.

- Dondio, P. and Barrett, S. 2007. Computational Trust in Web Content Quality: A Comparative Evaluation on the Wikipedia Project. In Informatica – An International Journal of Computing and Informatics, 31/2, 151-160.

- Encyclopaedia Britannica (2006). Fatally flawed: refuting the recent study on encyclopedic accuracy by the journal Nature, March 2006.

- Giles, Jim (2005). Internet encyclopaedias go head to head. Nature, 438:900–901, 2005.

- Mark Kramer, Andy Gregorowicz, and Bala Iyer. 2008. Wiki trust metrics based on phrasal analysis. In Proceedings of the 4th International Symposium on Wikis (WikiSym '08). ACM, New York, NY, USA, Article 24, 10 pages. DOI=10.1145/1822258.1822291, PDF from wikisym.org

- “Wiki users receive very little guidance on the trustworthiness of the information they find. It is difficult for them to determine how long the text in a page has existed, or who originally authored the text. It is also difficult to assess the reliability of authors contributing to a wiki page. In this paper, we create a set of trust indicators and metrics derived from phrasal analysis of the article revision history. These metrics include author attribution, author reputation, expertise ratings, article evolution, and text trustworthiness. We also propose a new technique for collecting and maintaining explicit article ratings across multiple revisions.”

- Aniket Kittur, Bongwon Suh, and Ed H. Chi. 2008. Can you ever trust a wiki?: impacting perceived trustworthiness in wikipedia. In Proceedings of the 2008 ACM conference on Computer supported cooperative work (CSCW '08). ACM, New York, NY, USA, 477-480. DOI=10.1145/1460563.1460639 http://doi.acm.org/10.1145/1460563.1460639, PDF from psu.edu

- “Wikipedia has become one of the most important information resources on the Web by promoting peer collaboration and enabling virtually anyone to edit anything. However, this mutability also leads many to distrust it as a reliable source of information. Although there have been many attempts at developing metrics to help users judge the trustworthiness of content, it is unknown how much impact such measures can have on a system that is perceived as inherently unstable. Here we examine whether a visualization that exposes hidden article information can impact readers' perceptions of trustworthiness in a wiki environment. Our results suggest that surfacing information relevant to the stability of the article and the patterns of editor behavior can have a significant impact on users' trust across a variety of page types.”

- Andrew Lih, Wikipedia as participatory journalism, 5th International Symposium on Online Journalism (April 16-17, 2004) University of Texas at Austin, PDF CiteSeerX

- “This study examines the growth of Wikipedia and analyzes the crucial technologies and community policies that have enabled the project to prosper. It also analyzes Wikipedia’s articles that have been cited in the news media, and establishes a set of metrics based on established encyclopedia taxonomies and analyzes the trends in Wikipedia being used as a source.”

- Lim, E.P., Vuong, B.Q., Lauw, H.W. and Sun, A. 2006. Measuring Qualities of Articles Contributed by Online Communities. In Proceedings of the 2006 IEEE/WIC/ACM International Conference on Web Intelligence. 81-87, (December, 2006), Hong Kong.

- Roth, C., Taraborelli, D., Gilbert, N. (2011) Preface, Symposium on collective representations of quality. pdf http://dx.doi.org/10.1007/s11299-011-0092-7

- Sabel, Mikalai; A. Garg, and R. Battiti. WikiRep: Digital reputations in virtual communities. In Proceedings of XLIII Congresso Annuale AICA, pages 209–217, Oct 2005.

- Thomas Wöhner and Ralf Peters, Assessing the Quality of Wikipedia Articles with Lifecycle Based Metrics, Proceedings of the 5th International Symposium on Wikis and Open Collaboration (WikiSym '09).

- “[...] quality assessment has been becoming a high active research field. In this paper we offer new metrics for an efficient quality measurement. The metrics are based on the lifecycles of low and high quality articles, which refer to the changes of the persistent and transient contributions throughout the entire life span.”

- Stvilia, B., Twidale, M.B., Smith, L.C. and Gasser, L. 2005. Assessing information quality of a community-based encyclopedia. In Proceedings of the International Conference on Information Quality, 442–454, (November, 2005), Cambridge, USA

- Zeng, H.; M. Alhossaini, L. Ding, R. Fikes, and D. L. McGuinness. Computing Trust from Revision History. In Proceedings of the 2006 International Conference on Privacy, Security and Trust, October 2006.

Various subjects

This covers topics such as embedded wiki tools, wiki policies, passive use and communities of users.

- Dekel, U. A Framework for Studying the Use of Wikis in Knowledge Work Using Client-Side Access Data, WikiSym '07, PDF

- Hoisl, B., Aigner, W. and Miksch, S. 2007. Social Rewarding in Wiki Systems – Motivating the Community. In Proceedings of the second Online Communities and Social Computing. 362-371, (July, 2007), Beijing, China.

- J. F. Ortega & J. G. Gonzalez-Barahona. Quantitative Analysis of the Wikipedia Community of Users, WikiSym '07, PDF

- Sabel, Mikalai (2007). Structuring wiki revision history. In Proceedings of the 2007 International Symposium on Wikis. 125-130. (October, 2007), Montreal, Canada.

Wiki pedagogics and analysis of collaboration in learning

This section focuses on articles that deal with assessment, rubrics, metrics and analytics. See also other articles in the Wikis category.

- Bruns, A. and Humphreys, S. 2005. Wikis in Teaching and Assessment: The M/Cyclopedia Project, PDF (dead link)

- Axel Bruns and Sal Humphreys (2007). Building Collaborative Capacities in Learners: The M/Cyclopedia Project, Revisited (WikiSym 2007) Abstract - Full paper (PDF)

- MacDonald, J. (2003). Assessing online collaborative learning: process and product, Computers & Education, Vol. 40, Issue 4, pp. 337-391

- Swan, K., Shen, J. & Hiltz, R. 2006. Assessment and collaboration in online learning. Journal of Asynchronous Learning Networks, 10 (1), 45-62.