Citizen science: Difference between revisions

m (→Evaluation) |

m (→Evaluation) |

||

| (90 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

{{ | {{incomplete}} | ||

Work on this piece started in January 2012. It includes some rough literature review on chosen topics, in particular with respect to topics like "how do participants learn", "in what respect are citizens creative", "what is their motivation", "how do communities work". In other words, '''this article is just a "note taking" piece''' and it should be revised some day ... | |||

'''See also:''' | |||

* [[Portal: citizen science|The citizen science portal]] centralizes EduTechWiki resources on citizen science, i.e. structured entries on citizen science [[List of citizen science projects|projects]], [[List of citizen science infrastructures|infrastructures]], and [[List of citizen science software|software]]. | |||

== Introduction == | == Introduction == | ||

| Line 11: | Line 11: | ||

Citizen science does not have a uniquely accepted definition. It could mean: | Citizen science does not have a uniquely accepted definition. It could mean: | ||

* Participation of citizen for collection of data, for example observation of animals, pollution, or plant growth. | * Providing computing power through automatic middleware (a typical example is the captcha mechanism in this wiki for curbing [[spam|spam]]) | ||

* Participation of citizen for analyzing data, in various forms. | * Participation of citizen for collection of data, for example observation of animals, pollution, or plant growth. This variant is a form of "crowd sourcing". | ||

* Dissemination of scientific thought and | * Participation of citizen for categorizing and analyzing data, in various forms. An example would be helping to recognize patterns (e.g. forms of galaxies) | ||

* Amateur science, i.e. citizen create scientific thoughts and other products. | * Dissemination of scientific thought and results in schools in order to promote engagement with science or with the intent to help updating the curriculum. | ||

* Citizen assessment of science and scientific projects | * Participatory action research (also called "extreme citizen science") | ||

* Amateur science, i.e. citizens create scientific thoughts and other products (e.g. discovery of new species) | |||

* Citizen assessment of science and scientific projects. | |||

See also: [[e-science]] | |||

=== Types of citizen science === | |||

The variety of citizen science programs is important with respect to many criteria, e.g.: aims, target population, locations (schools, museums, media, Internet groups), forms, subject areas, tasks, etc. | |||

==== Conceptual models ==== | |||

Cooper et al. (2007) distinguish between the citizen science model and the participatory action research model. The former uses citizens as data collectors who in return receive recognition (including results of the study). Participatory action research {{quotation|begins with the interests of participants, who work collaboratively with professional researchers through all steps of the scientific process to find solutions to problems of community relevance. Finn (1994) outlined three key elements of participatory research: (1) it responds to the experiences and needs of the community, (2) it fosters collaboration between researchers and community in research activities, and (3) it promotes common knowledge and increases community awareness. Although citizen science can have research and education goals similar to many participatory action research projects (Finn 1994 and below), citizen science is distinct from participatory action research in that it occurs at larger scales and typically does not incorporate iterative or collaborative action.}} However, some citizen science projects do actively try to engage citizens to participate, as we shall try to show below. | |||

Wiggins and Crowston (2011) identified {{quotation|five mutually exclusive and exhaustive types of projects, which [they] labelled Action, Conservation, Investigation, Virtual and Education. Action projects employ volunteer-initiated participatory action research to encourage participant intervention in local concerns. Conservation projects address natural resource management goals, involving citizens in stewardship for outreach and increased scope. Investigation projects focus on scientific research goals in a physical setting, while Virtual projects have goals similar to Investigation projects, but are entirely ICT-mediated and differ in a number of other characteristics. Finally, Education projects make education and outreach primary goals}}. | |||

Haklay (in press) distinguished between two broad categories: ''classic citizen science'' and ''citizen cyberscience''. | |||

* Classic: Observation (e.g. birds), environmental management | |||

* Citizen cyberscience: volunteered computing, volunteered thinking and participatory sensing. | |||

In the same book chapter, Haklay (in press) then presents a framework for classifying the level of participation and engagement of participants in citizen science activities. | |||

# Crowdsourcing: Citizens participate by providing computing power or by doing automatic data collection, e.g. carrying sensors. Cognitive engagement is minimal. | |||

# Distributed Intelligence: Participants are asked to observe and collect data (e.g. birds) and/or carry out simple interpretation activities. A typical example of the latter is GalaxyZoo. Participants need some basic training. | |||

# Participatory Science: Participants define the problem and, in consultation with scientists and experts, a data collection method is devised. Typical examples are environmental justice cases, but it also can occur in distributed intelligence settings when participants are allowed/encouraged to formulate new questions. In both cases researchers are in charge of detailed analysis. | |||

# Extreme Citizen Science (collaborative science): Both non-professional and professional scientists are involved in identifying problems, data collections, etc. Participation level can change, i.e. participants may or may not be involved in analysis and publication of results. In this mode, scientists also should act as facilitators and not just experts. | |||

Haklay argues that this ladder could be implemented in most projects. E.g. in volunteer crowdsourcing projects, most participants may just provide computing power. Others could help with technical problems, still others could e.g. rewrite parts of the software or get in touch with scientists and suggest new research directions. Finally, citizen science also may encourage scientists to become citizens, i.e. consider how their projects could integrate with society in several ways. Both aspects are related to the {{quotation|intriguing possibility is that citizen science will work as an integral part of participatory science in which the whole scientific process is performed in collaboration with the wider public.}} | |||

==== Empirical models ==== | |||

Wiggins and Crowston (2012) then [http://crowston.syr.edu/system/files/hicss-45-final.pdf created two typologies of citizen science projects], using data from 63 projects. A first typology is based on twelve ''participation tasks'', i.e. Observation, Species identification, Classification or tagging, Data entry, Finding entities, Measurement, Specimen/sample collection, Sample analysis, Site selection &/or description, Geolocation, Photography, Data analysis, and Number of tasks. The other typology is based on ten ''project goals'', i.e. Science, Management, Action, Education, Conservation, Monitoring, Restoration, Outreach, Stewardship, and Discovery. | |||

Participation clusters: | |||

# Involve observation and identification tasks, but never require analysis. (13) | |||

# Involve observation, data entry, and analysis, but include no locational tasks such as site selection or geolocation (6) | |||

# Engage participants in a variety of tasks, the only participation task not represented is sample analysis (17) | |||

# Engage the public in every participation task considered (15) | |||

# Involve reporting, using observations, species identification, and data entry, but with very few additional participation opportunities (12) | |||
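
As a quick consistency check on the participation clusters above, the cluster sizes given in parentheses can be summed and compared with the 63 projects mentioned in the text. This is only an illustrative sketch; the variable names are made up for this note and the computed percentage shares are not reported by Wiggins and Crowston (2012).

```python
# Cluster sizes as given in parentheses in the list above (cluster number -> number of projects).
participation_cluster_sizes = {1: 13, 2: 6, 3: 17, 4: 15, 5: 12}

# The total should match the 63 projects mentioned in the text.
total_projects = sum(participation_cluster_sizes.values())

# Share of each cluster in percent (derived here for illustration only).
cluster_shares = {
    cluster: round(100 * size / total_projects, 1)
    for cluster, size in participation_cluster_sizes.items()
}

print(total_projects)
print(cluster_shares)
```

The sizes add up to the 63 projects of the sample, so every project falls into exactly one participation cluster.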

Goal clusters: | |||

# Afforded nearly equal weights (midpoint of the scale or higher) to each of the goal areas | |||

# Most strongly focused on science | |||

# Science is the most important goal, but education, monitoring, and discovery are only slightly less important on average | |||

# Science, conservation, monitoring and stewardship are most important, while discovery is less valued than in the preceding clusters | |||

# Outlier | |||

For other typologies, see Wiggins and Crowston (2012). | |||

== Topics in the study of citizen science projects == | |||

=== Motivation === | |||

[[Motivation]] and motivation in education are complex constructs. A typical general model includes several components. E.g. Herzberg's (1959) five component model of job satisfaction includes achievement, recognition, work itself, responsibility, and advancement. | |||

Some recent research by Raddick et al. (2010) about motivations of participants in cyberscience projects analyzed free forum messages and structured interviews. The first mentioned categories include the subject (astronomy), contribution and vastness. Learning (3%) and Science (1%) were minor. Looking at all responses, the same motivations - subject (46%), contribution (22%), vastness (24%) - still dominate, but other motivations exceed 10%, e.g. beauty (16%), fun (11%) and learning (10%). | |||

It might be interesting to also look at personality traits of participants. E.g. Furnham et al. (1999), in a study about personality and work motivation, found that extraversion as measured by the Eysenck Personality Profile correlates with a preference for Herzberg's motivators, whereas "neuroticism" correlates with so-called hygiene factors, i.e. the environment. In other words, extraverts tend to be motivated by intrinsic factors, whereas others tend to be motivated by extrinsic ones. | |||

=== Virtual organisations for citizen science === | |||

An important variant of citizen science uses citizens as helpers for research. {{quotation|Citizen science is a form of organisation design for collaborative scientific research involving scientists and volunteers, for which internet-based modes of participation enable massive virtual collaboration by thousands of members of the public.}} (Wiggins and Crowston, 2010:148). The authors argue that virtual organisations for citizen science are a bit different from other virtual organizations: {{quotation|The project level of group interaction is distinct from those of small work groups and organisations (Grudin, 1994), which has implications for organisation design efforts. Project teams and communities of practice can be distinguished by their goal orientation among other features (Wenger, 1999), but empirical observation of citizen science VOs to date indicates a hybrid ‘community of purpose’ might better describe many projects, with characteristics of both a project team and a community of practice or interest.}} (p. 159). | |||

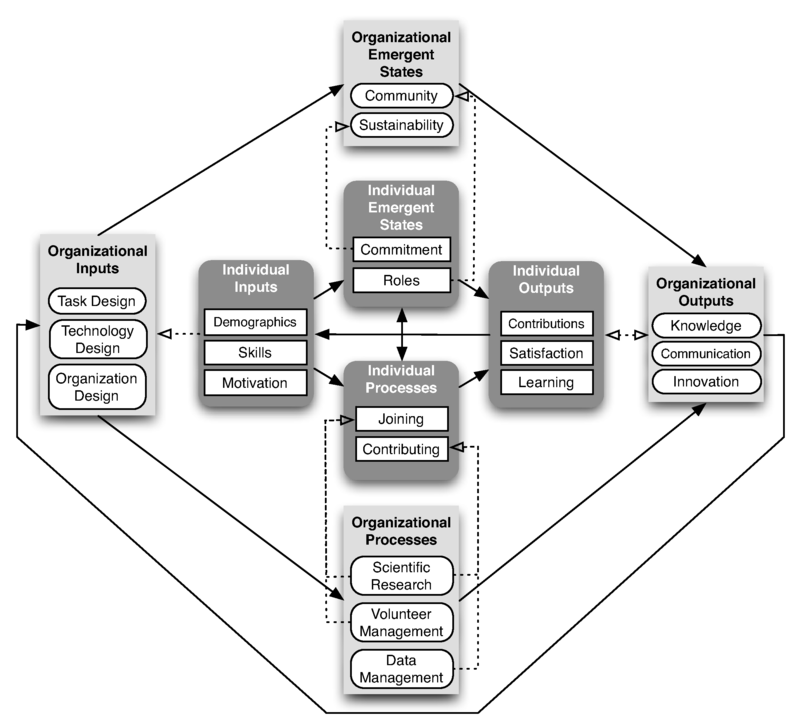

Wiggins and Crowston (2010) then suggest a conceptual model of citizen science virtual organizations. | |||

[[image:wiggins-crowston-citizen-science-model.png|thumb|800px|none|Source: [http://crowston.syr.edu/system/files/WigginsCrowstonIJODE2010.pdf Wiggins and Crowston, Preprint]. Copyright A Wiggins and K. Crowston, reproduced with permission.]] | |||

A new version of this model is under preparation, according to K. Crowston (email communication 1/2012). | |||

=== Learning in citizen science projects === | |||

Luther et al. (2009) related learning in citizen science projects to Wikipedia and open source (OSS) participation. {{quotation|researchers have identified some surprising commonalities between Wikipedia and OSS worth mentioning here. The first is legitimate peripheral participation (LPP), a theory of social learning which holds that novices in a community of practice may transform into experts by observing expert practices and taking on increasingly complex work (Lave & Wenger, 1991). In the case of Wikipedia, a user may begin by fixing typos, gradually making more substantial edits until she is writing entire articles from scratch (Bryant et al. 2005). In OSS, bug reporters may write a few lines of code to fix a glitch and eventually learn to code new modules}}. | |||

The CAISE report argues that {{quotation|educational research shows that people have greater motivation to engage and learn if the subject matter is directly relevant to their lives and interests and/or if the learning process is interactive—one in which the learner can directly affect the learning process, content, and/or outcomes of the experience (Falk 2001)}}. | |||

According to Cooper et al. (2007), {{quotation|Citizen science also provides informal learning experiences that improve science literacy (Krasny and Bonney 2005, Evans et al. 2005). Several studies have found that citizen science projects at the Cornell Laboratory of Ornithology have improved participants’ knowledge about biology and natural history, engaged them directly in the process of inquisitive thinking, and increased their ability to frame questions scientifically (Bonney and Dhondt 1997, Trumbull et al. 2000, 2005, Lewenstein 2001, Bonney 2004, Krasny and Bonney 2005).}} | |||

Sternberg (according to Lehtinen, 2010) points out that wisdom - i.e. the highest end of the continuum that goes from data to information, from information to knowledge and from knowledge to wisdom - is not so much taught directly but learned as a by‑product of the activities in practical situations and working or learning environments. | |||

=== Citizen science and inquiry learning === | |||

According to van Joolingen et al. (2007), {{quotation|science learning should also focus on the processes and methods used by scientists to achieve such results. One obvious way to bring students into contact with the scientific way of working is to have them engage in the processes of scientific inquiry themselves, by offering them environments and tasks that allow them to carry out the processes of science: orientation, stating hypotheses, experimentation, creating models and theories, and evaluation (de Jong 2006a). Involving students in the processes of science brings them into the closest possible contact with the nature of scientific understanding, including its strengths, problems and limitations (Dunbar 1999). This is the main claim of inquiry learning: engaging learners in scientific processes helps them build a personal knowledge base that is scientific, in the sense that they can use this knowledge to predict and explain what they observe in the natural world.}} | |||

'''Adaptation of citizen science projects to inquiry learning in formal settings''' | |||

There is a fairly strong connection between some citizen science projects and [[inquiry-based learning|inquiry learning]] projects conducted in formal school settings. Some of the latter participate in citizen science projects, others are somewhere in between (i.e. may use or even produce authentic data). Adapting citizen science to a school context may not be easy, in particular when scientists outsource the development to curriculum developers who are not familiar with scientific inquiry. | |||

Trumbull et al. (2005) describe the adaptation of Cornell Laboratory of Ornithology citizen science labs to US National Science Education Standards, i.e. curricular content and tasks for classroom use. Evaluation of the first version of the Classroom FeederWatch (CFW) project did not show evidence of progress in scientific thinking as defined by the US education standard: {{quotation|The evaluation data overall failed to support the claim that students learned inquiry abilities or developed understandings of inquiry as a result of participating in activities associated with CFW’s early materials.}} (p. 887). The authors attribute the problems to several factors. Firstly, teachers lacked understanding of both scientific inquiry and inquiry learning. The US standard seems too difficult to understand and translate into practice, and the authors also mention that the [[GenScope]] project was challenged in a similar way. Furthermore, students didn't understand that their data was actually being used for real and therefore may have been less motivated. | |||

Probably the most interesting finding was that {{quotation|the early versions of CFW featured a version of science and scientists that was too abstract and free of context. For example, the CFW exploration that directly addressed the scientific process presented the following sequence: formulate a question, predict an answer, develop procedures to gather information, collect and analyze data, communicate results, and raise new questions. As an abstraction, the description was adequate. For the purposes of teaching inquiry, the level of abstraction provided a simplistic, even distorted model of practical elements of scientific inquiry (Bencze & Hodson, 1999; Finley & Pocovi, 2000). The description was not adequate as a teaching strategy for learning how to conduct studies about birds. In particular, exploration narratives failed to explain how and why an ornithologist came to ask questions, which question got addressed, how she decided to gather data to address the question, or how he made sense of the data. There were, in short, no models that either students or teachers could examine that linked content (birds) to inquiry (bird studies).}} (p. 898). | |||

Last but not least, lack of birds and inability to identify or count birds were also major problems. Typically, bird lovers would not be challenged by either one. It's easy to build feeders to attract birds and bird counting is easy when one knows how to focus on particular features, called ''field marks''. | |||

The development team made three broad recommendations: (a) integrate content knowledge about birds and better explain inquiry, (b) provide discipline-specific models for conducting inquiry (e.g. ornithologists’ for bird studies), and (c) assess outcomes mindful of broad rather than narrow definitions of inquiry to better reflect the Standards. In addition, the authors point out that there were rather severe communication problems between curriculum developers and ornithologists. The former lacked understanding of inquiry and the latter did not understand the crucial role of prior knowledge needed to plan and conduct bird studies. | |||

In a different study on adapting an [[e-science]] environment, Waight and Abd-El-Khalick came to similar conclusions. | |||

'''Lessons from inquiry learning designs and technology''' | |||

Since many citizen science projects are quite ambitious, there might be a need for training users in a more formal way than participatory apprenticeship. Two or three decades of research in computer-supported inquiry learning may provide a few insights. According to van Joolingen et al. (2007), {{quotation|Support for learning processes typically takes the form of cognitive tools or scaffolds. The basic idea of most cognitive tools is to boost the performance of learning processes by providing information about them, by providing templates, or by constraining the learner's interaction with the learning environment.}} In a way, successful citizen learning platforms are doing exactly that. Besides the design of the environment, there are many other factors that influence the learning process, in particular personality traits, prior knowledge (including understanding of inquiry), learner motivation, the teacher, the technological infrastructure, clear assessment criteria, etc. | |||

=== Evaluation of informal science education === | |||

Informal science education refers mostly to larger or smaller top-down initiatives that aim to raise interest for STEM (Science, technology, engineering and mathematics) subjects. As an example, {{quotation|The Informal Science Education (ISE) program at the National Science Foundation (NSF) invests in projects designed to increase interest in, engagement with, and understanding of science, technology, engineering, and mathematics (STEM) by individuals of all ages and backgrounds through self-directed learning experiences}} ([http://informalscience.org/evaluations/eval_framework.pdf Ucko, 2008: 9]) | |||

'''Things to evaluate''' | |||

Dierking (2008:19), a contributor to the US ''Framework for Evaluating Impacts of Informal Science Education Projects'' in Friedman (2008), suggests that a project should be able to answer at least the following questions at the outset: | |||

# What audience impacts will this project facilitate? | |||

# What approach/type of project will best enable us to accomplish these goals and why do we feel that this is the best approach to take? | |||

# How will we know whether the activities of the project accomplished these intended goals and objectives and with what evidence will we support the assertion that they did? | |||

# How will we ensure that unanticipated outcomes are also documented? | |||

This US "Informal education and outreach framework" (Ucko 2008:11, Dierking 2008:21) identifies six impact categories with respect to both public audiences and professional audiences. | |||

{| class="wikitable" | |||

|+ The Informal Education and Outreach Framework (Ucko 2008:11, Dierking 2008:21) | |||

! Impact Category !! Public Audiences !! Professional Audiences !! Generic Definition | |||

|- | |||

| Awareness, knowledge or understanding (of) || STEM concepts, processes, or careers || Informal STEM education/outreach research or practice. || Measurable demonstration of assessment of, change in, or exercise of awareness, knowledge, understanding of a particular scientific topic, concept, phenomena, theory, or careers central to the project. | |||

|- | |||

| Engagement or interest (in) || STEM concepts, processes, or careers || Advancing informal STEM education/outreach field || Measurable demonstration of assessment of, change in, or exercise of engagement/interest in a particular scientific topic, concept, phenomena, theory, or careers central to the project. | |||

|- | |||

| Attitude (towards) || STEM-related topic or capabilities || Informal STEM education/outreach research or practice || Measurable demonstration of assessment of, change in, or exercise of attitude toward a particular scientific topic, concept, phenomena, theory, or careers central to the project or one’s capabilities relative to these areas. Although similar to awareness/interest/engagement, attitudes refer to changes in relatively stable, more intractable constructs such as empathy for animals and their habitats, appreciation for the role of scientists in society or attitudes toward stem cell research. | |||

|- | |||

| Behavior (related to) || STEM concepts, processes, or careers || Informal STEM education/outreach research or practice || Measurable demonstration of assessment of, change in, or exercise of behavior related to a STEM topic. These types of impacts are particularly relevant to projects that are environmental in nature or have some kind of a health science focus since action is a desired outcome. | |||

|- | |||

| Skills (based on) || STEM concepts, processes, or careers || Informal STEM education/outreach research or practice || Measurable demonstration of the development and/or reinforcement of skills, either entirely new ones or the reinforcement, even practice, of developing skills. These tend to be procedural aspects of knowing, as opposed to the more declarative aspects of knowledge impacts. Although they can sometimes manifest as engagement, typically observed skills include a level of depth and skill such as engaging in scientific inquiry skills (observing, classifying, exploring, questioning, predicting, or experimenting), as well as developing/practicing very specific skills related to the use of scientific instruments and devices (e.g. using microscopes or telescopes successfully). | |||

|- | |||

| Other || Project specific || Project specific || Project specific | |||

|} | |||

From this table, Dierking (2008:23) then derives a simple worksheet for ''Developing Intended Impacts, Indicators & Evidence'' | |||

{| class="wikitable" | |||

|+ worksheet for ''Developing Intended Impacts, Indicators & Evidence'' | |||

|- | |||

! ISE Category of Impact !! Potential indicators !! Evidence that impact was attained | |||

|- | |||

| Awareness, knowledge or understanding of STEM concepts, processes or careers || || | |||

|- | |||

| Engagement or interest in STEM concepts, processes, or careers || || | |||

|- | |||

| Attitude towards STEM-related topics or capabilities || || | |||

|- | |||

| Behavior resulting from experience || || | |||

|- | |||

| Skills based on experience || || | |||

|- | |||

| Other (describe) || || | |||

|} | |||

'''Types of evaluation''' | |||

The NSF ''Framework for Evaluating Impacts of Informal Science Education Projects'' workshop report (p. 17) discusses several types of evaluation. In addition to the usual formative and summative evaluation, it adds front-end evaluation and remedial evaluation: | |||

* '''Front-end evaluation.''' This means asking questions to find out what your audience members already know, what they don’t know, what they are interested in and what they are not interested in. Knowing these before you develop a project can save you from having to create unnecessary experiences, or from neglecting to treat subjects which are both interesting and needed. | |||

* '''Formative evaluation'''. Decades of uses of formative evaluation, which is iterative testing of learning strategies to improve them as they are developed, have proven invaluable over and over. No matter how well we imagine a strategy will work, it takes exposure to real audience members to discover just what actually works, and for whom. With formative evaluation, we can improve our strategies before they are set in concrete and become too expensive to change. | |||

* '''Remedial evaluation'''. Remedial evaluation requires discipline on our part: saving enough money and time so that we can look at our “finished” products, investigate how audience members experience them, and make hopefully minor adjustments to improve (remediate) the end results. Remedial evaluation is concentrated near the end of a project, like summative evaluation, and may use the same tools. But the purpose of remedial evaluation is different: it is performed to make one last round of improvements to the project’s deliverables, rather than to evaluate the impact of the project. Remedial evaluation can take place before, during or after summative evaluation, and may even use the same data. | |||

([http://informalscience.org/evaluations/eval_framework.pdf Framework for Evaluating Impacts of Informal Science Education Projects], retrieved 16:57, 5 January 2012 (CET)). | |||

== Software == | |||

'''See also''': [[List of citizen science software]]. Contents below will go away once we got this completed - [[User:Daniel K. Schneider|Daniel K. Schneider]] ([[User talk:Daniel K. Schneider|talk]]) 19:08, 28 November 2013 (CET) | |||

=== Guides === | |||

Robert D. Stevenson et al. (2003) at the University of Massachusetts have developed open source software tools that enable nonexperts to produce customised field guides. Field guides are used to identify species, a task that is common to many projects. | |||

* R.D. Stevenson, et al. Electronic field guides and user communities in the eco-informatics revolution. Conserv. Ecol., 7 (2003), p. 3. | |||

=== Volunteer computing === | |||

* [http://boinc.berkeley.edu/ BOINC]. Quote: "Volunteer computing is an arrangement in which people (volunteers) provide computing resources to projects, which use the resources to do distributed computing and/or storage." ([http://boinc.berkeley.edu/trac/wiki/VolunteerComputing What is volunteer computing?], 7/2013). | |||

=== Data collection === | |||

* [http://www.epicollect.net/ Epicollect] {{quotation|provides a web application for the generation of forms and freely hosted project websites (using Google's AppEngine) for many kinds of mobile data collection projects. Data can be collected using multiple mobile phones running either the Android Operating system or the iPhone (using the EpiCollect mobile app) and all data can be synchronised from the phones and viewed centrally (using Google Maps) via the Project website or directly on the phones.}} (nov 2012, by early 2013 there will be a new version). Data export in several formats. | |||

* Pathfinder (Luther et al. 2009: abstract) {{quotation|is an online environment that challenges this traditional division of labor by providing tools for citizen scientists to collaboratively discuss and analyze the data they collect.}}. The design of Pathfinder was inspired by three main phenomena: complex online participation (e.g. Wikipedia or OSS), argument mapping systems such as [[CompendiumLD]], and social data analysis (SDA), e.g. the [http://sense.us sense.us] system. There are two main components to Pathfinder: '''tracks''' (data sets) and '''discussions'''. Both are linked. In addition, Pathfinder allows annotating a pattern or trend found in a data set as a ''finding''. Finally, every data set (track) page also contains comments and a basic wiki. The latter can be used for general casual discussions. {{quotation|The discussions component of Pathfinder allows users to engage in collaborative, structured analyses around tracks. Each discussion page is organized into three sections: the topic, responses to the topic, and an overview of these responses, displayed below the topic but before the responses themselves}} (Luther et al., 2009: 242). Responses can be tagged as so-called "Milestones", i.e. ''Questions, Hypotheses, Evidence (Pro or Con), Background, Prediction, Conclusion, or To-Do''. | |||

* Ellul et al. (2011) developed a Flexible Database-Centric Platform for Citizen Science Data Capture. | |||
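
The Pathfinder structure described in the list above (tracks linked to discussions, responses tagged as milestones, findings attached to data sets) can be sketched as a small data model. This is only an illustrative sketch: all class names, field names and the sample data are assumptions made for this note and do not reflect Pathfinder's actual implementation.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Milestone(Enum):
    """Milestone tags as listed in the text (Luther et al., 2009)."""
    QUESTION = "Question"
    HYPOTHESIS = "Hypothesis"
    EVIDENCE_PRO = "Evidence (Pro)"
    EVIDENCE_CON = "Evidence (Con)"
    BACKGROUND = "Background"
    PREDICTION = "Prediction"
    CONCLUSION = "Conclusion"
    TODO = "To-Do"


@dataclass
class Response:
    author: str
    text: str
    # Assumption of this sketch: a response may also be left untagged.
    milestone: Optional[Milestone] = None


@dataclass
class Discussion:
    topic: str
    responses: List[Response] = field(default_factory=list)

    def overview(self) -> Dict[Milestone, int]:
        """Count tagged responses per milestone (hypothetical stand-in for the overview section)."""
        counts: Dict[Milestone, int] = {}
        for response in self.responses:
            if response.milestone is not None:
                counts[response.milestone] = counts.get(response.milestone, 0) + 1
        return counts


@dataclass
class Track:
    """A data set ('track') with its linked discussions and annotated findings."""
    name: str
    discussions: List[Discussion] = field(default_factory=list)
    findings: List[str] = field(default_factory=list)


# Hypothetical sample data, invented for illustration.
track = Track(name="example track")
discussion = Discussion(topic="Is the trend seasonal?")
discussion.responses.append(Response("alice", "Could it follow the seasons?", Milestone.QUESTION))
discussion.responses.append(Response("bob", "Counts peak every June.", Milestone.EVIDENCE_PRO))
discussion.responses.append(Response("carol", "Nice data set!"))
track.discussions.append(discussion)
track.findings.append("Yearly peak in June")
```

In this sketch the overview is derived from the responses rather than stored, which mirrors the description of an overview section that summarizes the responses of a discussion.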

=== Human data analysis tasks === | |||

* [https://www.zooniverse.org/ Zooniverse] {{quotation|and the suite of projects it contains is produced, maintained and developed by the Citizen Science Alliance.}} Zooniverse may include the largest collection of citizen science projects. The first project from which this framework was developed was [http://www.galaxyzoo.org/ GalaxyZoo]. | |||

* [[pyBossa]] is a free, open-source, platform for creating and running crowd-sourcing applications that utilize online assistance in performing tasks that require human cognition, knowledge or intelligence such as image classification, transcription, geocoding and more! (retrieved nov 12 2012) | |||

* [https://www.mturk.com/mturk/welcome Amazon Mechanical Turk] can be used to find people. Usually for pay. See [http://experimentalturk.wordpress.com/about/ Experimental turk] for experiments with that. | |||

=== Social science experiments computing games === | |||

* [http://www.xtribe.eu/ Xtribe.eu] {{quotation|is a web platform designed for scientific gaming and social computation. Its task is to help Researchers in the realization of web-experiments by accomplishing those necessary and tedious tasks involving the management of social resources (User registration, validation, selection and pairing). In this way Researchers shall focus entirely on the implementation of the core part of their experiment. On the Users side, everyone may join X-Tribe and enjoy the participation in entertaining scientific experiments.}} (retrieved nov 12 2012) | |||

* [http://experimentalturk.wordpress.com/ experimental turk] using Amazon's mechanical turk. | |||

== Links ==

All of these need to be updated! Instead of doing that we will provide a whole new set of pages by the end of fall 2013 - [[User:Daniel K. Schneider|Daniel K. Schneider]] ([[User talk:Daniel K. Schneider|talk]]) 12:17, 15 July 2013 (CEST)

=== General ===

* [http://en.wikipedia.org/wiki/Citizen_science Wikipedia]

* [http://www.scientificamerican.com/citizen-science/ Citizen Science] (Scientific American). Also includes a larger list of projects.

=== Organizations ===

* [http://www.citizencyberscience.net/ Citizen CyberScience Centre]

* [http://www.ecastnetwork.org/ Expert and Citizen Assessment of Science and Technology]

* [http://research2practice.info/ Research2Practice], US web site for informal STEM education.

* [http://informalscience.org/ InformalScience.org]. A resource and online community for informal learning projects, research and evaluation. (USA)

* [http://www8.open.ac.uk/about/teaching-and-learning/esteem/ eSTEeM] brings together STEM academics to promote innovation and scholarship (Open University, UK)

* http://uclexcites.wordpress.com/

* [https://ec.europa.eu/digital-agenda/en/citizen-science EC page on citizen science] (including links to some EU projects and initiatives)

=== Index pages of projects ===

* [http://scistarter.com/ scistarter] (Science we can do together)

* [http://spacehack.org/ Spacehack], a directory of ways to participate in space exploration

=== On-line environments ===

* [http://exploration.nationalgeographic.com/mongolia Field Expedition: Mongolia]

* [https://www.zooniverse.org/ Zooniverse]

* [http://www.mappingforchange.org.uk/ Mapping for change]. Its mission is to empower individuals and communities to make a difference to their local area through the use of mapping and the applications of geographical information. Also see [http://www.communitymaps.org.uk/ Communitymaps.org]

=== Learning ===

* [https://uclexcites.wordpress.com/2013/09/25/investigating-learning-in-citizen-science1-by-dr-laure-kloetzer/ Investigating learning in Citizen Science/1 by Dr. Laure Kloetzer]

=== Evaluation ===

* [http://informalscience.org/ Informalscience.org] is a resource and online community for informal learning projects, research and evaluation. It includes [http://informalscience.org/evaluation evaluations of several informal science learning projects]

* Friedman, A. (Ed.). (March 12, 2008). Framework for Evaluating Impacts of Informal Science Education Projects [On-line]. http://informalscience.org/evaluations/eval_framework.pdf. Originally at: http://insci.org/resources/Eval_Framework.pdf (broken link)

* [http://www.nsf.gov/pubs/2002/nsf02057/nsf02057.pdf The 2002 User-Friendly Handbook for Project Evaluation], NSF (USA) by Joy Frechtling (Westat)

== Bibliography ==

* Arnstein, S.R. (1969). A Ladder of Citizen Participation. Journal of the American Institute of Planners, 35(4), 216-224

* Ballard, H., Pilz, D., Jones, E.T., and Getz, C. (2005). Training Curriculum for Scientists and Managers: Broadening Participation in Biological Monitoring. Corvallis, OR: Institute for Culture and Ecology.

* Baretto, C., Fastovsky, D. and Sheehan, P. (2003). A Model for Integrating the Public into Scientific Research. Journal of Geoscience Education. 50 (1). p. 71-75.

* Bauer, M., Petkova, K., and Boyadjieva, P. (2000). Public Knowledge of and Attitudes to Science: Alternative Measures That May End the "Science War". Science Technology and Human Values. 25 (1). p. 30-51.

* Bencze, L., & Hodson, D. (1999). Changing practice by changing practice: Toward a more authentic science and science curriculum development. Journal of Research in Science Teaching, 36(5), 521–539.

* Bonney, R. 2004. Understanding the process of research. Chapter 12 in D. Chittenden, G. Farmelo, and B. Lewenstein, editors. Creating connections: museums and public understanding of current research. Altamira Press, California, USA.

* Bonney, R. and LaBranche, M. (2004). Citizen Science: Involving the Public in Research. ASTC Dimensions. May/June 2004, p. 13.

* Bonney, R., Cooper, C.B., Dickinson, J., Kelling, S., Phillips, T., Rosenberg, K.V. and Shirk, J. (2009). Citizen Science: A Developing Tool for Expanding Science Knowledge and Scientific Literacy. BioScience. 59 (11). p. 977-984.

* Bonney, R., Ballard, H., Jordan, H., McCallie, E., Phillips, T., Shirk, J. and Wilderman, C. (2009). Public Participation in Scientific Research: Defining the Field and Assessing Its Potential for Informal Science Education. A CAISE Inquiry Group Report, Center for Advancement of Informal Science Education (CAISE), Washington, DC, Tech. Rep.

* Bonney, R., and A. A. Dhondt (1997). Project FeederWatch. Chapter 3 in K. C. Cohen, editor. Internet links to science education: student scientist partnerships. Plenum Press, New York, New York, USA.

* Bouwen, R., and T. Taillieu. 2004. Multi-party collaboration as social learning for interdependence: developing relational knowing for sustainable natural resource management. Journal of Community and Applied Social Psychology 14:137–153.

* Bracey, G.L. 2009. “The developing field of citizen science: A review of the literature.” Submitted to Science Education. (not published yet as of 1/2011 ?)

* Bradford, B. M., and G. D. Israel. 2004. Evaluating volunteer motivation for sea turtle conservation in Florida. University of Florida: Agriculture Education and Communication Department, Institute of Agriculture and Food Sciences, AEC 372. http://edis.ifas.ufl.edu.

* Brossard, D., Lewenstein, B., and Bonney, R. (2005). Scientific Knowledge and Attitude Change: The Impact of a Citizen Science Project. International Journal of Science Education. 27 (9). p. 1099-1121.

* Bryant, S.L., Forte, A., and Bruckman, A. Becoming Wikipedian: transformation of participation in a collaborative online encyclopedia. Proc. SIGGROUP 2005, ACM (2005), 1-10.

* Cohn, J. P. (2008). Citizen science: Can volunteers do real research? Bioscience, 58(3), 192–197

* Connors, J. P., Lei, S., & Kelly, M. (2012). Citizen science in the age of neogeography: Utilizing volunteered geographic information for environmental monitoring. Annals of the Association of American Geographers, 102(6), 1267-1289.

* Cooper, C.B., Dickinson, J., Phillips, T., and Bonney, R. (2007). Citizen Science as a Tool for Conservation in Residential Ecosystems. Ecology and Society. 12 (2). [http://www.ecologyandsociety.org/vol12/iss2/art11/ http://www.ecologyandsociety.org/vol12/iss2/art11/]

* Cooper, Seth, Firas Khatib, Adrien Treuille, Janos Barbero, Jeehyung Lee, Michael Beenen, Andrew Leaver-Fay, David Baker, Zoran Popović and Foldit players. Predicting protein structures with a multiplayer online game. ''Nature'' 466, 756-760 (2010).

* De Jong, T. (2006a). Computer simulations – technological advances in inquiry learning. Science 312, 532–533.

* Dunbar, K. (1999). The scientist in vivo: how scientists think and reason in the laboratory. In Model-based Reasoning in Scientific Discovery (eds L. Magnani, N. Nersessian & P. Thagard), pp. 89–98. Kluwer Academic/Plenum Press, New York.

* Ellul, C., Francis, L. and Haklay, M., 2011, A Flexible Database-Centric Platform for Citizen Science Data Capture, Computing for Citizen Science Workshop, in Proceedings of the 2011 Seventh IEEE International Conference on eScience (eScience 2011)

* Evans, C., E. Abrams, R. Reitsma, K. Roux, L. Salmonsen, and P. P. Marra. 2005. The neighborhood nestwatch program: participant outcomes of a citizen-science ecological research project. Conservation Biology 19:589–594.

* Falk, J., (Ed). (2001). Free-choice science education: How we learn science outside of school. New York: Teachers College Press.

* Fernandez-Gimenez, M. E., Ballard, H. L., and Sturtevant, V. E. (2008). Adaptive management and social learning in collaborative and community-based monitoring: A study of five community-based forestry organizations in the western USA. Ecology and Society, 13(2). http://www.ecologyandsociety.org/vol13/iss2/art4/

* Finley, F. N., & Pocovi, M. C. (2000). Considering the scientific method of inquiry. In J. Minstrell & E. H. van Zee (Eds.), Inquiring into inquiry learning and teaching in science (pp. 47–62). Washington, DC: American Association for the Advancement of Science.

* Firehock, K. and West, J. (2001). A brief history of volunteer biological water monitoring using macroinvertebrates. Journal of the North American Benthological Society. 14 (2) p. 197-202.

* Flichy, Patrice (2010), Le sacre de l'amateur : Sociologie des passions ordinaires à l'ère numérique, Seuil.

* Friedman, A. (Ed). (2008). Framework for evaluating impacts of informal science education projects. http://insci.org/resources/Eval_Framework.pdf

* Grey, F. (2009). The age of citizen cyberscience, CERN Courier, 29th April 2009, available WWW http://cerncourier.com/cws/article/cern/38718 Accessed July 2011

* Gyllenpalm, Jakob; Per-Olof Wickman, “Experiments” and the inquiry emphasis conflation in science teacher education, Science Education, 2011, 95, 5 [http://onlinelibrary.wiley.com/doi/10.1002/sce.20446/abstract Abstract]

* Haklay, M. (in press). Citizen Science and Volunteered Geographic Information – overview and typology of participation, in VGI Handbook.

* Hand, Eric (2010). "Citizen science: People power". Nature 466, 685-687

* Hartanto, H., M. C. B. Lorenzo, and A. L. Frio. 2002. Collective action and learning in developing a local monitoring system. International Forestry Review 4:184–195.

* Heer, J., and M. Agrawala. 2008. Design considerations for collaborative visual analytics. ''Information Visualization'' 7:49–62.

* Howe, J. (2006). The Rise of Crowdsourcing. Wired Magazine. June 2006.

* Herzberg, F., Mausner, B., and Snyderman, B. The Motivation to Work. (2nd rev. ed.) New York: Wiley, 1959.

* Irwin, A. (1995). Citizen Science. London: Routledge

* Ison, R., and D. Watson. 2007. Illuminating the possibilities of social learning in the management of Scotland’s water. Ecology and Society 12(1):21. http://www.ecologyandsociety.org/vol12/iss1/art21/.

* Jordan, Rebecca C; Heidi L Ballard, and Tina B Phillips 2012. Key issues and new approaches for evaluating citizen-science learning outcomes. Frontiers in Ecology and the Environment 10: 307–309. http://dx.doi.org/10.1890/110280

* Khatib, Firas; Seth Cooper, Michael D. Tyka, Kefan Xu, Ilya Makedon, Zoran Popović, David Baker, and Foldit Players. Algorithm discovery by protein folding game players. In Proceedings of the National Academy of Sciences (2011).

* Khatib, F., DiMaio, F.; Foldit Contenders Group, Foldit Void Crushers Group, Cooper, S., Kazmierczyk, M., Gilski, M., Krzywda, S., Zabranska, H., Pichova, I., Thompson, J., Popovic, Z., Jaskolski, M., and Baker, D. (2011). Crystal structure of a monomeric retroviral protease solved by protein folding game players. Nature Structural & Molecular Biology. Published online 18 September 2011. doi:10.1038/nsmb.2119

* King, K., C.V. Lynch 1998. “The motivation of volunteers in the nature conservancy - Ohio chapter, a non-profit environmental organization.” Journal of Volunteer Administration 16, (2): 5.

* Kolb, D. A. 1984. Experiential learning: experience as the source of learning and development. Prentice-Hall, Englewood Cliffs, New Jersey, USA.

* Kountoupes, D. and Oberhauser, K. S. (2008). Citizen science and youth audiences: Educational outcomes of the Monarch Larva Monitoring Project. Journal of Community Engagement and Scholarship, 1(1):10–20.

* Krasny, M., and R. Bonney. 2005. Environmental education through citizen science and participatory action research. Chapter 13 in E. A. Johnson and M. J. Mappin, editors. Environmental education or advocacy: perspectives of ecology and education in environmental education. Cambridge University Press, Cambridge, UK.

* Lave, J. and Wenger, E. Situated Learning: Legitimate Peripheral Participation. Cambridge UP, 1991.

* Lehtinen, Erno (2010). Potential of teaching and learning supported by ICT for the acquisition of deep conceptual knowledge and the development of wisdom in De Corte, E. & Fenstad J.E. (eds). From Information to Knowledge; from Knowledge to Wisdom - Wenner-Gren International Series, volume 85. http://www.portlandpress.com/pp/books/online/wg85/

* Lewenstein 2001. PIPE Evaluation Report. Year 2, 1999–2000. Department of Communications, Cornell University, Ithaca, New York, USA.

* Luther, Kurt; Scott Counts, Kristin B. Stecher, Aaron Hoff, and Paul Johns. 2009. Pathfinder: an online collaboration environment for citizen scientists. In Proceedings of the 27th international conference on Human factors in computing systems (CHI '09). ACM, New York, NY, USA, 239-248. DOI=10.1145/1518701.1518741 http://doi.acm.org/10.1145/1518701.1518741

* McCaffrey, R.E. (2005). Using Citizen Science in Urban Bird Studies. Urban Habitats. 3 (1). p. 70-86. http://www.urbanhabitats.org/v03n01/citizenscience_full.html

* McCurley, S. & Lynch, R. (1997). Volunteer management. Downers Grove, Illinois: Heritage Arts.

* Nagarajan, M., Sheth, A., & Velmurugan, S. (2011, March). Citizen sensor data mining, social media analytics and development centric web applications. In Proceedings of the 20th international conference companion on World wide web (pp. 289-290). ACM. http://dl.acm.org/citation.cfm?id=1963315

* National Research Council. (2009). Learning science in informal environments: People, places, and pursuits. Committee on Learning Science in Informal Environments, P. Bell, B. Lewenstein, A. W. Shouse, and M. A. Feder (Eds.) Board on Science Education, Center for Education, Division of Behavioral and Social Sciences and Education. Washington, DC: The National Academies Press.

* Nelson, Brian C.; Diane Jass Ketelhut, Scientific Inquiry in Educational Multi-user Virtual Environments, Educational Psychology Review, 2007, 19, 3, 265

* Osborn, D., Pearse, J. and Roe, A. Monitoring Rocky Intertidal Shorelines: A Role for the Public in Resource Management. In California and the World Ocean: Revisiting and Revising California's Ocean Agenda. Magoon, O., Converse, H., Baird, B., Jines, B, and Miller-Henson, M., Eds. p. 624-636. Reston, VA: ASCE.

* Price, C. A., & Lee, H. S. (2013). Changes in participants' scientific attitudes and epistemological beliefs during an astronomical citizen science project. Journal of Research in Science Teaching. Volume 50, Issue 7, pages 773–801, September 2013. [http://onlinelibrary.wiley.com/doi/10.1002/tea.21090/full Abstract/PDF] {{ar}}

* Raddick, M. Jordan; Georgia Bracey, Pamela L. Gay, Chris J. Lintott, Phil Murray, Kevin Schawinski, Alexander S. Szalay, and Jan Vandenberg (2010). Galaxy Zoo: Exploring the Motivations of Citizen Science Volunteers, Astronomy Education Review 9, 010103 (2010), [http://dx.doi.org/10.3847/AER2009036 DOI:10.3847/AER2009036] - [http://arxiv.org/PS_cache/arxiv/pdf/0909/0909.2925v1.pdf PDF Preprint]

* Reed, J., Raddick, M. J., Lardner, A., & Carney, K. (2013, January). An Exploratory Factor Analysis of Motivations for Participating in Zooniverse, a Collection of Virtual Citizen Science Projects. In System Sciences (HICSS), 2013 46th Hawaii International Conference on (pp. 610-619). IEEE. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=6479908

* Reed, Jason; Whitney Rodriguez, and Angelique Rickhoff. 2012. A framework for defining and describing key design features of virtual citizen science projects. In Proceedings of the 2012 iConference (iConference '12). ACM, New York, NY, USA, 623-625. DOI=10.1145/2132176.2132314 http://doi.acm.org/10.1145/2132176.2132314

* Rotman, Dana; Jenny Preece, Jen Hammock, Kezee Procita, Derek Hansen, Cynthia Parr, Darcy Lewis, and David Jacobs. 2012. Dynamic changes in motivation in collaborative citizen-science projects. In Proceedings of the ACM 2012 conference on Computer Supported Cooperative Work (CSCW '12). ACM, New York, NY, USA, 217-226. DOI=10.1145/2145204.2145238 http://doi.acm.org/10.1145/2145204.2145238

* Sieber, R. (2006). Public Participation and Geographic Information Systems: A Literature Review and Framework. Annals of the Association of American Geographers, 96(3), 491-507

* Silvertown, J. (2009). A New Dawn for Citizen Science. Trends in Ecology & Evolution. 24 (9). p. 467-471

* Spiro, M. (2004). What should the citizen know about science? Journal of the Royal Society of Medicine, 97 (1).

* Stilgoe, J. (2009). Citizen Scientists – Reconnecting science with civil society, Demos, London

* Sternberg, R.J. (2001) Why schools should teach for wisdom: the balance theory of wisdom in educational settings. Educational Psychologist 36, 227–245

* Thompson, S. and Bonney, R. (2007). Evaluating the impact of participation in an on-line citizen science project: A mixed-methods approach. In J. Trant and D. Bearman (Eds.), Museums and the web 2007: Proceedings. Toronto: Archives and Museum Informatics. [http://www.museumsandtheweb.com/mw2007/papers/thompson/thompson.html HTML], retrieved oct. 2012.

* Trumbull, D. J., R. Bonney, D. Bascom, and A. Cabral. 2000. Thinking scientifically during participation in a citizen-science project. Science Education 84:265–27.

* Trumbull, D. J., R. Bonney, and N. Grudens-Schuck. 2005. Developing materials to promote inquiry: lessons learned. Science Education 89(6):879–900.

* Ucko, David A. (2008), Introduction to Evaluating Impacts of NSF Informal Science Education Projects, in Friedman, A. (Ed.). ''Framework for Evaluating Impacts of Informal Science Education Projects'' [On-line]. http://informalscience.org/evaluations/eval_framework.pdf.

* Van Joolingen, W.R; T. De Jong, A. Dimitrakopoulou, Issues in computer supported inquiry learning in science, Journal of Computer Assisted Learning, 2007, 23, 2

* Wiggins, A. and Crowston, K. (2010) ‘Developing a conceptual model of virtual organisations for citizen science’, Int. J. Organisational Design and Engineering, Vol. 1, Nos. 1/2, pp.148–162.

* Wiggins, A., & Crowston K. (2011). From Conservation to Crowdsourcing: A Typology of Citizen Science. Proceedings of the Forty-fourth Hawai'i International Conference on System Science (HICSS-44).

* Waight Noemi, Fouad Abd-El-Khalick, From scientific practice to high school science classrooms: Transfer of scientific technologies and realizations of authentic inquiry, Journal of Research in Science Teaching, 2011, 48, 1. [http://dx.doi.org/10.1002/tea.20393 DOI:10.1002/tea.20393]

* Wiggins, A., & Crowston K. (2012). Goals and tasks: Two typologies of citizen science projects. Forty-fifth Hawai’i International Conference on System Science (HICSS-45)

* Ye, Y. and Kishida, K. Toward an understanding of the motivation of Open Source Software developers. Proc. ICSE 2003, IEEE (2003), 419-429.

* Zook, M., Graham, M., Shelton, T., and Gorman, S., 2010. Volunteered Geographic Information and Crowdsourcing Disaster Relief: A Case Study of the Haitian Earthquake. World Medical & Health Policy: 2(2) Art. 2 DOI: 10.2202/1948-4682.1069

[[category: e-science]]

[[Category: Cloud computing]]

[[Category:Virtual environments]]

[[Category: Community]]

[[Category: citizen science]]

Latest revision as of 15:53, 6 April 2016

Work on this piece started in January 2012. It includes a rough literature review on selected topics, in particular "how do participants learn", "in what respect are citizens creative", "what is their motivation", and "how do communities work". In other words, this article is just a "note taking" piece and it should be revised some day ...

See also:

- The citizen science portal centralizes EduTechWiki resources on citizen science, i.e. structured entries on citizen science projects, infrastructures, and software.

Introduction

Citizen science does not have a uniquely accepted definition. It could mean:

- Providing computing power through automatic middleware (a typical example is the captcha mechanism in this wiki for curbing spam)

- Participation of citizens in the collection of data, for example observation of animals, pollution, or plant growth. This variant is a form of "crowd sourcing".

- Participation of citizens in categorizing and analyzing data, in various forms. An example would be helping to recognize patterns (e.g. forms of galaxies)

- Dissemination of scientific thought and results in schools in order to promote engagement with science or with the intent to help update the curriculum.

- Participatory action research (also called "extreme citizen science")

- Amateur science, i.e. citizens create scientific thoughts and other products (e.g. discovery of new species)

- Citizen assessment of science and scientific projects.

See also: e-science

Types of citizen science

Citizen science programs vary widely with respect to many criteria, e.g.: aims, target population, locations (schools, museums, media, Internet groups), forms, subject areas, tasks, etc.

Conceptual models

Cooper et al. (2007) distinguish between the citizen science model and the participatory action research model. The former uses citizens as data collectors, who in return receive recognition (including the results of the study). Participatory action research “begins with the interests of participants, who work collaboratively with professional researchers through all steps of the scientific process to find solutions to problems of community relevance. Finn (1994) outlined three key elements of participatory research: (1) it responds to the experiences and needs of the community, (2) it fosters collaboration between researchers and community in research activities, and (3) it promotes common knowledge and increases community awareness. Although citizen science can have research and education goals similar to many participatory action research projects (Finn 1994 and below), citizen science is distinct from participatory action research in that it occurs at larger scales and typically does not incorporate iterative or collaborative action.” However, some citizen science projects do actively try to engage citizens to participate, as we shall try to show below.

Wiggins and Crowston (2011) identified “five mutually exclusive and exhaustive types of projects, which [they] labelled Action, Conservation, Investigation, Virtual and Education. Action projects employ volunteer-initiated participatory action research to encourage participant intervention in local concerns. Conservation projects address natural resource management goals, involving citizens in stewardship for outreach and increased scope. Investigation projects focus on scientific research goals in a physical setting, while Virtual projects have goals similar to Investigation projects, but are entirely ICT-mediated and differ in a number of other characteristics. Finally, Education projects make education and outreach primary goals”.

Haklay (in press) distinguishes between two broad categories: classic citizen science and citizen cyberscience.

- Classic: Observation (e.g. birds), environmental management

- Citizen cyberscience: volunteered computing, volunteered thinking and participatory sensing.

In the same book chapter, Haklay (in press) then presents a framework for classifying the level of participation and engagement of participants in citizen science activities.

- Crowdsourcing: Citizens participate by providing computing power or by collecting data automatically, e.g. by carrying sensors. Cognitive engagement is minimal.

- Distributed Intelligence: Participants are asked to observe and collect data (e.g. birds) and/or carry out simple interpretation activities. A typical example of the latter is GalaxyZoo. Participants need some basic training.

- Participatory Science: Participants define the problem and, in consultation with scientists and experts, a data collection method is devised. Typical examples are environmental justice cases, but it also can occur in distributed intelligence settings when participants are allowed or encouraged to formulate new questions. In both cases researchers are in charge of detailed analysis.

- Extreme Citizen Science (collaborative science): Both non-professional and professional scientists are involved in identifying problems, data collections, etc. Participation level can change, i.e. participants may or may not be involved in analysis and publication of results. In this mode, scientists also should act as facilitators and not just experts.

Haklay argues that this ladder could be implemented in most projects. E.g. in volunteer crowdsourcing projects, most participants may just provide computing power. Others could help with technical problems, and still others could e.g. rewrite parts of the software or get in touch with scientists and suggest new research directions. Finally, citizen science also may encourage scientists to become citizens, i.e. consider how their projects could integrate with society in several ways. Both aspects are related to the “intriguing possibility is that citizen science will work as an integral part of participatory science in which the whole scientific process is performed in collaboration with the wider public.”

Empirical models

Wiggins and Crowston (2012) then created two typologies of citizen science projects, using data from 63 projects. A first typology is based on twelve participation tasks, i.e. Observation, Species identification, Classification or tagging, Data entry, Finding entities, Measurement, Specimen/sample collection, Sample analysis, Site selection &/or description, Geolocation, Photography, and Data analysis, plus the Number of tasks. The other typology is based on ten project goals, i.e. Science, Management, Action, Education, Conservation, Monitoring, Restoration, Outreach, Stewardship, and Discovery.

Participation clusters:

- Involve observation and identification tasks, but never require analysis. (13)

- Involve observation, data entry, and analysis, but include no locational tasks such as site selection or geolocation (6)

- Engage participants in a variety of tasks, the only participation task not represented is sample analysis (17)

- Engage the public in every participation task considered (15)

- Involve reporting, using observations, species identification, and data entry, but with very few additional participation opportunities (12)

Goal clusters:

- Afforded nearly equal weights (midpoint of the scale or higher) to each of the goal areas

- Most strongly focused on science

- Science is the most important goal, but education, monitoring, and discovery are only slightly less important on average

- Science, conservation, monitoring and stewardship are most important, while discovery is less valued than in the preceding clusters

- Outlier

For other typologies, see Wiggins and Crowston (2012).

Topics in the study of citizen science projects

Motivation

Motivation and motivation in education are complex constructs. A typical general model includes several components. E.g. Herzberg's (1959) five component model of job satisfaction includes achievement, recognition, work itself, responsibility, and advancement.

Some recent research by Raddick et al. (2010) about motivations of participants in cyberscience projects analyzed free forum messages and structured interviews. The first mentioned categories include the subject (astronomy), contribution and vastness. Learning (3%) and Science (1%) were minor. Looking at all responses, the same motivations - subject (46%), contribution (22%), vastness (24%) - still dominate, but other motivations exceed 10%, e.g. beauty (16%), fun (11%) and learning (10%).

It might also be interesting to look at personality traits of participants. E.g. Furnham et al. (1999), in a study about personality and work motivation, found that extraversion on the Eysenck Personality Profile correlates with a preference for Herzberg's motivators, whereas "neuroticism" correlates with so-called hygiene factors, i.e. the environment. In other words, extraverts tend to be motivated by intrinsic factors, whereas participants scoring high on neuroticism tend to be motivated by extrinsic ones.

Virtual organisations for citizen science

An important variant of citizen science uses citizens as helpers for research. “Citizen science is a form of organisation design for collaborative scientific research involving scientists and volunteers, for which internet-based modes of participation enable massive virtual collaboration by thousands of members of the public.” (Wiggins and Crowston, 2010:148). The authors argue that virtual organisations for citizen science differ somewhat from other virtual organisations: “The project level of group interaction is distinct from those of small work groups and organisations (Grudin, 1994), which has implications for organisation design efforts. Project teams and communities of practice can be distinguished by their goal orientation among other features (Wenger, 1999), but empirical observation of citizen science VOs to date indicates a hybrid ‘community of purpose’ might better describe many projects, with characteristics of both a project team and a community of practice or interest.” (p. 159).

Wiggins and Crowston (2010) then suggest a conceptual model of citizen science virtual organisations.

A new version of this model is under preparation, according to K. Crowston (email communication 1/2012).

Learning in citizen science projects

Luther et al. (2009) related learning in citizen science projects to Wikipedia and open source (OSS) participation: “researchers have identified some surprising commonalities between Wikipedia and OSS worth mentioning here. The first is legitimate peripheral participation (LPP), a theory of social learning which holds that novices in a community of practice may transform into experts by observing expert practices and taking on increasingly complex work (Lave & Wenger, 1991). In the case of Wikipedia, a user may begin by fixing typos, gradually making more substantial edits until she is writing entire articles from scratch (Bryant et al. 2005). In OSS, bug reporters may write a few lines of code to fix a glitch and eventually learn to code new modules”.

The CAISE report argues that “educational research shows that people have greater motivation to engage and learn if the subject matter is directly relevant to their lives and interests and/or if the learning process is interactive—one in which the learner can directly affect the learning process, content, and/or outcomes of the experience (Falk 2001)”.

According to Cooper et al. (2007), “Citizen science also provides informal learning experiences that improve science literacy (Krasny and Bonney 2005, Evans et al. 2005). Several studies have found that citizen science projects at the Cornell Laboratory of Ornithology have improved participants’ knowledge about biology and natural history, engaged them directly in the process of inquisitive thinking, and increased their ability to frame questions scientifically (Bonney and Dhondt 1997, Trumbull et al. 2000, 2005, Lewenstein 2001, Bonney 2004, Krasny and Bonney 2005).”

Sternberg (according to Lehtinen, 2010) points out that wisdom, i.e. the highest end of the continuum that goes from data to information, from information to knowledge, and from knowledge to wisdom, is not so much taught directly as learned as a by-product of activities in practical situations and in working or learning environments.

Citizen science and inquiry learning

According to van Joolingen et al. (2007), “science learning should also focus on the processes and methods used by scientists to achieve such results. One obvious way to bring students into contact with the scientific way of working is to have them engage in the processes of scientific inquiry themselves, by offering them environments and tasks that allow them to carry out the processes of science: orientation, stating hypotheses, experimentation, creating models and theories, and evaluation (de Jong 2006a). Involving students in the processes of science brings them into the closest possible contact with the nature of scientific understanding, including its strengths, problems and limitations (Dunbar 1999). This is the main claim of inquiry learning: engaging learners in scientific processes helps them build a personal knowledge base that is scientific, in the sense that they can use this knowledge to predict and explain what they observe in the natural world.”

Adaptation of citizen science projects to inquiry learning in formal settings

There is a fairly strong connection between some citizen science projects and inquiry learning projects conducted in formal school settings. Some of the latter participate in citizen science projects; others are somewhere in between (i.e. they may use or even produce authentic data). Adapting citizen science to a school context may not be easy, in particular when scientists outsource the development to curriculum developers who are not familiar with scientific inquiry.

Trumbull et al. (2005) describe the adaptation of Cornell Laboratory of Ornithology citizen science labs to the US National Science Education Standards, i.e. curricular content and tasks for classroom use. Evaluation of the first version of the Classroom FeederWatch (CFW) project did not show evidence of progress in scientific thinking as defined by the US education standards: “The evaluation data overall failed to support the claim that students learned inquiry abilities or developed understandings of inquiry as a result of participating in activities associated with CFW’s early materials.” (p. 887). The authors attribute the problems to several factors. First, teachers lacked understanding of both scientific inquiry and inquiry learning. The US standards seem too difficult to understand and translate into practice, and the authors also mention that the GenScope project was challenged in a similar way. Furthermore, students did not understand that their data were actually being used in real research and may therefore have been less motivated.

Probably the most interesting finding was that “the early versions of CFW featured a version of science and scientists that was too abstract and free of context. For example, the CFW exploration that directly addressed the scientific process presented the following sequence: formulate a question, predict an answer, develop procedures to gather information, collect and analyze data, communicate results, and raise new questions. As an abstraction, the description was adequate. For the purposes of teaching inquiry, the level of abstraction provided a simplistic, even distorted model of practical elements of scientific inquiry (Bencze & Hodson, 1999; Finley & Pocovi, 2000). The description was not adequate as a teaching strategy for learning how to conduct studies about birds. In particular, exploration narratives failed to explain how and why an ornithologist came to ask questions, which question got addressed, how she decided to gather data to address the question, or how he made sense of the data. There were, in short, no models that either students or teachers could examine that linked content (birds) to inquiry (bird studies).” (p. 898).

Last but not least, lack of birds and inability to identify or count birds were also major problems. Typically, bird lovers would not be challenged by either one. It's easy to build feeders to attract birds and bird counting is easy when one knows how to focus on particular features, called field marks.

The development team made three broad recommendations: (a) integrate content knowledge about birds and better explain inquiry, (b) provide discipline-specific models for conducting inquiry (e.g. ornithologists’ models for bird studies), and (c) assess outcomes mindful of broad rather than narrow definitions of inquiry to better reflect the Standards. In addition, the authors point out that there were rather severe communication problems between curriculum developers and ornithologists. The former lacked understanding of inquiry, and the latter did not understand the crucial role of the prior knowledge needed to plan and conduct bird studies.

In a different study on adapting an e-science environment, Waight and Abd-El-Khalick came to similar conclusions.

Lessons from inquiry learning designs and technology

Since many citizen science projects are quite ambitious, there might be a need for training users in a more formal way than participatory apprenticeship. Two or three decades of research in computer-supported inquiry learning may provide a few insights. According to van Joolingen et al. (2007), “Support for learning processes typically takes the form of cognitive tools or scaffolds. The basic idea of most cognitive tools is to boost the performance of learning processes by providing information about them, by providing templates, or by constraining the learner's interaction with the learning environment.” In a way, successful citizen learning platforms do exactly that. Besides the design of the environment, many other factors influence the learning process, in particular personality traits, prior knowledge (including understanding of inquiry), learner motivation, the teacher, the technological infrastructure, and clear assessment criteria.

Evaluation of informal science education

Informal science education refers mostly to top-down initiatives, large or small, that aim to raise interest in STEM (science, technology, engineering and mathematics) subjects. As an example, “The Informal Science Education (ISE) program at the National Science Foundation (NSF) invests in projects designed to increase interest in, engagement with, and understanding of science, technology, engineering, and mathematics (STEM) by individuals of all ages and backgrounds through self-directed learning experiences” (Ucko, 2008: 9).

Things to evaluate

Dierking (2008:19), a contributor to the US Framework for Evaluating Impacts of Informal Science Education Projects in Friedman (2008), suggests that a project should be able to answer at least the following questions at the outset:

- What audience impacts will this project facilitate?

- What approach/type of project will best enable us to accomplish these goals and why do we feel that this is the best approach to take?

- How will we know whether the activities of the project accomplished these intended goals and objectives and with what evidence will we support the assertion that they did?

- How will we ensure that unanticipated outcomes are also documented?

This US "Informal education and outreach framework" (Ucko 2008:11, Dierking 2008:21) identifies six impact categories with respect to both public audiences and professional audiences.

| Impact Category | Public Audiences | Professional Audiences | Generic Definition |
|---|---|---|---|
| Awareness, knowledge or understanding (of) | STEM concepts, processes, or careers | Informal STEM education/outreach research or practice | Measurable demonstration of assessment of, change in, or exercise of awareness, knowledge, understanding of a particular scientific topic, concept, phenomena, theory, or careers central to the project. |
| Engagement or interest (in) | STEM concepts, processes, or careers | Advancing informal STEM education/outreach field | Measurable demonstration of assessment of, change in, or exercise of engagement/interest in a particular scientific topic, concept, phenomena, theory, or careers central to the project. |
| Attitude (towards) | STEM-related topic or capabilities | Informal STEM education/outreach research or practice | Measurable demonstration of assessment of, change in, or exercise of attitude toward a particular scientific topic, concept, phenomena, theory, or careers central to the project or one’s capabilities relative to these areas. Although similar to awareness/interest/engagement, attitudes refer to changes in relatively stable, more intractable constructs such as empathy for animals and their habitats, appreciation for the role of scientists in society or attitudes toward stem cell research. |
| Behavior (related to) | STEM concepts, processes, or careers | Informal STEM education/outreach research or practice | Measurable demonstration of assessment of, change in, or exercise of behavior related to a STEM topic. These types of impacts are particularly relevant to projects that are environmental in nature or have some kind of a health science focus since action is a desired outcome. |
| Skills (based on) | STEM concepts, processes, or careers | Informal STEM education/outreach research or practice | Measurable demonstration of the development and/or reinforcement of skills, either entirely new ones or the reinforcement, even practice, of developing skills. These tend to be procedural aspects of knowing, as opposed to the more declarative aspects of knowledge impacts. Although they can sometimes manifest as engagement, typically observed skills include a level of depth and skill such as engaging in scientific inquiry skills (observing, classifying, exploring, questioning, predicting, or experimenting), as well as developing/practicing very specific skills related to the use of scientific instruments and devices (e.g. using microscopes or telescopes successfully). |
| Other | Project specific | Project specific | Project specific |

From this table, Dierking (2008:23) then derives a simple worksheet for "Developing Intended Impacts, Indicators & Evidence":

| ISE Category of Impact | Potential indicators | Evidence that impact was attained |
|---|---|---|
| Awareness, knowledge or understanding of STEM concepts, processes or careers | | |
| Engagement or interest in STEM concepts, processes, or careers | | |
| Attitude towards STEM-related topics or capabilities | | |
| Behavior resulting from experience | | |
| Skills based on experience | | |
| Other (describe) | | |

Types of evaluation