Methodology tutorial - theory-driven research designs

This is part of the methodology tutorial (see its table of contents).

Introduction

- Learning goals

- Understand the fundamental principles of theory-driven research

- Become familiar with some major approaches

- Prerequisites

- Moving on

- Methodology tutorial - quantitative data acquisition methods

- Methodology tutorial - quantitative data analysis

- Level and target population

- Beginners

- Quality / To do

- More or less OK in spirit. But some translations are needed, some phrases need to be rewritten, and bullets need to be transformed into real paragraphs.

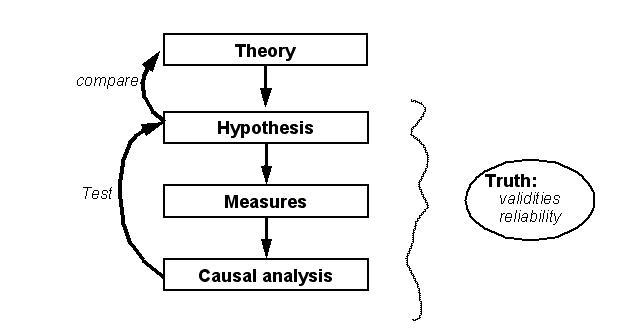

Overview of theory driven research

Most important elements of an empirical theory-driven design:

- Conceptualizations: each research question is formulated as one or more hypotheses. Hypotheses are grounded in theory.

- Measures: these are usually quantitative (e.g. experimental data, survey data, organizational or public "statistics", etc.) and make use of artifacts like surveys or experimental materials.

- Analyses & conclusion: hypotheses are tested with statistical methods.

Experimental designs

The scientific ideal

Experimental research is the ideal paradigm for empirical research in most natural science disciplines. It aims to control physical interactions between variables.

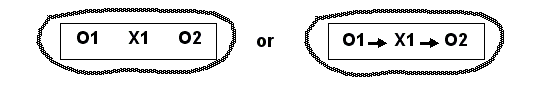

- Experimentation principle in science

- The study object is completely isolated from any environmental influence and observed (O1)

- A stimulus is applied to the object (X1)

- The object’s reactions are observed (O2).

We may draw a picture for this:

- O1 = observation of the non-manipulated object’s state

- X = treatment (stimulus, intervention)

- O2 = observation of the manipulated object’s state.

The effect of the treatment (X) is measured by the difference between O1 and O2.

In other words, an experiment can "prove" (corroborate) that an intervention X has an effect Y. X and Y are theoretical variables that are operationalized as follows: X becomes the intervention and Y becomes quantified measures of the effect.

The simple experiment in human sciences

In the human sciences (as well as in the life sciences) it is not possible to totally isolate a subject from its environment. Therefore we have to make sure that effects of the environment are either controlled or at least equally distributed over the experimental and the control group. Let's now look at a few strategies...

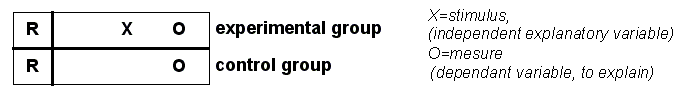

Simple experimentation using a control group:

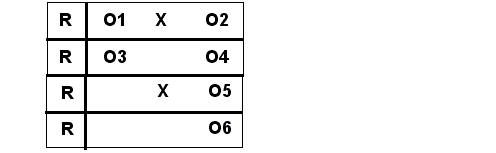

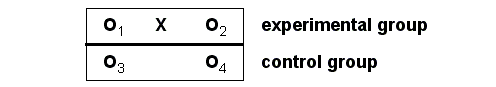

A simple control group design looks like this:

Principle:

- Two groups of subjects are chosen randomly (R) within a parent population. This ought to eliminate any systematic influence of unknown variables on one of the groups. In other words, we postulate that both groups are exposed to the same influence of the same uncontrolled variables.

- The independent variable (X) is manipulated by the researcher, who puts one group under an experimental condition, i.e. applies a treatment.

- Ideally, subjects should not be aware of the research goals since they might for example consciously or unconsciously want to influence the result.

- Analysis of results

We compare the effects of treatment (stimulus) vs. non-treatment in the two groups. The measure "O" is also called a post-test since we apply it after the treatment.

| Treatment | Effect (O) | No effect (O) | Total for the group |
|---|---|---|---|
| treatment (group X) | bigger | smaller | 100 % |
| non-treatment (group non-X) | smaller | bigger | 100 % |

We do a "vertical" comparison: the effect column is compared across the two groups.

Analysis questions are formulated in this spirit: what is the probability that treatment X leads to effect O? In the table above we can observe an experimentation effect: the share of subjects showing the effect is bigger in the experimental (treated) group than in the non-experimental group, and conversely the share showing no effect is smaller.
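As a minimal Python sketch of this post-test-only logic, assuming a binary outcome per subject (effect observed or not), the code below shuffles subjects into two groups and then compares the share of each group showing the effect, i.e. the "vertical" comparison of the table. The function names and all outcome counts are hypothetical illustrations, not data from a real study; deciding whether such a difference is larger than chance would still require a statistical test.

```python
import random


def assign_randomly(subjects, seed=None):
    """Randomly split subjects (R) into a treatment group (X) and a control group (non-X)."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def effect_share(outcomes):
    """Percentage of a group's subjects showing the effect (each group totals 100 %)."""
    return 100.0 * sum(outcomes) / len(outcomes)


# Step 1: random assignment of 40 hypothetical subjects.
group_x, group_non_x = assign_randomly([f"s{i:02d}" for i in range(40)], seed=42)
print(len(group_x), len(group_non_x))  # 20 20

# Step 2: hypothetical post-test outcomes O (True = effect observed).
outcomes_x = [True] * 14 + [False] * 6
outcomes_non_x = [True] * 8 + [False] * 12

# Step 3: "vertical" comparison of the effect column across the two groups.
pct_x = effect_share(outcomes_x)
pct_non_x = effect_share(outcomes_non_x)
print(f"effect in group X: {pct_x:.0f} %, in group non-X: {pct_non_x:.0f} %")  # 70 %, 40 %
```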

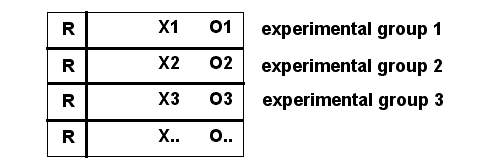

The simple experiment with different treatments is a slightly different design alternative, but similar in spirit.

Example: students are first assigned randomly to lab sessions that each use a different pedagogy (X), and we would like to know whether the effects at the end (O) differ.

Problems with simple experimentation

This "post-test" only design is not really optimal and for various reasons.

- Selection: Subjects may not be the same in the different groups. Since samples are typically very small (15-20 / group) this may have an effect

- Reactivity of subjects: Individuals ask themselves questions about the experiment and this leads to compensatory effects or they may otherwise change between observations

- Difficulty to control certain variables in a real context. Example: A new ICT-supported pedagogy may work better, because it stimulates the teacher, because students may increase their attention and amount of work, or simply because experimental groups may be smaller than in "real conditions" and individual student therefore get more attention.

In principle one could test such intervening variables with new experimental conditions, but for each new variable, one has to add at least 2 more experimental groups, something that is very costly. Let's now look at a more popular design...

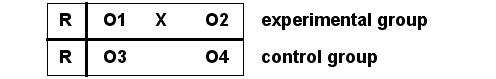

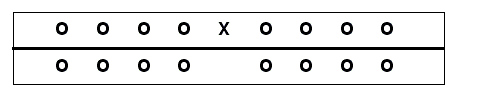

The simple experiment with pretests

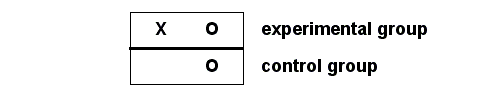

The following design attempts to control the difference that may exist between two experimental groups, i.e. when we don't trust randomization or can't randomly assign subjects to groups. This is typically the case when we select, for example, two classes in a school setting.

Here is the design:

- Analysis

To control the potential difference between groups, we compare the difference between O2 and O1 with the difference between O4 and O3:

effect = (O2 - O1) versus (O4 - O3)
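A minimal sketch of this comparison, using invented scores for two hypothetical classes (not data from any study): the gain of each group is computed, and subtracting the control gain from the experimental gain (a "difference of differences") is one common way to express "(O2 - O1) versus (O4 - O3)". Whether the remaining difference is statistically significant would still need to be tested.

```python
from statistics import mean


def gain(pretest, posttest):
    """Mean gain of one group between its pretest and its post-test."""
    return mean(posttest) - mean(pretest)


# Hypothetical test scores (0-20 scale) for two classes; illustrative numbers only.
o1 = [10, 12, 9, 11, 13]   # experimental class, pretest
o2 = [14, 15, 13, 15, 18]  # experimental class, post-test (after X)
o3 = [11, 13, 10, 12, 14]  # control class, pretest
o4 = [12, 14, 12, 13, 14]  # control class, post-test (no X)

gain_x = gain(o1, o2)        # (O2 - O1)
gain_control = gain(o3, o4)  # (O4 - O3)

print(f"O2 - O1 = {gain_x}, O4 - O3 = {gain_control}")
print(f"gain attributable to X: {gain_x - gain_control}")
```

Here the experimental class gains 4 points and the control class 1 point, so roughly 3 points could be attributed to X, provided the testing effect discussed next is not at play.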

This design also has disadvantages, in particular the first measurement itself can influence the outcome. Example: if X is supposed to increase a pedagogical effect, the O1 and O3 tests could have an effect of their own (students learn by doing the test), so you can't measure the "pure" effect of X.

This testing effect can be controlled with the Solomon design, which is similar in spirit but requires two extra groups and is therefore more costly.

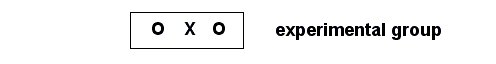

The Solomon design combines the simple experiment design with the pretest design:

We can test, for example, whether O2 > O1, O2 > O4, O5 > O6 and O5 > O3.
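The comparisons can be sketched in Python under an assumption: since the design schema is only available as an image, the code assumes the usual Solomon four-group layout in Campbell and Stanley notation (shown in the comments), and all mean scores are invented. Comparing the treatment effect in the pretested groups (O2 - O4) with the effect in the non-pretested groups (O5 - O6) is what lets this design detect a testing effect.

```python
# Assumed Solomon four-group layout (Campbell & Stanley notation):
#   group 1: R  O1  X  O2   (pretest, treatment, post-test)
#   group 2: R  O3     O4   (pretest, no treatment, post-test)
#   group 3: R      X  O5   (no pretest, treatment, post-test)
#   group 4: R         O6   (no pretest, no treatment, post-test)
# Hypothetical mean scores per observation point, for illustration only.
means = {"O1": 11.0, "O2": 15.0, "O3": 11.5, "O4": 12.5, "O5": 14.0, "O6": 12.0}

# The four comparisons mentioned in the text, as (expected larger, expected smaller).
comparisons = [("O2", "O1"), ("O2", "O4"), ("O5", "O6"), ("O5", "O3")]


def check_comparisons(means, comparisons):
    """Return, for each pair, whether the first observation exceeds the second."""
    return {f"{a} > {b}": means[a] > means[b] for a, b in comparisons}


results = check_comparisons(means, comparisons)
for label, holds in results.items():
    print(label, holds)

# Treatment effect with and without a pretest; a gap between the two
# suggests that the pretest itself interacts with the treatment.
effect_pretested = means["O2"] - means["O4"]      # 2.5
effect_not_pretested = means["O5"] - means["O6"]  # 2.0
print("pretest x treatment interaction:", effect_pretested - effect_not_pretested)  # 0.5
```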

Final note: simply comparing two different situations is NOT an experiment! The treatment variable X must be simple and one-dimensional (otherwise you don't know the precise cause of an effect). We shall come back to this problem below when we discuss quasi-experimental research designs.

There exist even more complicated designs to measure interaction effects of 2 or more treatments, but we shall stop here.

The non-experiment: what you should not do

Let's now look at bad designs, since they often can be found in the discourse of policy makers or early drafts of research proposals.

- The (non-)experiment without control group or pretest

This bad design looks like this:

We just look at data (O) after some event (X).

Example: a bad discourse on ICT competence of pupils: "Since we introduced ICT in the curriculum, most of the school's pupils are good at finding things on the Internet."

There is no real comparison!

- We don't compare with what happens in other schools that offer no ICT training. Maybe it is a general trend that pupils have become better at finding things on the Internet, since most households now have computers and Internet access.

- We don't even know what happened before!

A statement like "Most of the students are good!..." means that you don't compare with what happens in other settings that do not include ICT in their curriculum. It is therefore pretty worthless as proof that the introduction of ICT in schools had any effect.

| The variable to be explained (O) | x = ICT in school | x = no ICT in school |
|---|---|---|
| bad at web search | 10 students | ??? |
| good at web search | 20 students | ??? |

"Things have changed ..." means that you are not aware of the situation before the change.

| The variable to be explained (O) | before | after |
|---|---|---|
| bad at web search | ??? | 10 students |
| good at web search | ??? | 20 students |

A horizontal comparison of percentages (before vs. after) is impossible.

Now let's look at another bad design ...

Experiments without randomization or pretest

In the following design, the problem is that there is no control over the conditions and the evolution of the control group.

Example: "Computer animations used in school A are the reason for its better grade averages (compared to school B)." But school A may simply attract pupils from different socio-economic backgrounds who usually have better grades. Or, politically speaking: rich schools have the money to introduce computer animations, and they also attract better learners.

Finally, let's look at the experiment without control group:

- The experiment without control group

We don't know if X is the real cause.

Example: "Since I bought my daughter a lot of video games, she is much better at word processing." You don't know if this evolution is "natural" (kids always get better at word processing after using it a few times) or if she learnt it somewhere else. This is "natural evolution" or "statistical regression" of the population.

Experimental designs example

- Rebetez, C. (2004). Sous quelles conditions l’animation améliore-t-elle l’apprentissage ? [Under what conditions does animation improve learning?] Master Thesis (145 p.), MSc MALTT (Master of Science in Learning and Teaching Technologies), TECFA, University of Geneva.

The thesis we present here was written in French, but the author also wrote a Master thesis in cognitive psychology in English:

- Rebetez, C. (2006). Control and collaboration in multimedia learning: is there a split-interaction? Master Thesis, School of Psychology and Education, University of Geneva.

Note: this thesis was funded by a real research project, i.e. the student did more than is usually expected for an MA thesis.

The big research question: "Our research aims to show the influence of the continuity of the presentation flow, of collaboration and of the permanence of previous states, and to verify the impact of individual variables such as visual span and mental rotation abilities."

Original “Notre recherche a pour objectif de mettre en évidence l'influence, de la continuité du flux , de la collaboration , de la permanence des états antérieurs, ainsi que de vérifier la portée de variables individuelles telles que l’empan visuel et les capacités de rotation mentale. (p.33)”

The general objective is then further developed over one and a half pages in the thesis. Causalities are discussed in verbal form (pp. 34-40) and then "general" hypotheses are presented on two pages.

Explanatory (independent) variables, i.e. conditions

- Animation (static vs. dynamic condition): a dynamic presentation allows students to visualize transitions between states, whereas a static presentation forces them to imagine the movement of elements.

- Permanence (present or absent condition): if earlier states of the animation remain visible, students have better recall and can therefore more easily build up their model.

- Collaboration (present or absent condition): working together should allow students to create more sophisticated representations.
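If the three explanatory variables are fully crossed (an assumption: this summary does not state how the 160 students were allocated to conditions), the design contains 2 × 2 × 2 = 8 experimental conditions, which is what makes interaction hypotheses, e.g. between permanence and animation, testable. A small Python sketch that enumerates the cells:

```python
from itertools import product

# The three explanatory variables (factors) and their levels, as listed in the text.
factors = {
    "animation": ["static", "dynamic"],
    "permanence": ["absent", "present"],
    "collaboration": ["solo", "duo"],
}


def design_cells(factors):
    """All combinations of factor levels, assuming a fully crossed factorial design."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


cells = design_cells(factors)
for number, cell in enumerate(cells, start=1):
    print(number, cell)
print(len(cells), "experimental conditions")  # 2 x 2 x 2 = 8
```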

Operational hypotheses (presented in the methodology chapter, translated from French):

- Animation

- Inference scores as well as retention scores will be higher in the dynamic condition than in the static condition.

- Perceived cognitive load will be higher in the dynamic condition than in the static condition. There is no reason for discussion times or certainty levels to differ between the conditions.

- Permanence

- Participants in the condition with permanence will obtain better questionnaire results than participants in the condition without permanence. Inference results in particular are targeted by this effect.

- Perceived cognitive load should not differ between these two conditions. Discussion times and certainty levels should be higher with permanence than without.

- The influence of permanence will be all the greater when participants are in the dynamic presentation condition.

- Collaboration

- Collaboration will have a positive effect on learning, for retention as well as for inference. However, inference should benefit particularly in the case of "grounding". Participants in pairs (duo) will therefore obtain better scores than participants working alone (solo).

- Perceived cognitive load should follow the level of results and be lower in the duo condition than in the solo condition.

- Discussion times should naturally be longer in the duo condition. Certainty levels should also be higher in the duo condition than in the solo condition.

Method (short summary !)

Population: 160 students. All were tested to check that they were novices (i.e. that they lacked knowledge of the domain covered by the material).

Material:

- The pedagogical material consists of two different multimedia contents (geology and astronomy), each in two versions. For the dynamic condition there are 12 animations, for the static condition 12 static pictures.

- Contents of pedagogical material: "Transit of Venus" made with VRML, "Ocean and mountain building" made with Flash

- These media were integrated in Authorware (to take measures and to ensure a consistent interface)

Procedure (roughly, step by step)

- Pretest (5 questions)

- Introduction (briefing)

- For the solo condition: paper-folding and Corsi visuo-spatial tests

- Test with material

- Cognitive load test (NASA-TLX)

- Post-test (17 questions)

Measured dependent variables:

- Number of correct answers in a retention questionnaire.

- Number of correct answers in an inference questionnaire.

- Level of certainty of responses in both questionnaires.

- Subject cognitive load scores (measured with the NASA-TLX test)

- Paper-folding test score

- Visual span test score (Corsi)

- Time (seconds) spent on, and number of uses of, the vignettes in the "permanence" condition.

- Reflection time between presentations (seconds).

Quasi-experimental designs

It is difficult to carry out experiments in real settings, e.g. schools. However, there are so-called quasi-experimental designs, inspired by experimental design principles (pre- and post-tests, and control groups).

- Advantages

- Can be conducted in non-experimental situations, i.e. in "real" contexts

- Can be used when the treatment may become too "heavy", i.e. involves more than 2-3 well-defined treatment variables.

- Disadvantages

In quasi-experimental situations, you really lack control:

- You don’t know all possible stimuli (causes not due to experimental conditions)

- You can’t randomize (distribute evenly other intervening unknown stimuli over the groups)

- You may lack enough subjects

Nevertheless, quasi-experimental research can help to test all sorts of threats from variables that you cannot control. These are called threats to internal validity (see below).

- Usage examples in the social sciences

- Evaluation research

- Organizational innovation studies

- Questionnaire design for survey research (think about control variables to test alternative hypotheses)

There exist various designs. Some are easier to conduct, but they lead to less solid (valid) results. Let's examine a few...

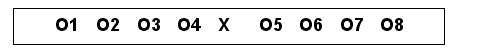

Interrupted time series design

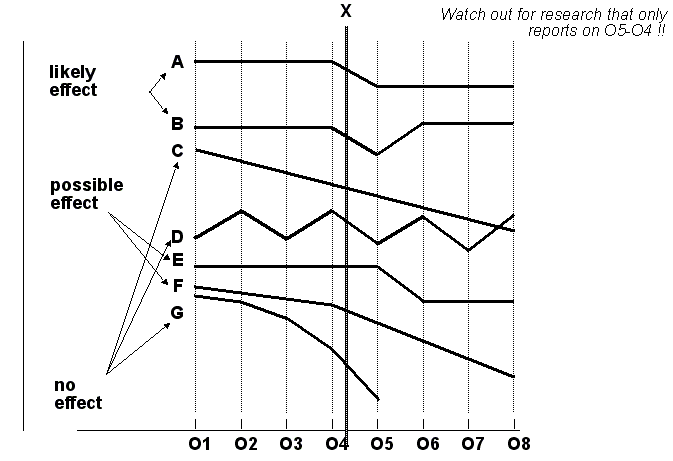

Here is a schema of the interrupted time series design, which attempts to control the effect of possible other events (treatments) on a single experimental group.

- Advantages

You can control (natural) trends to some extent. When you observe or introduce a treatment, e.g. a pedagogical reform, you may not be sure whether the reform itself had an effect or whether it was something else, like a general trend in the abilities of the student population. Observations over several periods before and after the treatment let you see whether such a trend was already under way.

- Problems

You can't control external simultaneous events (X2 happening at the same time as X1). Example concerning the effect of ICT-based pedagogies in the classroom: these ICT-based pedagogies may have been introduced together with other pedagogical innovations. So which one has an effect on overall performance?

- Practical difficulties

- Sometimes it is not possible to obtain data for past years

- Sometimes you don't have the time to wait long enough (your research ends too early, and decision makers never want to wait for long-term results). Example: ICT-based pedagogies often claim to improve meta-cognitive skills. Do you have tests for year-1, year-2, year-3? Can you wait for year+3? Can you wait even longer, i.e. test the same population when they reach university or jobs where meta-cognitive skills matter more?
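The trend-control idea of this design can be illustrated with a minimal Python sketch, assuming a straight-line trend and invented yearly data: a least-squares line is fitted to the observations before X, projected over the period after X, and the observed values are compared with the projection. The function names are hypothetical, and this check only separates a continuing trend from a change after X; it cannot rule out a simultaneous event X2.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def deviations_from_trend(series, x_index):
    """Fit the trend on observations before X, project it, return observed - projected after X."""
    a, b = linear_fit(list(range(x_index)), series[:x_index])
    return [series[t] - (a + b * t) for t in range(x_index, len(series))]


# Hypothetical yearly observations O1..O8; X happens between the 4th and 5th observation.
natural_trend = [50, 52, 54, 56, 58, 60, 62, 64]  # the trend simply continues
level_shift = [50, 52, 54, 56, 63, 65, 67, 69]    # the level jumps after X

print(deviations_from_trend(natural_trend, 4))  # [0.0, 0.0, 0.0, 0.0]: no sign of an effect
print(deviations_from_trend(level_shift, 4))    # [5.0, 5.0, 5.0, 5.0]: a possible effect of X
```

A naive before/after comparison of means would report an improvement in both series; only the projection of the pre-X trend shows that the first series is just following its natural evolution.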

Examples of time series

Now let's have an informal look at time series, i.e. measures that evolve over time and that can corroborate or invalidate hypotheses about an intervention X.

O1, O2, etc. are observation data (e.g. yearly); X is the treatment (intervention).

- A. a statistical effect is likely

- Example "Student’s drop-out rates are lower since we added forums to the e-learning content server"

- but attention: you don’t know if there was an other intervention at the same time.

- B. Likely Straw fire effect

- Teaching has improved after we introduced X. But then, things went back to normal

- So there is an effect, but after a while the cause "wears out". E.g. the typical motivation boost from ICT introduction in the curriculum may not last

- C. Natural trend (unlikely effect)

- You can control this error by looking beyond O4 and O5!

- D. Confusion between cycle effects and intervention

- Example: government introduced measures to fight unemployment, but you don’t know if they only "surf" on a natural business cycle. Control this by looking at the whole time series.

- E. Delay effect

- Example: high investments in education (may take decades to take effect)

- F. Trend acceleration effect,

- Difficult to distinguish from G: there is some change in the curve, but it may just be a variant of natural exponential evolution.

- G. Natural exponential evolution

- same as (C).

Threats to internal validity

The big question you should ask yourself over and over: what other variables could influence our experiments? Campbell and Stanley (1963) created an initial typology of threats to watch out for:

| Type of threat | Definition and example |
|---|---|
| history | An event other than X happens between measures. Example: ICT introduction happened at the same time as the introduction of project-based teaching. |
| maturation | The object changed "naturally" between measures. Example: did this course change your awareness of methodology, or was it simply the fact that you started working on your master thesis? |
| testing | The measure had an effect on the object. Example: your pre-intervention interviews had an effect on people (e.g. teachers changed behavior before you invited them to training sessions). |
| instrumentation | The method used to measure has changed. Example: reading skills are defined differently, e.g. newer tests favor text understanding. |
| statistical regression | Differences would have evened out naturally. Example: a school introduces new disciplinary measures after kids beat up a teacher. Maybe next year such events wouldn't have happened without any intervention. |
| (auto) selection | Subjects self-select for treatment. Example: you introduce ICT-based new pedagogies and results are really good (maybe only good teachers participated in these experiments). |
| mortality | Subjects are not the same. Example: a school introduces special measures to motivate "difficult kids". After 2-3 years dropout rates improve. Maybe the school is situated in an area undergoing rapid socio-demographic change (different people). |
| interaction with selection | Combinatory effects. Example: the control group shows a different maturation. |
| directional ambiguity | Is it the treatment or the subjects? Example: do workers show better output in "flat-hierarchy" / participatory / ICT-supported organizations, or do such organizations attract more active and efficient people? |
| diffusion or treatment imitation | Example: an academic unit promotes modern blended learning and attracts good students from a wide geographic area. A control unit may also profit from this effect. |
| compensatory equalization | The control group observes the experimental group. Example: subjects who don't receive the treatment react by changing their behavior. |

Let's now have a look at some designs that attempt to control such threats.

Non-equivalent control group design

This design compares two similar (but not equivalent) groups: an experimental group and a control group.


Advantages: Good at detecting other causes

- If O2 - O1 is similar to O4 - O3, we can reject the hypothesis that O2 - O1 is due to X.


Drawbacks and possible problems:

- Bad control of natural tendencies

- Finding (somewhat) equivalent groups is not easy

- You also may encounter interaction effects between groups, e.g. imitation.

Experimentation and imitation effects

Here is an example of an imitation effect. Course A introduces the intervention, course B doesn't:

| Effect | Course A introduces | Course B doesn't | |
|---|---|---|---|
| E1: costs | increase | stable | compare results |
| E2: student satisfaction | increases | increases | |
| E3: deadlines respected | better | stable | |

Review Question: Why does student satisfaction improve at the same time for B ?

Comparative time series

One of the most powerful quasi-experimental research designs uses comparative time series, i.e. it combines the interrupted time series design with the non-equivalent control group design presented above.

- Compare between groups (situations)

- Make series of pre- and post observations (tests)

Difficulties:

- Find comparable groups

- Find groups with more than just one or a few cases (!)

- Find data (in time in particular)

- Watch out for simultaneous interventions at point X.
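A minimal sketch of the comparative logic, assuming simple linear pre-X trends and invented yearly data for one treated group and one comparison group: for each series, the code measures how far the observations after X depart from that series' own pre-X trend, then subtracts the comparison group's departure from the treated group's. A departure that also appears in the comparison group (here 2 points) points to a general change or a simultaneous event rather than to X. All names and numbers are hypothetical.

```python
def linear_fit(xs, ys):
    """Ordinary least-squares line y = a + b * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def mean_deviation_after_x(series, x_index):
    """Mean of (observed - projected pre-X trend) over the observations after X."""
    a, b = linear_fit(list(range(x_index)), series[:x_index])
    deviations = [series[t] - (a + b * t) for t in range(x_index, len(series))]
    return sum(deviations) / len(deviations)


# Hypothetical yearly observations O1..O8; X occurs between the 4th and 5th
# observation, in the treated group only.
treated = [50, 52, 54, 56, 63, 65, 67, 69]
comparison = [40, 42, 44, 46, 50, 52, 54, 56]

dev_treated = mean_deviation_after_x(treated, 4)        # 5.0
dev_comparison = mean_deviation_after_x(comparison, 4)  # 2.0
print("net change attributable to X:", dev_treated - dev_comparison)  # 3.0
```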

Validity in quasi-experimental design

Let's now generalize our discussion a little and return to the causality issue already addressed in the Methodology tutorial - empirical research principles.

There are four kinds of validity according to Stanley et al.:


Internal validity concerns your research design

- You have to show that postulated causes are "real" (as discussed before) and that alternative explanations are wrong.

- This is the most important validity type.


External validity ... can you make generalizations?

- This is not easy, because you may not be aware of "helpful" variables, e.g. the "good teacher" you worked with or the fact that things were much easier in your private school...

- How can you provide evidence that your successful ICT experiment will be successful in other similar situations, or situations not that similar ?


Statistical validity ... are your statistical relations significant?

- This is not too difficult for simple analyses.

- Just make sure that you use the right statistics and believe them (see Methodology tutorial - quantitative data analysis).


Construct validity ... are your operationalizations sound?

- Did you get your dimensions right ?

- Do your indicators really measure what you want to know ?

Important: this typology is also useful for other settings, e.g. structured qualitative analysis or statistical designs. In most other empirical research designs you must address these issues.

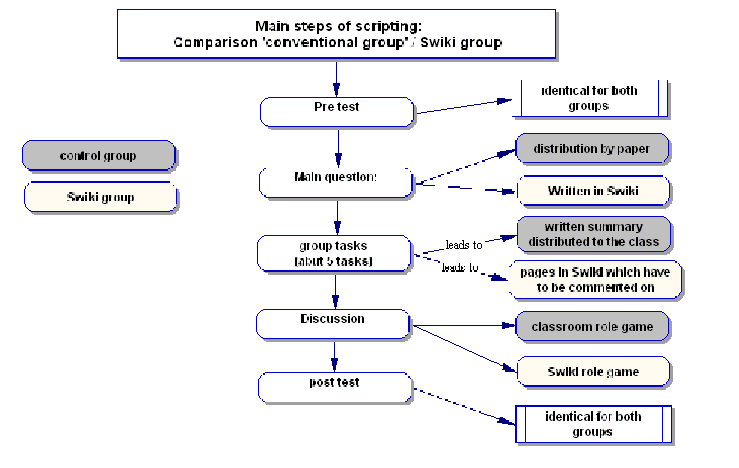

Quasi-experimental thesis example

Notari, Michele (2003). Scripting Strategies In Computer Supported Collaborative Learning Environments, Master Thesis, MSc MALTT (Master of Science in Learning and Teaching Technologies), TECFA, University of Geneva.

- This master thesis concerns the design and effects of ICT-supported activity-based pedagogies in a normal classroom setting

- Target: Biology at high-school level (various subjects)

Three research questions formulated as 'working hypotheses':

- The use of a Swiki as a collaborative editing tool causes no technical or comprehension problems (after a short introduction) for high school students without experience in collaborative editing but with some knowledge of common text-editing software and of searching for information on the Web.

- [Pedagogical] scripting which induces students to compare and comment on the work of the whole learning community (using a collaborative editing tool) leads to better learning performance (as assessed by pre- and post-testing) than a script leading students to work without such a tool and with little advice or / and opportunity to make comments and compare their work with the learning community.

- The quality of the product of the working groups is better (longer and more detailed) when students are induced to compare and comment on their work (with a collaborative editing tool) during the learning unit.

- Method

(Summary, quotations from thesis)

- The whole research took place in a normal curricular class environment. The classes were not aware of a special learning situation and a deeper evaluation of the output they produced.

- We tried to embed the scenarios in an absolutely everyday teaching situation and supposed students to have the same motivational state as in other lessons.

- To collect data we used questionnaires, observed students while working, and for one set up we asked students to write three tests.

- Of course the students asked about the purposes of the tests. We tried to motivate them to perform as well as they could without telling them the real reason of the tests.

- Notes

- This master thesis concerns several quasi-experiments, all in real-world settings.

- Below we just reproduce the settings for one of these.

- Several explanatory variables intervene in the example below (the procedure as a whole was evaluated, not variables as defined by experimentalism).

A sample "experiment" from Notari’s thesis:

Let's now look into so-called statistical designs, an approach that is typically used in "survey research".

Statistical designs

Statistical designs are also conceptually related to experimental designs:

- Statistical designs formulate laws

- there is no interest in individual cases (unless something goes wrong)

- You can test quite a lot of laws (hypotheses) with statistical data (your computer will do the calculations)

- Designs are based on prior theoretical reasoning, because

- measures are not all that reliable,

- what people tell may not be what they do,

- what you ask may not measure what you want to observe ...

- there is a statistical over-determination,

- you can find correlations between a lot of things !

- you can not get an "inductive picture" by asking a few dozen closed questions.

The dominant research design is conducted "à la Popper":

- You start by formulating hypotheses (models that contain measurable variables and relations)

- You measure the variables (e.g. with a questionnaire and/or a test)

- You then test relations with statistical tools

The most popular variant in educational technology is so-called "survey research".

Introduction to survey research

- A typical research plan looks like this

- Literature review leading to general research questions and/or analysis frameworks

- You may use qualitative methodology to investigate new areas of study

- Definition of hypotheses

- Operationalization of hypotheses, e.g. definition of scales and related questionnaire items

- Definition of the parent population

- Sampling strategies

- Identification of analysis methods

- Implementation

- Questionnaire building (preferably with input from published scales)

- Test of the questionnaire with 2-3 subjects

- Survey (interviews, on-line or written)

- Coding and data verification + scale construction

- Analysis

- Writing it up

- Compare results to theory

- Follow good practice in presenting and discussing results, but also make it readable

Levels of reasoning within a statistical approach

| Reasoning level | Variables | Cases | Relations (causes) |
|---|---|---|---|
| theoretical | concept / category | depends on the scope of your theory | verbal |
| hypothesis | variables and values (attributes) | parent population (students, schools, ...) | clearly stated causalities or co-occurrences |
| operationalization | dimensions and indicators | good enough sampling | statistical relations between statistical variables (e.g. composite scales, socio-demographic variables) |
| measure | observed indicators (e.g. survey questions) | subjects in the sample | |
| statistics | measures (e.g. response items to questions), scales (composite measures) | data (numeric variables) | |

(Just for your information. If it looks too complicated, ignore)

Typology of internal validity errors

Error of type 1: you believe that a statistical relation is meaningful ... but "in reality" it doesn’t exist.

- In technical terms: you wrongly reject the null hypothesis (no link between variables).

Error of type 2: you believe that a relation does not exist ... but "in reality" it does.

- E.g. you compute a correlation coefficient and the result shows that it is very weak. Maybe the relation was non-linear, or another variable causes an interaction effect...

- In technical terms: you wrongly accept (fail to reject) the null hypothesis.
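The non-linear case of the type 2 error example can be made concrete with a short Python sketch on invented data: y is completely determined by x, yet Pearson's linear correlation coefficient is exactly zero. The coefficient is written out by hand to show the formula; Python 3.10 and later also provide `statistics.correlation`.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson's linear correlation coefficient."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x ** 2 for x in xs]  # y depends entirely on x, but not linearly

print(pearson_r(xs, ys))  # 0.0: a linear coefficient "sees" no relation at all

# Transforming x to match the true form of the relation reveals it.
print(pearson_r([x * x for x in xs], ys))  # 1.0
```

Concluding "no relation" from the first coefficient would be exactly the type 2 error described above; plotting the data before computing coefficients is a cheap safeguard.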

There exist useful statistical methods to diminish the risks

- See statistical data analysis techniques

- Think !

Survey research examples

- See quantitative data gathering and quantitative analysis modules for some examples

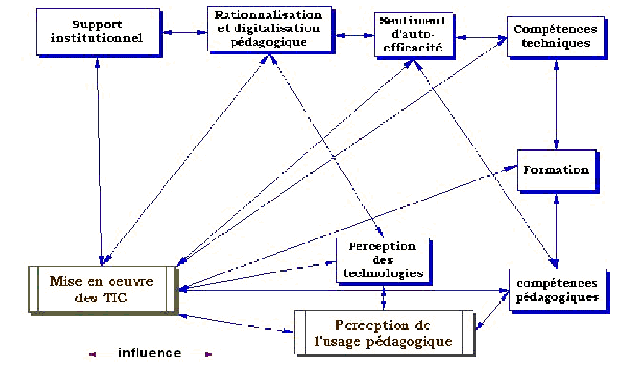

Etude pilote sur la mise en oeuvre et les perceptions des TIC [Pilot study on the implementation and perceptions of ICT]

- (Luis Gonzalez, DESS thesis 2004): Main goal: "Study factors that favor teachers' use of ICT". The author defines 8 factors and also postulates a few relationships among them.


Below we quote from the thesis (and not the research plan):

My principal hypothesis postulates the existence of a correlation between the following factors and teachers' use of ICT:

- The type of support offered by the institutions

- Teacher's pedagogical competences

- Teacher's technical competences

- ICT training received by teachers

- Teacher's feeling of self-efficacy

- Teacher's perception of technology

- Teacher's perception of ICT's pedagogical usefulness

- Teacher's digitalization (rationalization) practices with ICT

Secondary hypotheses are:

- Teacher's perception of ICT's pedagogical usefulness is correlated with pedagogical competences

- Teacher's perception of technology is correlated with perception of ICT's pedagogical usefulness

- Teacher's digitalization (rationalization) practices with ICT are correlated with perception of technology

- ICT training received by teachers is correlated with teachers' pedagogical competences and technical competences

- Teacher's feeling of self-efficacy is correlated with teacher's pedagogical competences and technical competences

- Teacher's digitalization (rationalization) practices with ICT are correlated with feeling of self-efficacy

- Sampling method

- Representative sample of future primary teachers (students), N = 48

- Non-representative sample of primary teachers, N = 38

- All teachers in Geneva with an email address were contacted; respondents self-selected (!)

- Note: the questionnaire was very long, and some teachers who started it dropped out partway through.

- This sort of sampling is acceptable for a pilot study or a small master's thesis.

- Questionnaire design

Each "conceptual domain" was defined (i.e. the main factors/variables identified from the literature, see above).

Item sets (questions) and scales were adapted from the literature where possible, e.g.:

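- L’échelle d’auto-efficacité [Self-efficacy scale] (Dussault, Villeneuve & Deaudelin, 2001)

- Enquête internationale sur les attitudes, représentations et pratiques des étudiantes et étudiants en formation à la profession enseignante au regard du matériel pédagogique ou didactique, informatisé ou non [International survey of the attitudes, representations and practices of student teachers regarding teaching materials, computer-based or not] (Larose, Peraya, Karsenti, Lenoir & Breton, 2000)

- Guide et instruments pour évaluer la situation d’une école en matière d’intégration des TIC [Guide and instruments for assessing a school's situation regarding ICT integration] (Basque, Chomienne & Rocheleau, 1998).

- Les usages des TIC dans les IUFM : état des lieux et pratiques pédagogiques [ICT use in the IUFM teacher-training institutes: current state and teaching practices] (IUFM, 2003).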

- Data collection

- Data were collected with an online questionnaire tool (the ESP program)

- Purification of the instrument

- For each item set, a factor analysis was performed, and indicators were constructed from groups of inter-correlated items (typically the first 2-3 factors were used). Note: if you use fully tested published scales, you do not need to do this!
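The thesis does not say here which extraction method was used. As a rough illustration of what "factor analysis of an item set" involves, the Python sketch below extracts the first principal component (one common way of obtaining a first factor) from the item correlation matrix by power iteration. The items q1 to q4 and the answers of eight respondents on a 1-4 scale are invented: q1 to q3 are meant to measure one concept, and q4 is unrelated. Items with high loadings would be grouped into one indicator. In practice, you would use a statistics package and examine several factors, possibly with rotation.

```python
import math


def correlation_matrix(columns):
    """Pearson correlations between item columns (each column = one item's answers)."""
    n = len(columns[0])
    standardized = []
    for col in columns:
        mean = sum(col) / n
        sd = math.sqrt(sum((v - mean) ** 2 for v in col) / (n - 1))
        standardized.append([(v - mean) / sd for v in col])
    k = len(columns)
    return [[sum(a * b for a, b in zip(standardized[i], standardized[j])) / (n - 1)
             for j in range(k)] for i in range(k)]


def first_component(R, iterations=500):
    """Largest eigenvalue of R and the corresponding loadings, via power iteration."""
    k = len(R)
    v = [1.0] * k
    for _ in range(iterations):
        w = [sum(R[i][j] * v[j] for j in range(k)) for i in range(k)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # Rayleigh quotient v'Rv gives the eigenvalue for the unit-length vector v
    eigenvalue = sum(v[i] * sum(R[i][j] * v[j] for j in range(k)) for i in range(k))
    # Loadings = eigenvector scaled by the square root of the eigenvalue
    loadings = [x * math.sqrt(eigenvalue) for x in v]
    return eigenvalue, loadings


# Hypothetical answers of eight respondents (1 = strongly disagree ... 4 = strongly agree)
items = {
    "q1": [1, 2, 2, 3, 3, 4, 4, 1],
    "q2": [1, 2, 3, 3, 4, 4, 4, 2],
    "q3": [2, 2, 2, 3, 3, 4, 3, 1],
    "q4": [2, 3, 2, 3, 2, 3, 2, 3],
}
R = correlation_matrix(list(items.values()))
eigenvalue, loadings = first_component(R)
print(f"eigenvalue of first component: {eigenvalue:.2f}")
for name, loading in zip(items, loadings):
    print(f"{name}: loading {loading:.2f}")
```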

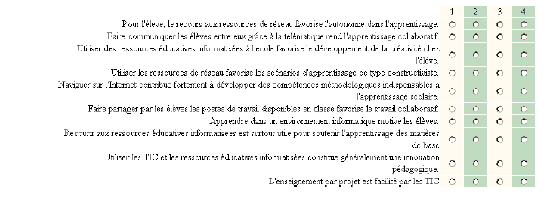

- Example - "perception of pedagogical ICT use"

In the questionnaire, this concept is measured by two question sets (scales).

Here we show one of these two question sets:

Question 34. PUP1: The following statements reflect opinions that are "strongly present" in governmental as well as "scientific" discourse on the use of computer-based educational resources in education. Indicate your degree of agreement with each of them.

(Strongly disagree = 1, Somewhat disagree = 2, Somewhat agree = 3, Strongly agree = 4)

Note: these 10 items and the 12 items from question 43 were later reduced to 3 indicators:

- Var_PUP1 - Degree of importance of mutual-help and collaboration tools for pupils

- Var_PUP2 - Degree of importance of communication tools between pupils

- Var_PUP3 - Agreement on what fosters constructivist-type learning
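Once items have been assigned to indicators, one simple way to compute an indicator score for each respondent is to average the items that belong to it. Factor scores are an alternative. The Python sketch below assumes a plain mean score. The item identifiers and the item-to-indicator mapping are hypothetical, because the thesis does not list them here. Unanswered items, e.g. from respondents who dropped out, are skipped.

```python
def indicator_scores(responses, mapping):
    """Average each indicator's items for one respondent.

    responses: item id -> answer on the 1-4 scale, or None if unanswered
    mapping:   indicator name -> list of item ids belonging to it
    Returns indicator name -> mean score, or None if no item was answered.
    """
    scores = {}
    for indicator, item_ids in mapping.items():
        answered = [responses[i] for i in item_ids if responses.get(i) is not None]
        scores[indicator] = sum(answered) / len(answered) if answered else None
    return scores


# Hypothetical mapping of question items to the three indicators
mapping = {
    "Var_PUP1": ["q34_1", "q34_4", "q43_2"],
    "Var_PUP2": ["q34_2", "q43_7"],
    "Var_PUP3": ["q34_5", "q34_9", "q43_10"],
}
# One hypothetical respondent who skipped item q43_7
respondent = {"q34_1": 4, "q34_4": 3, "q43_2": 3,
              "q34_2": 2, "q43_7": None,
              "q34_5": 4, "q34_9": 4, "q43_10": 3}
print(indicator_scores(respondent, mapping))
```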

We shall use this example again in the Methodology tutorial - quantitative data analysis.

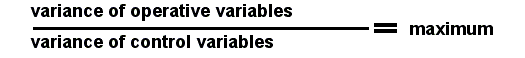

Similar comparative systems design

This design is popular in comparative public policy analysis. It can be used to compare educational systems of a few districts, states or countries.

Principle:

- Make sure there is good variance in the "operative variables" (dependent and independent).

- Make sure that no other variable varies (i.e. that there are no hidden control variables that may produce effects).

In simpler words: select cases that differ with respect to the variables of interest to your research but are similar in all other respects.

E.g. if you want to measure the effect of ICT, don't select a prestigious school that uses ICT and a normal school that doesn't. Stick to either prestigious schools or "normal" schools; otherwise you can't tell whether it was ICT that made the difference ...
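This selection rule can be expressed as a simple filter. The Python sketch below uses four hypothetical schools. It keeps only pairs of cases that differ on the variable of interest (ICT use) but match on the control variable (school type). In a real study, the list of control variables would come from theory and the literature, and exact matching is rarely possible.

```python
from itertools import combinations


def similar_pairs(cases, variable, controls):
    """Pairs of cases that differ on `variable` but are identical on every control."""
    pairs = []
    for a, b in combinations(cases, 2):
        differs = a[variable] != b[variable]
        matches = all(a[c] == b[c] for c in controls)
        if differs and matches:
            pairs.append((a["name"], b["name"]))
    return pairs


# Hypothetical schools
schools = [
    {"name": "A", "type": "prestige", "ict": True},
    {"name": "B", "type": "prestige", "ict": False},
    {"name": "C", "type": "normal", "ict": True},
    {"name": "D", "type": "normal", "ict": False},
]
print(similar_pairs(schools, "ict", ["type"]))  # [('A', 'B'), ('C', 'D')]
```

The pair A/D is rejected: it differs on ICT use, but also on school type, so any difference could be due to either.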

Advantages and disadvantages of this method:

- Advantage: fewer reliability and construct validity problems

- Advantage: better control of "unknown" variables

- Disadvantage: worse external validity (results cannot be generalized)

- Disadvantage: weak or no statistical testing. Most often researchers just compare data but cannot provide statistically significant results, since there are too few cases.
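An exact permutation test of a correlation shows why so few cases rule out significance. This is a swapped-in illustration, not a method prescribed by this tutorial. With n cases, there are only n! possible pairings of the two variables. With 4 cases (4! = 24 pairings), even a perfect correlation yields a two-sided p-value of 2/24 ≈ 0.083, above the usual 0.05 threshold. The Python sketch below uses hypothetical, perfectly correlated data to show this, and to show that 6 cases are enough to reach significance.

```python
import math
from itertools import permutations


def pearson_r(xs, ys):
    """Pearson product-moment correlation of two equally long sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def permutation_p_value(xs, ys):
    """Exact two-sided permutation test of H0: no association between xs and ys."""
    observed = abs(pearson_r(xs, ys))
    count = 0
    total = 0
    for perm in permutations(ys):  # enumerates all n! pairings
        total += 1
        # small tolerance so that ties with the observed value are not lost to rounding
        if abs(pearson_r(xs, perm)) >= observed - 1e-12:
            count += 1
    return count / total


for n in (4, 6):
    xs = list(range(1, n + 1))
    ys = list(range(1, n + 1))  # hypothetical, perfectly correlated outcome
    print(f"n = {n}: p = {permutation_p_value(xs, ys):.4f}")
```

Full enumeration grows as n!, so for larger samples permutations are usually sampled at random instead.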

Summary of theory-driven designs discussed

In this tutorial we presented some important theory-driven research designs. The table below summarizes them, with a few typical use cases. Other theory-driven designs exist, e.g. simulations.

| Approach | Some usages |
|---|---|
| See Experimental designs | |
| See Quasi-experimental designs | |
| See Statistical designs | |
| See Similar comparative systems design | |

Of course, you can combine these approaches within a research project. You may also use different designs to look at the same question in order to triangulate answers.

Bibliography

- Campbell, D. T., & Stanley, J. C. (1963). Experimental and quasi-experimental designs for research on teaching. In N. L. Gage (Ed.), Handbook of research on teaching. Boston: Houghton.

- Campbell, D. T., & Stanley, J. C. (1966). Experimental and quasi-experimental designs for research. Chicago: Rand McNally. (The revised original; it remains the reference for quasi-experimental research.)

- Cook, T. D., & Campbell, D. T. (1979). Quasi-experimental design. Chicago: Rand McNally.

- Dawson, T. E. (1997, January 23–25). A primer on experimental and quasi-experimental design. Paper presented at the Annual Meeting of the Southwest Educational Research Association, Austin, TX.

- Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Boston, MA: Houghton Mifflin.