Methodology tutorial - quantitative data acquisition methods
Revision as of 18:28, 18 October 2008



This is part of the methodology tutorial (see its table of contents).

Introduction

- Learning goals

- Learn to discriminate between different data sources

- Learn the basic steps of questionnaire design

- Understand that measures of behavior (or of perceived behavior) are better than opinions in most cases.

- Learn some principles about phrasing questions and how to create response items

- Understand that you should find similar research and reuse questionnaires whenever you can.

- Prerequisites

- Methodology tutorial - empirical research principles

- Methodology tutorial - theory-driven research designs, in particular statistical designs

- Moving on

- Methodology tutorial - quantitative data analysis

- Methodology tutorial - qualitative data acquisition methods

- Level and target population

- Beginners

- Quality

- Somewhat ok. However, reading this will probably not help you to create a decent questionnaire. It's harder than you may think and you do need advice from an expert ...

- To do

- Focus on other data than survey data (if this happens then we will split this entry)

In educational technology (as well as in most other social sciences) one works with a variety of quantitative data sources. See also the overview of "measurement techniques" in Methodology tutorial - empirical research principles.

- Researcher generated data, for example

- Tests, e.g. a measure of task performance

- Questionnaires

- Quantified qualitative observations of various kinds, e.g. texts written by students, transcribed interviews.

- Real data, for example

- Grades

- "Official and semi-official Statistics", e.g. aggregate population data

- Log files

- I.e. traces of user interactions with a system

- This includes data from tools specifically made for research or data that just "exist" (e.g. database entries for forum participations)

In this tutorial we focus on survey design and therefore on questionnaire design.

Basic Principles of questionnaire design

Below is a short recipe that you should follow.

- Make a list of concepts (theoretical variables) in your research questions for which you need data.

- For each of these concepts make sure that you identify its dimensions (or make sure that it is not multi-dimensional). If you don't know what "dimensions" are, go back and read about the operationalization of general concepts in Methodology tutorial - empirical research principles.

- Consult the research literature, i.e. find similar research that used questionnaires.

- Discuss with domain experts

- Make a list of conceptual variables (either simple concepts or dimensions of complex concepts)

- For each conceptual variable think about how you plan to measure it

- First of all (!!) go through the literature and find out if and how other people went about it

- It is much better to use a suitable published instrument than to build your own. You can then compare your results with earlier ones and you will have far fewer explanations and justifications to produce!

- Plan some measurement redundancy

- Do not measure a conceptual variable with just one question or observation. Use at least four questions

- Ask people what they actually do rather than how they would characterize what they do

- E.g. don’t ask: "Do you use socio-constructivist pedagogies ?" but ask several questions about typical tasks assigned to students.

- Do not ask people to confirm your research questions

- E.g. in a survey or test do not ask: "Did you manage to make your teaching more socio-constructivist with this new technology?" Instead (again), ask about or observe what the teacher really does and what the students had to do.

Finally, you could also triangulate survey data with data of a different nature, e.g. combine survey data with objective data and quantified observational data (like log file analysis) or even qualitative data like interviews with a few selected individuals.

Questionnaire design

Basic question and response item design

- Wording and contents of the questions

- Only ask questions that your target population understands

- Avoid addressing two issues in one question!

- Bad example: Did you like this system and didn't you have any technical problems with it?

- Make the questions as short as possible. Otherwise people won't read (or remember) the whole question.

- Ask several questions that measure the same concept

- Try by all means to find sets of published items (questions) in the literature that you can reuse

- Response items

- Avoid open-ended answers (these will give a lot of coding work)

- Use scales with at least 5 response options

- otherwise people will tend to drift to the "middle" and you will have hardly any variance.

- e.g. avoid:

agree () neither/nor () disagree ()

- Response options should ideally be consistent across items measuring the same concept

- If you feel that most people will check a "middle" value, use a large scale with an even number of options, i.e. without a middle point

e.g. 1=totally disagree, 10=totally agree: 1 2 3 4 5 6 7 8 9 10

Again: Use published scales as much as you can. This strategy will help you in various ways:

- You get better reliability (respondents' understanding of questions) since published items have been tested

- Scale construction will be easier and faster (you can skip computing Cronbach's alpha)

- It will make your results more comparable

- ....

- Testing

- You must test your questionnaire with at least 2 people !

- From my experience as a methodology crash course teacher I can say that I have never seen an even moderately acceptable questionnaire made in one go by a beginner student. Do not overestimate your skills! - Daniel K. Schneider

Example questionnaire: Social presence

Social presence is an important variable in (informed) distance education. We shall have a look at a study that tries to link social presence to learner satisfaction.

Gunawardena, C.N., & Zittle, F.J. (1997). Social presence as a predictor of satisfaction within a computer-mediated conferencing environment. The American Journal of Distance Education, 11(3), 8-26.

The GlobalEd questionnaire (which can be found in the Compendium of Presence Measures) was developed to evaluate a virtual conference. Participants (n=50) of the conference filled out the questionnaire. Internal consistency of the social presence scale was Cronbach's alpha = 0.88. Social presence was found to be a strong predictor of user satisfaction.

The questionnaire used the following 14 questions to measure social presence (the total questionnaire included 61 items):

- Messages in GlobalEd were impersonal

- CMC is an excellent medium for social interaction

- I felt comfortable conversing through this text-based medium

- I felt comfortable introducing myself on GlobalEd

- The introduction enabled me to form a sense of online community

- I felt comfortable participating in GlobalEd discussions

- The moderators created a feeling of online community

- The moderators facilitated discussions in the GlobalEd conference

- Discussions using the medium of CMC tend to be more impersonal than face-to-face discussion

- CMC discussions are more impersonal than audio conference discussions

- CMC discussions are more impersonal than video teleconference discussions

- I felt comfortable interacting with other participants in the conference

- I felt that my point of view was acknowledged by other participants in GlobalEd

- I was able to form distinct individual impressions of some GlobalEd participants even though we communicated only via a text-based medium.

A 5-point rating scale was used for each question
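The internal consistency figure reported above (Cronbach's alpha) can be computed from raw item scores. The following is only a minimal sketch: it assumes answers are coded 1 to 5 and that negatively worded items (such as "Messages in GlobalEd were impersonal") are reverse-coded first. The function names and the small response matrix are invented for illustration and are not data from the study.

```python
from statistics import pvariance


def reverse_code(score, low=1, high=5):
    """Flip a score on a low..high scale (1 becomes 5, 2 becomes 4, ...)."""
    return low + high - score


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])                      # number of items in the scale
    items = list(zip(*rows))              # one tuple of scores per item
    item_var_sum = sum(pvariance(col) for col in items)
    total_var = pvariance([sum(r) for r in rows])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers of 5 respondents to 3 items; item 0 is negatively worded.
raw = [
    [2, 4, 4],
    [1, 5, 5],
    [4, 2, 3],
    [3, 3, 3],
    [5, 1, 2],
]

# Reverse-code the negatively worded item before computing alpha.
coded = [[reverse_code(r[0])] + r[1:] for r in raw]
alpha = cronbach_alpha(coded)
print(f"alpha = {alpha:.2f}")
```

Forgetting the reverse-coding step is a common reason for a surprisingly low (or even negative) alpha.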

Example questionnaire: socio-constructivist teachers

Class (and Schneider) 2005, PhD thesis project (scale based on Dolmans 2004)

The problem was how to identify socio-constructivist elements in a distance teaching course for interpreter trainers.

Decomposition of “socio-constructivist design” into (1) active or constructive learning, (2) self-directed learning, (3) contextual learning, (4) collaborative learning and (5) teacher’s interpersonal behavior (according to Dolmans et al., 1993)

Note that the headers for these dimensions (e.g. "Constructive/active learning") are not shown to the subjects. We do not want them to reflect about theory, but just to answer the questions. The headers are shown below only to help your understanding.

| No. | Statements: Teachers stimulated us ... | Totally disagree (1) | Disagree (2) | Somewhat agree (3) | Agree (4) | Totally agree (5) |
|---|---|---|---|---|---|---|
| | ( Constructive/active learning ) | | | | | |
| 4 | ... to search for explanations during discussion | O | O | O | O | O |
| 5 | ... to summarize what we had learnt in our own words | O | O | O | O | O |
| 6 | ... to search for links between issues discussed in the tutorial group | O | O | O | O | O |
| 7 | ... to understand underlying mechanisms/theories | O | O | O | O | O |
| 8 | ... to pay attention to contradictory explanations | O | O | O | O | O |
| | ( Self-directed learning ) | | | | | |
| 9 | ... to generate clear learning issues by ourselves unclear | O | O | O | O | O |
| 10 | ... to evaluate our understanding of the subject matter by ourselves | O | O | O | O | O |
| | ( Contextual learning ) | | | | | |
| 11 | ... to apply knowledge to the problem discussed | O | O | O | O | O |
| 12 | ... to apply knowledge to other situations/problems | O | O | O | O | O |
| 13 | ... to ask sophisticated questions | O | O | O | O | O |
| 14 | ... to reconsider earlier explanations | O | O | O | O | O |
| | ( Collaborative learning ) | | | | | |
| 15 | ... to think about our strengths and weaknesses concerning our functioning in the tutorial group | O | O | O | O | O |
| 16 | ... to give constructive feedback about our group work | O | O | O | O | O |
| 17 | ... to evaluate our group cooperation regularly | O | O | O | O | O |
| 18 | ... to arrange meetings with him/her to discuss how to improve our functioning as a group | O | O | O | O | O |
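Once answers are coded, the items of each dimension are usually combined into one scale score per respondent. The sketch below assumes the score is simply the mean of the item codes (1 to 5), which is one common choice and not necessarily what the original study did. The item numbers per dimension come from the table above; the dictionary keys, function names and the sample respondent are hypothetical. It also flags dimensions that fall short of the "at least four questions" rule of thumb given earlier.

```python
from statistics import mean

# Item numbers per dimension, as grouped in the table above.
DIMENSIONS = {
    "constructive_active": [4, 5, 6, 7, 8],
    "self_directed": [9, 10],
    "contextual": [11, 12, 13, 14],
    "collaborative": [15, 16, 17, 18],
}

MIN_ITEMS = 4  # rule of thumb from this tutorial: at least four questions


def scale_scores(answers):
    """Mean response code per dimension; answers maps item number to code."""
    return {
        dim: mean(answers[item] for item in items)
        for dim, items in DIMENSIONS.items()
    }


def thin_dimensions():
    """Dimensions measured with fewer than MIN_ITEMS questions."""
    return [dim for dim, items in DIMENSIONS.items() if len(items) < MIN_ITEMS]


# Hypothetical respondent: "Agree" (4) everywhere except items 9 and 10.
answers = {item: 4 for item in range(4, 19)}
answers.update({9: 2, 10: 3})

scores = scale_scores(answers)
print(scores)
print("Too few items:", thin_dimensions())
```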

General questionnaire design issues

- The Introduction

(applies to both written questionnaires on paper or on-line surveys)

- You should write a short introduction that states the purpose of the questionnaire

The introduction should also:

- guarantee that you will only publish statistical data (no names!)

- specify how long it will take to fill in the questionnaire

- Ergonomics

- Do not include anything else than questions and response items (besides the introduction)

- Make sure that people understand where to "tick".

- Coding information for the researcher

- Assign a code (e.g. number) to each question item (variable) and assign a number (code) to each response item

- see the example tables above and below

- This will help you when you transcribe data or analyze data

- use "small fonts" (this information is for you)

- Example of a question set

Below is a question with several sub-questions about teachers' behavior. You can see that each question is numbered and the response items have codes. These are irrelevant for the user, but useful for data transcription and also to map variables in your statistics program to the questions and response items.

Below you will find general statements about teachers' behavior. Please indicate to what extent you agree or disagree with them. Please tick the appropriate circle on the scale (totally disagree - totally agree) for each statement.

| No. | Statements: Teachers stimulated us ... | Totally disagree (1) | Disagree (2) | Somewhat agree (3) | Agree (4) | Totally agree (5) |
|---|---|---|---|---|---|---|
| 4 | ... to search for explanations during discussion | O | O | O | O | O |
| 5 | ... to summarize what we had learnt in our own words | O | O | O | O | O |
| 14 | ... to reconsider earlier explanations | O | O | O | O | O |
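The question numbers and response codes printed on the questionnaire can be mirrored in a small codebook that is used when transcribing paper forms. This is only a sketch under assumptions: the variable names (`q4`, `q5`, `q14`), the `transcribe` function and the choice to store unanswered questions as `None` are illustrative and not part of the original instrument.

```python
# Response codes as printed above the scale.
RESPONSE_CODES = {
    1: "Totally disagree",
    2: "Disagree",
    3: "Somewhat agree",
    4: "Agree",
    5: "Totally agree",
}

# Hypothetical variable names, one per numbered question item.
QUESTIONS = {
    "q4": "... to search for explanations during discussion",
    "q5": "... to summarize what we had learnt in our own words",
    "q14": "... to reconsider earlier explanations",
}


def transcribe(sheet):
    """Turn one filled-in sheet (variable -> ticked code) into a clean record.

    Unanswered questions are stored as None; codes outside the scale are
    rejected so that typing errors are caught at transcription time.
    """
    record = {}
    for var in QUESTIONS:
        code = sheet.get(var)
        if code is not None and code not in RESPONSE_CODES:
            raise ValueError(f"{var}: invalid response code {code!r}")
        record[var] = code
    return record


record = transcribe({"q4": 5, "q5": 3})
print(record)
```

Keeping the same variable names in the statistics program makes it easy to trace every column back to a question.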

Experiments

- Designing a true experiment needs advice from an expert. A typical beginner's mistake is to let two experimental conditions differ by more than one variable!

There are many kinds of experimental measures

- observations (e.g. Video, or recordings of computer input)

- tests (similar to surveys)

- tests (similar to examination questions)

- tests (performance in seconds)

- tests (similar to IQ tests)

Consider all the variables you want to measure

- most of the time, the dependent variables (to be explained) are measured with tests

- usually the independent (explanatory) variables are defined by the experimental conditions (so you don’t need to measure anything, just remember to which experimental group the subject belonged)

See the literature !!

- First of all, read articles about similar research !

- Consult test psychologists if you need to measure intellectual performance, personality traits, etc.

- Use typical school tests if you want to measure typical learning achievement

Sampling

The ground rules

The number of cases you need is essentially an absolute number

- it therefore does not depend on the size of the total "population" you study

The best sampling is random, because:

- you are likely to find representatives of each "kind" in your sample

- you avoid self-selection (i.e. that only "interested" persons answer your survey or submit to experiments)

When you work with small samples, you may use a quota system

- e.g. make sure that you have both "experts" and "novices" in a usability study of some software

- e.g. in a study on classroom use of new technologies, make sure that you include (a) both teachers who are enthusiastic users and teachers who are not, and (b) both well-equipped and poorly equipped schools.
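The two sampling strategies above can be sketched in a few lines. This is an illustration only: the sampling frame, the "expert"/"novice" labels and the function names are hypothetical, and the quota version simply draws at random within each group.

```python
import random


def simple_random_sample(population, n, seed=None):
    """Draw n cases at random, without replacement."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def quota_sample(population, group_of, quotas, seed=None):
    """Draw a fixed number of cases at random from each group."""
    rng = random.Random(seed)
    sample = []
    for group, n in quotas.items():
        members = [p for p in population if group_of(p) == group]
        sample.extend(rng.sample(members, n))
    return sample


# Hypothetical sampling frame: 100 users, 20 of them experts.
people = [
    {"id": i, "level": "expert" if i % 5 == 0 else "novice"}
    for i in range(100)
]

drawn = simple_random_sample(people, 10, seed=1)
balanced = quota_sample(
    people, lambda p: p["level"], {"expert": 5, "novice": 5}, seed=1
)
print(len(drawn), sum(p["level"] == "expert" for p in balanced))
```

With a small random sample the number of experts can vary a lot from draw to draw, which is why the quota version fixes it in advance.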

A first look at significance

Significance of results depends both on the strength of correlations and on the size of samples

Therefore: the more cases you have, the more likely it is that your results will be interpretable!

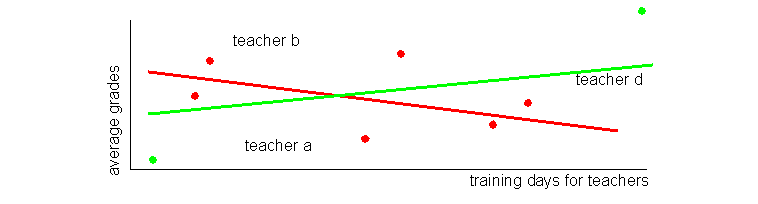

Let’s assume that initially you have data from only 6 teachers (the red dots). Your data suggest a negative correlation: more training days go with worse averages.

Now let's see what happens if we only add 2 new teachers (the 2 green dots). The observed relation will switch from negative to positive, i.e. the data suggests a (weak) positive correlation.

So: doing a statistical analysis on very small data sets is like gambling. If your data set had included 20 teachers or more, adding these 2 "green" individuals wouldn’t have changed the relationship. This is why (expensive) experimental research usually attempts to have at least 20 subjects in a group.
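The sign flip described above is easy to reproduce. The numbers below are invented for illustration (they are not read from the figure): six "red" teachers with a negative relation between training days and class averages, and two added "green" teachers. The sketch computes Pearson's r before and after.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical data: training days and class averages for 6 teachers ("red").
days_red = [1, 2, 3, 4, 5, 6]
avg_red = [4, 4, 3, 4, 3, 3]

# Two additional teachers ("green").
days_green = [7, 8]
avg_green = [5, 5]

r_before = pearson_r(days_red, avg_red)
r_after = pearson_r(days_red + days_green, avg_red + avg_green)

print(f"6 teachers: r = {r_before:+.2f}")
print(f"8 teachers: r = {r_after:+.2f}")
```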

Typical sampling for experiments

Sampling for experiments is a simpler art. You should have

- preferably 20 subjects / experimental condition

- at least 10 / experimental condition (but expect most relations to be non-significant)

Example - The effect of multimedia on retention

The model includes three variables

- Explanatory (independent) variable X: Static diagrams vs. animation vs. interactive animation

- Dependent (to be explained) variable Y1: Short-term recall

- Dependent (to be explained) variable Y2: Long-term recall

Both dependent variables (Y1 and Y2) can be measured by recall tests

For variable X we have three conditions. Therefore we need 3 * 20 = 60 subjects

- If you expect very strong relations (don’t for this type of research !) you can get away with 3 * 15

Note: we cannot administer the three different conditions to each individual (because by moving from one experiment to another they will learn). You may, however, consider building 3 * 3 = 9 different kinds of experimental materials and have each individual do each experiment in a different condition. However, they may get tired or show other experimentation effects, and producing good material is more expensive than finding subjects.
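For the multimedia example above, the required number of subjects and a balanced allocation to conditions can be sketched as follows. Random assignment is a standard practice that the text does not spell out, so treat it as an assumption of this sketch; the function name, the seed and the subject identifiers are hypothetical.

```python
import random
from collections import Counter

CONDITIONS = ["static diagrams", "animation", "interactive animation"]
SUBJECTS_PER_CONDITION = 20


def assign(subject_ids, conditions, seed=None):
    """Shuffle subjects, then deal them out to conditions in turn."""
    rng = random.Random(seed)
    shuffled = list(subject_ids)
    rng.shuffle(shuffled)
    return {
        subject: conditions[i % len(conditions)]
        for i, subject in enumerate(shuffled)
    }


# 3 conditions * 20 subjects each
needed = len(CONDITIONS) * SUBJECTS_PER_CONDITION
assignment = assign(range(1, needed + 1), CONDITIONS, seed=42)
group_sizes = Counter(assignment.values())

print(needed, dict(group_sizes))
```

The resulting dictionary is also the record of "which experimental group the subject belonged to", i.e. the value of the independent variable X for each subject.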

Typical samples for survey research

Try to get as many participants as you can if you use written or on-line surveys. Dealing with on-line data doesn't cost very much, but you certainly will get a sampling bias.

40 participants is a minimum, 100 is good and 200 is excellent for an MSc thesis.

Otherwise you can’t do any sort of interesting data analysis, because your p-values will be too high (i.e. your results will not be significant) when you analyze even moderately complex relationships.

Typical samples for aggregate data

e.g. schools, districts, countries etc.

Since these data reflect real "realities" you can do with fewer cases (however, talk to an expert; a lot depends on the kinds of analysis you plan to do).