Methodology tutorial - design-oriented research designs


Research Design for Educational Technologies - Design oriented approaches

This is part of the methodology tutorial (see its table of contents).


Further reading: see the design methodologies category for a list of design-related articles in this wiki.

Key elements of a design-oriented approach:

The global picture

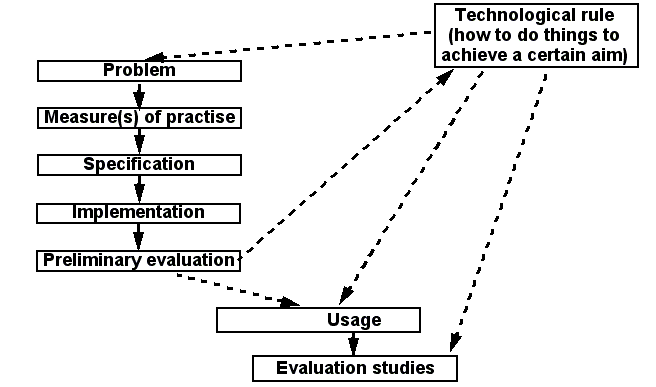

Typical ingredients or steps of design research can be summarized with this picture (Pertti Järvinen, 2004).

- The idea is to investigate at least one of the dotted lines in the picture.

- A technological rule, i.e. a theory on how to do things, can be the research's input, output, or both.

- Technological rules

- ... tell you how to do things and are dependent on other theories (and beliefs)

- Bunge (quoted by Järvinen, 2004: 99): "A technological rule: an instruction is defined as a chunk of general knowledge, linking an intervention or artifact with a desired outcome or performance in a certain field of application".

- Types of outcomes (artifacts, interventions)

- Constructs (or concepts) form the "language" of a domain

- Models are sets of propositions expressing relationships among constructs

- Methods are sets of steps to perform a task (e.g. guidelines, algorithms)

- Instantiations are realizations of an artifact in its environment

- Types of research

- Build: Demonstrate feasibility of an artifact or intervention

- Evaluate: Development of criteria, and assessment of both artifact building and artifact usage

- What does this mean ?

- There are 4 × 2 = 8 ways to conduct interesting design research: each of the four outcome types can be built or evaluated.

- Usually, it is not the artefact you build (e.g. a software program) that is interesting, but something behind it (constructs, models, methods) or around it (usage).
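The 4 × 2 space of design research described above (two research activities applied to four outcome types, after Järvinen, 2004) can be enumerated with a few lines of code. This is only an illustrative sketch: the labels are copied from the lists above, and the function name `design_research_options` is hypothetical.

```python
from itertools import product

# Research activities and outcome types as listed above (after Järvinen, 2004).
ACTIVITIES = ["build", "evaluate"]
OUTCOMES = ["constructs", "models", "methods", "instantiations"]


def design_research_options():
    """Return every (activity, outcome) pair, i.e. the 4 x 2 cells of the design space."""
    return list(product(ACTIVITIES, OUTCOMES))


if __name__ == "__main__":
    for activity, outcome in design_research_options():
        print(f"{activity} {outcome}")
```

Each printed pair (e.g. "evaluate models") names one possible focus for a design research project.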

Examples of design rules

Below we present a few examples of design rules (output of research) that are popular in educational technology. See the instructional design method article for more examples.

As you can see, design (or its essence in terms of design rules) can be expressed in various ways:

- As a concept map

- As a list

- As a UML diagram

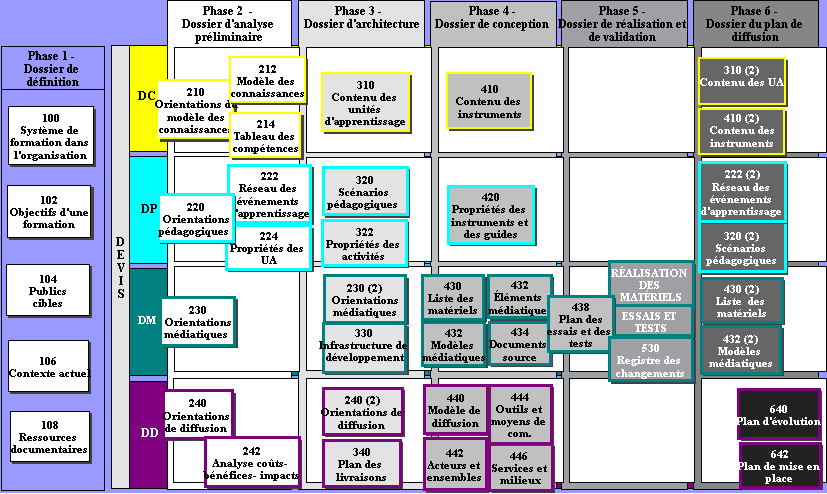

The MISA instructional design method

MISA is an instructional engineering method that graphically describes the instructional design processes and their products, which together completely define a learning system. MISA supports 35 main tasks or processes and around 150 subtasks. The method has been fully represented within the MOT knowledge editor.

A course designer works on "4 models":

- Knowledge and Skill Representation. DC: Design of Content (know-that and know-how)

- Application of Teaching Methods and Approaches. DP: Design of Pedagogical specifications

- Specification of Learning Materials. DM: Design of Materials

- Delivery Planning. DD: Design of Delivery

The 4 components, spread over the 6 phases, lead to the 35 main tasks.

Using such a method is worth the effort:

- if you plan to do it right (e.g. buy the MOT editor)

- if you focus on a whole course instead of difficult problems

- if you plan to train yourself in instructional design

It is not clear to us which parts of this model are based on research, or whether it represents an engineering doctrine that aims to provide rules an educational designer should follow.

Gagné’s 9 steps of instruction for learning

Gagné's nine events of instruction represent a set of nine sequential rules specifying the contents of a "good" lesson (unit of learning). They are grounded in a behaviorist-cognitivist theory of instruction.

- Gain attention: e.g. present a good problem or a new situation, or use a multimedia advertisement.

- Describe the goal: e.g. state what students will be able to accomplish and how they will be able to use the knowledge; give a demonstration if appropriate.

- Stimulate recall of prior knowledge: e.g. remind students of prior knowledge relevant to the current lesson (facts, rules, procedures or skills). Show how knowledge is connected and provide a framework that helps learning and remembering. Tests can be included.

- Present the material to be learned: e.g. text, graphics, simulations, figures, pictures, sound, etc. Chunk information (avoid memory overload, recall information).

- Provide guidance for learning: presentation of content is different from instructions on how to learn, so use a different channel for guidance (e.g. side-boxes).

- Elicit performance ("practice"): let the learner do something with the newly acquired behavior, practice skills or apply knowledge. At least use MCQs.

- Provide informative feedback: show the correctness of the trainee’s response, analyze the learner’s behavior, and perhaps present a good (step-by-step) solution to the problem.

- Assess performance: test whether the lesson has been learned. Sometimes also give general progress information.

- Enhance retention and transfer: inform the learner about similar problem situations and provide additional practice. Put the learner in a transfer situation. Perhaps let the learner review the lesson.
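Because the nine events are presented as a sequence, a lesson plan can be checked against them mechanically. The following is a minimal sketch, assuming a lesson plan is written as a list of event names in lesson order; the short event labels and the function `check_lesson_plan` are hypothetical, not part of Gagné's model.

```python
# Gagné's nine events, in the order given above (short labels chosen for this sketch).
GAGNE_EVENTS = [
    "gain attention",
    "describe the goal",
    "stimulate recall of prior knowledge",
    "present the material",
    "provide guidance for learning",
    "elicit performance",
    "provide informative feedback",
    "assess performance",
    "enhance retention and transfer",
]


def check_lesson_plan(plan):
    """Compare a lesson plan (event names in lesson order) with Gagné's sequence.

    Returns a tuple (missing, out_of_order):
      missing: events of the nine that never occur in the plan
      out_of_order: pairs of consecutive known events where the second one
                    comes earlier in Gagné's sequence than the first
    Names that are not among the nine events are ignored.
    """
    missing = [event for event in GAGNE_EVENTS if event not in plan]
    positions = [GAGNE_EVENTS.index(event) for event in plan if event in GAGNE_EVENTS]
    out_of_order = [
        (GAGNE_EVENTS[a], GAGNE_EVENTS[b])
        for a, b in zip(positions, positions[1:])
        if b < a
    ]
    return missing, out_of_order
```

For example, `check_lesson_plan(["gain attention", "present the material", "describe the goal"])` reports six missing events and flags the goal being stated after the material. The sketch treats the events as a strict sequence; real lessons often loop through practice and feedback several times, which this check would report as out of order.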

Design rules from computer science

You can look at the various modeling and diagram types of the Unified Modeling Language (UML) to get a glimpse of the most popular design language in computer science, with which many design problems can be modelled.

UML is a kind of qualitative modeling language with which various kinds of designs can be expressed. E.g. the following picture from the IMS LD Best Practice specification shows a diagram for competency-based learning with two major alternatives, advising-then-anticipating and anticipating-then-advising.


Read the UML activity diagram article to learn more about activity diagrams.

The design process

Modern design science is influenced by several fields, e.g. architecture or software engineering.

In educational technology research, researchers most often use some kind of "agile" and iterative design method for developing software. In this wiki, we don't cover (at least at the time of writing) software design methodology very much, but some of the approaches presented below have their origin in computer science.

Anyhow, most research subjects (not just software development!) can be tackled through a design science approach.

Alternatives

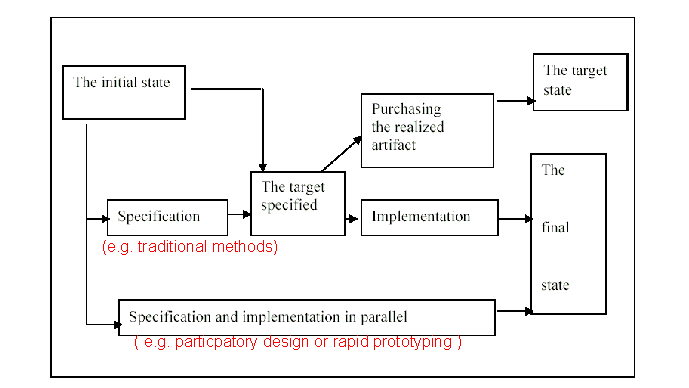

Pertti Järvinen (2004: 103) formulated several alternatives for the design process.

Basically, you must choose between a more top-down approach (also called the waterfall model) and a more (fully) participatory "agile" approach.

The participatory design model

Read participatory design for more information.

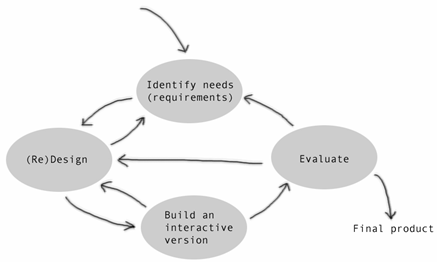

User-centred design:

- involves users as much as possible so that they can influence the design

- integrates knowledge and expertise from disciplines other than IT

- is highly iterative, so that testing can ensure that the design meets users’ requirements

Participatory design does not just mean asking users what they want or otherwise investigating their needs; it means that users actively participate in all design cycles.

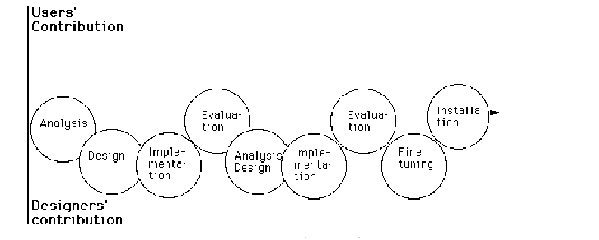

Traditional Information System prototyping approaches (after Grønbæk, 1991).

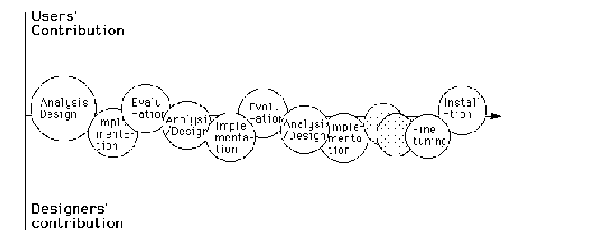

Cooperative prototype approach (after Grønbæk, 1991)

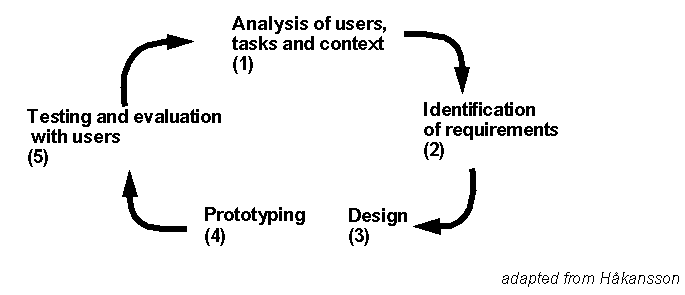

A similar model from Preece, Rogers and Sharp (2002), figure from Håkansson

Typical user analysis techniques

(Adapted from Håkansson; see the modules on qualitative data gathering and analysis)

- Questionnaires

- if the number of users is high

- if you know precisely what to ask (e.g. to identify user profiles, to test hypotheses gained from in-depth studies, etc.)

- Semi-structured Interviews

- to explore new issues

- to let participants develop argumentation (subjective causalities)

- Focus groups

- “group interview”, collecting multiple viewpoints

- Observations/Ethnography

- To observe work as it happens in its natural setting (observe task related workflow, interactions)

- to understand context (other interactions, conditions)

- Scenarios (for task description)

- An “informal narrative description”, e.g. write real stories that describe in detail how someone will use your software (do not try to present specifications here!)

- Cultural probes

- Alternative approach to understanding users and their needs, developed by Gaver et al. (1999)

Definition of requirements

Different types

- Functional requirements

- Environmental requirements

- Physical, social, organizational, technical

- User requirements

- Usability requirements

Building prototypes

Prototypes can be anything!

- Quote: "From paper-based storyboards to complex pieces of software: 3D paper models, cardboard mock-ups, hyperlinked screen shots, video simulations of a task, metal or plastic versions of the final product" (Håkansson).

Prototypes differ in nature according to the stage and evolution of the design process:

- Useful aid when discussing ideas (e.g. you only need a storyboard here)

- Useful for clarifying vague requirements (e.g. you only need a UI mockup)

- Useful for testing with users (e.g. you only need partial functionality of the implementation)

Design-based research

Evaluation

Evaluation criteria

Evaluation usually happens according to some "technological rule".

A good example is Merrill’s first principles of instruction:

- Does the courseware relate to real world problems?

- Does the courseware activate prior knowledge or experience?

- Does the courseware demonstrate what is to be learned?

- Can learners practice and apply acquired knowledge or skill?

- Are learners encouraged to integrate (transfer) the new knowledge or skill into their everyday life?
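Merrill's principles, phrased as yes/no questions, work as a simple review checklist. The sketch below assumes a reviewer records one true/false answer per principle; the short keys are common names for the five principles, while the function `review_courseware` is hypothetical and only counts criteria met (it is not a validated rating instrument).

```python
# Merrill's first principles, phrased as the yes/no questions listed above.
MERRILL_PRINCIPLES = {
    "problem-centred": "Does the courseware relate to real world problems?",
    "activation": "Does the courseware activate prior knowledge or experience?",
    "demonstration": "Does the courseware demonstrate what is to be learned?",
    "application": "Can learners practice and apply acquired knowledge or skill?",
    "integration": "Are learners encouraged to integrate (transfer) the new knowledge "
                   "or skill into their everyday life?",
}


def review_courseware(answers):
    """Summarize a checklist review.

    answers maps principle keys to True/False; keys that are absent count as False.
    Returns (number of principles met, list of unmet principle keys in checklist order).
    """
    unmet = [key for key in MERRILL_PRINCIPLES if not answers.get(key, False)]
    return len(MERRILL_PRINCIPLES) - len(unmet), unmet
```

The list of unmet principles is usually more useful for redesign than the count itself, since it points at the part of the courseware to revise.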

Another example is the LORI model, available as an online form consisting of rubrics, rating scales and comment fields.

Evaluation methodology

Design evaluation methodology draws on all major social science approaches, e.g. Håkansson cites:

- Heuristics

- Experiments

- Questionnaires

- Interviews

- Observations

- Think-aloud

Therefore:

- have a look at the other parts of this tutorial

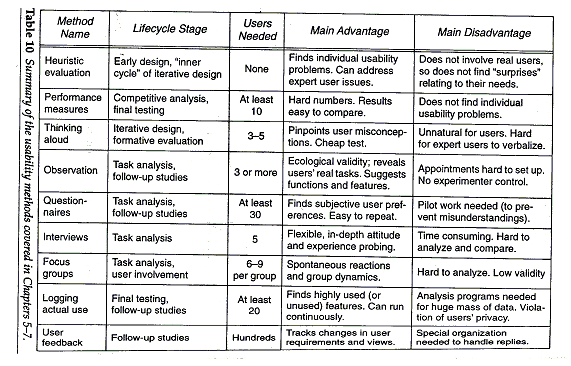

Nielsen’s (1993) usability methods

http://fdlwww.kub.nl/~krahmer/evaluation-introduction.ppt

Examples

V. Synteta’s master's thesis

Title: EVA_pm: Design and Development of a Scaffolding Environment For Students Projects

- Objectives (quotations from the thesis)

- This study is also an intervention for improving PBL efficiency. It entails the development of a Scaffolding Learning Environment (SLE) that is trying to learn from the lessons of the past and leverage from stresses on new technologies like XML and the World Wide Web making a lightweight and easily portable environment.

- Most of the research for improving PBL efficiency tries to remediate specific weaknesses of PBL, but doesn’t propose a complete system that supports a substantial student project through all its phases and for all contexts.

- Our key goal was to develop a constructivist environment and a method for scaffolding students’ projects (assignments) from their management up to the writing of their final report.

So, the objectives of this SLE are:

- to help students develop scientific inquiry and knowledge integration skills, to focus on important and investigate key issues;

- to support them directing investigations;

- to make students better manage the time and respect the time constraints;

- to overcome possible writer’s block, or even better to avoid it;

- to help students acquire knowledge on project design and research skills;

- to improve team management and collaboration (especially collaborative editing of student groups);

- to make students reflect on their work;

- to support the tutor’s role in a PBL approach;

- to facilitate monitoring and evaluation for the tutor;

- to help the tutor verify whether knowledge is being acquired;

- to motivate the peers, and eventually to distribute the results to bigger audiences.

- Research questions

- see above

- Method

- Field exploration

- A very important part of this research was to conceive a grammar that would model the work of an academic project. There are different sources of information that have been used to achieve this goal. (....)

- Survey of needs with a questionnaire:

- In order to gather precious information from the key persons involved in projects, like professors and their assistants, a questionnaire was articulated in such a way that would provoke a productive discussion, leading to comments and suggestions that would improve this research. The idea was to give the questionnaire to a small sample of the unit and stop the survey when the same answers came up again.

- The development method

- ... that has been adopted corresponds to participatory design and specifically to cooperative prototyping.

- Both "prototyping" and "user involvement" (or "user centered design") are concepts that have frequently been suggested to address central problems within system development in recent years. The problems faced in many projects reduce to the fact that the systems being developed do not meet the needs of users and their organizations. [...]
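The stopping rule used for the needs survey above ("stop the survey when the same answers came up again") is a form of saturation criterion. A minimal sketch, assuming each questionnaire response has already been coded into a set of themes; the function `saturation_point` and its `window` parameter (how many consecutive responses must bring nothing new) are hypothetical choices, not part of the thesis.

```python
def saturation_point(coded_responses, window=2):
    """Return the number of responses after which the survey can stop, or None.

    coded_responses: list of sets of themes, one set per response,
                     in the order the responses were collected
    window: how many consecutive responses must bring no new theme
    """
    seen = set()
    streak = 0  # consecutive responses that added no new theme
    for count, themes in enumerate(coded_responses, start=1):
        new_themes = set(themes) - seen
        seen |= new_themes
        streak = 0 if new_themes else streak + 1
        if streak >= window:
            return count
    return None  # saturation not reached: keep collecting responses
```

A larger `window` makes the rule more conservative; with a small sample like the one described, a window of two or three responses is a judgment call the researcher has to justify.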

F. Radeff’s master's thesis

Title: Le portail personnalisable comme interface d'optimisation de ressources académiques (The customizable portal as an interface for optimizing academic resources)

- Research questions

The main question of this work is: why do university libraries not offer customizable portals, given that these are likely a good solution for optimizing library resources in the digital age?

This question can be broken down into 3 sub-questions:

- why are there not more customizable portals?

- why do people not customize?

- does customization correspond to a need?

- Method, not clearly articulated, e.g.

- The chosen methodology is a literature review, in order to clarify the concepts, identify the main models and examine existing systems, accompanied by monitoring of the partial implementation of a prototype customizable portal.

- Since the partial implementation could not be completed, the monitoring was dropped and I broadened the scope of the literature review. The analysis is therefore qualitative, as the quantitative data initially planned could not be collected.

- The material gathered during the MyBCU prototype, as well as the experience acquired in 2001-2002 as webmaster [...], nevertheless allowed me to attempt to answer the initial questions.

Links

- Maria Håkansson, Intelligent System Design, IT-university, Göteborg, Design Methodology slides, Series of HTML Slides, retrieved 13:13, 7 October 2008 (UTC).

References

- Järvinen, P. (2004), On research methods, Tampere: Opinpajan Kirja. [Methods book for ICT-related issues, good but difficult and dense reading]

- Nielsen, J. (1993), Usability engineering, Boston: AP Professional.

More references about design science, etc.:

- See Design science and Design methodology (and follow up other links)