Latent semantic analysis and indexing

m (using an external editor) |

m (→Bibliography) |

||

| (12 intermediate revisions by the same user not shown) | |||

| Line 5: | Line 5: | ||

'''Latent Semantic Indexing (LSI)''' and '''Latent Semantic Analysis (LSA''' refer to a family of text indexing and retrieval methods. | '''Latent Semantic Indexing (LSI)''' and '''Latent Semantic Analysis (LSA''' refer to a family of text indexing and retrieval methods. | ||

We believe that both LSI and LSA refer to the same topic, but LSI is rather used in the context of web search, whereas LSA is the term used in the context of various forms of academic [[content analysis]]. - [[User:Daniel K. Schneider|Daniel K. Schneider]] 12: | We believe that both LSI and LSA refer to the same topic, but LSI is rather used in the context of web search, whereas LSA is the term used in the context of various forms of academic [[content analysis]]. - [[User:Daniel K. Schneider|Daniel K. Schneider]] 12:59, 12 March 2012 (CET) | ||

{{quotation|Latent Semantic Indexing (LSI) is an indexing and retrieval method that uses a mathematical technique called Singular value decomposition (SVD) to identify patterns in the relationships between the terms and concepts contained in an unstructured collection of text. LSI is based on the principle that words that are used in the same contexts tend to have similar meanings. A key feature of LSI is its ability to extract the conceptual content of a body of text by establishing associations between those terms that occur in similar contexts.}} (Deerwester et al, 1988 cited by [http://en.wikipedia.org/wiki/Latent_semantic_indexing Wikipedia] | {{quotation|Latent Semantic Indexing (LSI) is an indexing and retrieval method that uses a mathematical technique called Singular value decomposition (SVD) to identify patterns in the relationships between the terms and concepts contained in an unstructured collection of text. LSI is based on the principle that words that are used in the same contexts tend to have similar meanings. A key feature of LSI is its ability to extract the conceptual content of a body of text by establishing associations between those terms that occur in similar contexts.}} (Deerwester et al, 1988 cited by [http://en.wikipedia.org/wiki/Latent_semantic_indexing Wikipedia] | ||

{{quotation|Latent Semantic Analysis (LSA) is a theory and method for extracting and representing the contextual-usage meaning of words by statistical computations applied to a large corpus of text. The underlying idea is that the totality of information about all the word contexts in which a given word does and does not appear provides a set of mutual constraints that largely determines the similarity of meaning of words and set of words to each other. The adequacy of LSA's reflection of human knowledge has been established in a variety of ways. For example, its scores overlap those of humans on standard vocabulary and subject matter tests, it mimics human word sorting and category judgments, simulates word-word and passage-word lexical priming data and, as reported in Group Papers, accurately estimates passage coherence, learnability of passages by individual students and the quality and quantity of knowledge contained in an essay.}} ([http://lsa.colorado.edu/whatis.html What is LSA?], retrieved 12:10, 12 March 2012 (CET). | {{quotation|Latent Semantic Analysis (LSA) is a theory and method for extracting and representing the contextual-usage meaning of words by statistical computations applied to a large corpus of text. The underlying idea is that the totality of information about all the word contexts in which a given word does and does not appear provides a set of mutual constraints that largely determines the similarity of meaning of words and set of words to each other. The adequacy of LSA's reflection of human knowledge has been established in a variety of ways. 
For example, its scores overlap those of humans on standard vocabulary and subject matter tests, it mimics human word sorting and category judgments, simulates word-word and passage-word lexical priming data and, as reported in Group Papers, accurately estimates passage coherence, learnability of passages by individual students and the quality and quantity of knowledge contained in an essay.}} ([http://lsa.colorado.edu/whatis.html What is LSA?], retrieved 12:10, 12 March 2012 (CET). | ||

In more practical terms: {{quotation|Latent semantic analysis automatically extracts the concepts contained in text documents. In simplified statistical terms, it factor analyzes the text to extract concepts and then clusters the documents into similar categories based on the factor scores. This might be used to analyze interviews, open-ended questions on surveys, collections of email or study the literary style of authors. It is most useful when analyzing hundreds or thousands of documents, but can be applied to smaller numbers of short documents if they describe a small number of concepts.}} ([https://oit.utk.edu/research/Pages/software.aspx University of Tennessee, OIT], retrieved 14 march 2012. | |||

== The principle == | |||

Evangelopoulos & Visinescu (2012) define three major steps for LSA: | |||

0) Documents should be prepared in the following way: | |||

* Exclude trivial words as well as low-frequency terms | |||

* Conflate terms with techniques like stemming or lemmatization. | |||

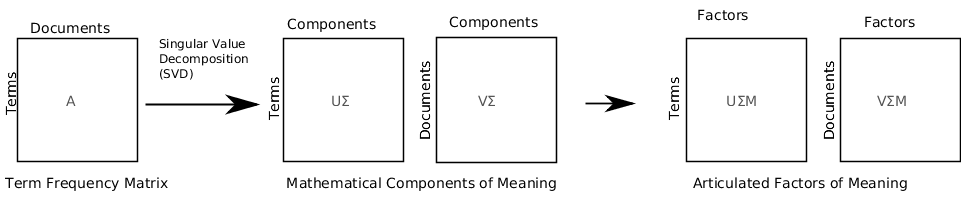

1) A term-frequency matrix (A) must be created that includes the occurences of each term in each document. | |||

2) Singular Value Decomposition (SVD): | |||

* Extract least-square principal components for two sets of variables: set of terms and set of documents. | |||

* SVD products include the term eigenvectors U, the document eigenvectors V, and the diagonal matrix of singular values Σ. | |||

3) From these, factor loadings can be produced for terms UΣ and documents VΣ | |||

[[image:LSA-schema-evangelopoulos-2012.svg|frame|none|Steps in Latent Semantic Analysis. Figure redrawn from Evangelopoulos & Visinescu (2012)]] | |||

== Software == | == Software == | ||

| Line 15: | Line 35: | ||

Free '''latent semantic analysis''' and easy to use software is difficult to find. However, there are a number of good packages for the tech savy, e.g.: | Free '''latent semantic analysis''' and easy to use software is difficult to find. However, there are a number of good packages for the tech savy, e.g.: | ||

* [http://cran.at.r-project.org/web/packages/lsa/index.html LSA package for R] developed by Fridolin Wild. {{quotation|The basic idea of latent semantic analysis (LSA) is, that text do have a higher order ( | * [http://radimrehurek.com/gensim/ Gensim - Topic Modelling for Humans], implemented in Python. {{quotation|Gensim aims at processing raw, unstructured digital texts (“plain text”). The algorithms in gensim, such as Latent Semantic Analysis, Latent Dirichlet Allocation or Random Projections, discover semantic structure of documents, by examining word statistical co-occurrence patterns within a corpus of training documents. These algorithms are unsupervised, which means no human input is necessary – you only need a corpus of plain text documents.}} ([http://radimrehurek.com/gensim/intro.html introduction]), retrieved 12:10, 12 March 2012 (CET) | ||

* [http://cran.at.r-project.org/web/packages/lsa/index.html LSA package for R] developed by Fridolin Wild. {{quotation|The basic idea of latent semantic analysis (LSA) is, that text do have a higher order (latent semantic) structure which, however, is obscured by word usage (e.g. through the use of synonyms or polysemy). By using conceptual indices that are derived statistically via a truncated singular value decomposition (a two-mode factor analysis) over a given document-term matrix, this variability problem can be overcome.}} ([http://cran.at.r-project.org/web/packages/lsa/index.html Latent Semantic Analysis], retrieved 12:59, 12 March 2012 (CET)) | |||

* [http://www.d.umn.edu/~tpederse/senseclusters.html SenseClusters] by Ted Pedersen et al. This {{quotation|is a package of (mostly) Perl programs that allows a user to cluster similar contexts together using unsupervised knowledge-lean methods. These techniques have been applied to word sense discrimination, email categorization, and name discrimination. The supported methods include the native SenseClusters techniques and Latent Semantic Analysis. }} ([http://www.d.umn.edu/~tpederse/senseclusters.html SenseClusters], retrieved 12:10, 12 March 2012 (CET). | * [http://www.d.umn.edu/~tpederse/senseclusters.html SenseClusters] by Ted Pedersen et al. This {{quotation|is a package of (mostly) Perl programs that allows a user to cluster similar contexts together using unsupervised knowledge-lean methods. These techniques have been applied to word sense discrimination, email categorization, and name discrimination. The supported methods include the native SenseClusters techniques and Latent Semantic Analysis. }} ([http://www.d.umn.edu/~tpederse/senseclusters.html SenseClusters], retrieved 12:10, 12 March 2012 (CET). | ||

* [http://code.google.com/p/airhead-research/S-Space Package] a Java package by Jurgens David and Keith Stevens. {{quotation|The S-Space Package is a collection of algorithms for building Semantic Spaces as well as a highly-scalable library for designing new distributional semantics algorithms. Distributional algorithms process text corpora and represent the semantic for words as high dimensional feature vectors. These approaches are known by many names, such as word spaces, semantic spaces, or distributed semantics and rest upon the Distributional Hypothesis: words that appear in similar contexts have similar meanings.}} ([http://code.google.com/p/airhead-research/ Project overview], retrieved 12: | |||

* [http://code.google.com/p/airhead-research/ S-Space Package] a Java package by Jurgens David and Keith Stevens. {{quotation|The S-Space Package is a collection of algorithms for building Semantic Spaces as well as a highly-scalable library for designing new distributional semantics algorithms. Distributional algorithms process text corpora and represent the semantic for words as high dimensional feature vectors. These approaches are known by many names, such as word spaces, semantic spaces, or distributed semantics and rest upon the Distributional Hypothesis: words that appear in similar contexts have similar meanings.}} ([http://code.google.com/p/airhead-research/ Project overview], retrieved 12:59, 12 March 2012 (CET)) | |||

* [http://code.google.com/p/lsa-lda/wiki/LDAviaGibbsHOWTO lsa-lda] | |||

* [http://code.google.com/p/semanticvectors/ Semantic Vectors] can be used to find related terms and concepts to a target term. {{Quotation|creates semantic WordSpace models from free natural language text. Such models are designed to represent words and documents in terms of underlying concepts. They can be used for many semantic (concept-aware) matching tasks such as automatic thesaurus generation, knowledge representation, and concept matching}}. [http://code.google.com/p/semanticvectors/ Semantic Vectors], retrieved 12:59, 12 March 2012 (CET)). This not LSI/LSA, but similar if we understand right... | |||

'''Commercial software''' | |||

* [http://www.sas.com/text-analytics/text-miner/index.html SAS Text Miner]. Quote: {{quotation|provides a rich suite of linguistic and analytical modeling tools for discovering and extracting knowledge from across text collections.}} | |||

== Links == | == Links == | ||

| Line 38: | Line 68: | ||

== Bibliography == | == Bibliography == | ||

* Evangelopoulos, N., Zhang, X., and Prybutok, V. Latent semantic analysis: Five methodological recommendations. European Journal of Information Systems 21, 1 (Jan. 2012), 70–86. | |||

* Graesser, A. C. , P. Wiemer-Hastings, K. Wiemer-Hastings, D. Harter & N. Person and the TRG, Using latent semantic analysis to evaluate the contributions of students in AutoTutor, Interactive Learning Environments, 8 (2000) 128-148. | |||

* Landauer, T. K., & Dumais, S. T. (1996). How come you know so much? From practical problem to theory. In D. Hermann, C. McEvoy, M. Johnson, & P. Hertel (Eds.), Basic and applied memory: Memory in context. Mahwah, NJ: Erlbaum, 105-126. | * Landauer, T. K., & Dumais, S. T. (1996). How come you know so much? From practical problem to theory. In D. Hermann, C. McEvoy, M. Johnson, & P. Hertel (Eds.), Basic and applied memory: Memory in context. Mahwah, NJ: Erlbaum, 105-126. | ||

* Landauer, T. K., & Dumais, S. T. (1997). A solution to Plato's problem: The Latent Semantic Analysis theory of the acquisition, induction, and representation of knowledge. Psychological Review, 104, 211-240. | * Landauer, T. K., & Dumais, S. T. (1997). A solution to Plato's problem: The Latent Semantic Analysis theory of the acquisition, induction, and representation of knowledge. Psychological Review, 104, 211-240. | ||

* Landauer, T. K.; P. W. Foltz & D. Laham, An introduction to latent semantic analysis, ''Discourse processes'', 25 (1998) 259-284. | |||

* Landauer, T. (2007). LSA as a theory of meaning. In Handbook of Latent Semantic Analysis, T. Landauer, D. McNamara, S. Dennis, and W. Kintsch, Eds. Lawrence Erlbaum Associates, Mahwah, NJ, 2007, 3–32. | |||

* Landauer Thomas, Peter W. Foltz, & Darrell Laham (1998). "Introduction to Latent Semantic Analysis" (PDF). Discourse Processes 25 (2–3): 259–284. http://dx.doi.org/10.1080/01638539809545028 - [http://lsa.colorado.edu/papers/dp1.LSAintro.pdf "PDF] | * Landauer Thomas, Peter W. Foltz, & Darrell Laham (1998). "Introduction to Latent Semantic Analysis" (PDF). Discourse Processes 25 (2–3): 259–284. http://dx.doi.org/10.1080/01638539809545028 - [http://lsa.colorado.edu/papers/dp1.LSAintro.pdf "PDF] | ||

| Line 49: | Line 87: | ||

* Jurgens, David and Keith Stevens, (2010). The S-Space Package: An Open Source Package for Word Space Models. In System Papers of the Association of Computational Linguistics. [http://cs.ucla.edu/~jurgens/papers/jurgens_and_stevens_2010_S-Space_Package.pdf PDF] | * Jurgens, David and Keith Stevens, (2010). The S-Space Package: An Open Source Package for Word Space Models. In System Papers of the Association of Computational Linguistics. [http://cs.ucla.edu/~jurgens/papers/jurgens_and_stevens_2010_S-Space_Package.pdf PDF] | ||

* Wild, Fridolin and Christina Stahl, (2007). Investigating Unstructured Texts with Latent Semantic Analysis, in Lenz, Hans -J. (ed). Advances in Data Analysis, | * McCarthy, Philip M.; Adam M. Renner, Michael G. Duncan, Nicholas D. Duran, Erin J. Lightman, Danielle S. McNamara. 2008. Identifying topic sentencehood. Behavior Research Methods 40:3, 647-664. | ||

Studies in Classification, Data Analysis, and Knowledge Organization, Advances in Data Analysis, Part V, 383-390, DOI: 10.1007/978-3-540-70981-7_43 | |||

* Penumatsa, Phanni; Matthew Ventura, Arthur C. Graesser, Max Louwerse, Xiangen Hu, Zhiqiang Cai, And Donald R. Franceschetti (2006). The Right Threshold Value: What Is The Right Threshold Of Cosine Measure When Using Latent Semantic Analysis For Evaluating Student Answers? International Journal on Artificial Intelligence Tools 2006 15:05, 767-777 [http://dx.doi.org/10.1142/S021821300600293X DOI10.1142/S021821300600293X] | |||

* Sidorova, A., Evangelopoulos, N., Valacich, J., and Ramakrishnan, T. Uncovering the intellectual core of the information systems discipline. MIS Quarterly 32, 3 (Sept. 2008), 467–482. | |||

* Wiemer-Hastings, P.; K. Wiemer-Hastings, A. Graesser & TRG (1999). Improving an intelligent tutor's comprehension of students with latent semantic analysis, In S. Lajoie & M. Vivet (Eds.), Artificial intelligence in education, Amsterdam: IOS Press. 535-542. | |||

* Wild, Fridolin and Christina Stahl, (2007). Investigating Unstructured Texts with Latent Semantic Analysis, in Lenz, Hans -J. (ed). Advances in Data Analysis, Studies in Classification, Data Analysis, and Knowledge Organization, Advances in Data Analysis, Part V, 383-390, DOI: 10.1007/978-3-540-70981-7_43 | |||

[[Category: Analytics]] | [[Category: Analytics]] | ||

[[Category: Research methodologies]] | [[Category: Research methodologies]] | ||

Latest revision as of 19:30, 1 November 2012

Introduction

Latent Semantic Indexing (LSI) and Latent Semantic Analysis (LSA) refer to a family of text indexing and retrieval methods.

We believe that LSI and LSA refer to the same topic, but LSI is mostly used in the context of web search, whereas LSA is the term used in the context of various forms of academic content analysis. - Daniel K. Schneider 12:59, 12 March 2012 (CET)

“Latent Semantic Indexing (LSI) is an indexing and retrieval method that uses a mathematical technique called Singular value decomposition (SVD) to identify patterns in the relationships between the terms and concepts contained in an unstructured collection of text. LSI is based on the principle that words that are used in the same contexts tend to have similar meanings. A key feature of LSI is its ability to extract the conceptual content of a body of text by establishing associations between those terms that occur in similar contexts.” (Deerwester et al., 1988, cited by Wikipedia)

“Latent Semantic Analysis (LSA) is a theory and method for extracting and representing the contextual-usage meaning of words by statistical computations applied to a large corpus of text. The underlying idea is that the totality of information about all the word contexts in which a given word does and does not appear provides a set of mutual constraints that largely determines the similarity of meaning of words and set of words to each other. The adequacy of LSA's reflection of human knowledge has been established in a variety of ways. For example, its scores overlap those of humans on standard vocabulary and subject matter tests, it mimics human word sorting and category judgments, simulates word-word and passage-word lexical priming data and, as reported in Group Papers, accurately estimates passage coherence, learnability of passages by individual students and the quality and quantity of knowledge contained in an essay.” (What is LSA?, retrieved 12:10, 12 March 2012 (CET))

In more practical terms: “Latent semantic analysis automatically extracts the concepts contained in text documents. In simplified statistical terms, it factor analyzes the text to extract concepts and then clusters the documents into similar categories based on the factor scores. This might be used to analyze interviews, open-ended questions on surveys, collections of email or study the literary style of authors. It is most useful when analyzing hundreds or thousands of documents, but can be applied to smaller numbers of short documents if they describe a small number of concepts.” (University of Tennessee, OIT, retrieved 14 March 2012)

The principle

Evangelopoulos & Visinescu (2012) define three major steps for LSA, preceded by a document preparation step (numbered 0 below):

0) Documents should be prepared in the following way:

- Exclude trivial words as well as low-frequency terms

- Conflate terms with techniques like stemming or lemmatization.

1) A term-frequency matrix (A) must be created that records the number of occurrences of each term in each document.

2) Singular Value Decomposition (SVD):

- Extract least-squares principal components for two sets of variables: the set of terms and the set of documents.

- SVD products include the term eigenvectors U, the document eigenvectors V, and the diagonal matrix of singular values Σ.

3) From these, factor loadings can be produced for terms (UΣ) and for documents (VΣ).
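The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure of any package listed on this page: the toy corpus, the stop-word list, the "appears in at least two documents" cut-off and the choice of k = 2 retained dimensions are all hypothetical, and stemming is left out for brevity. NumPy's `numpy.linalg.svd` is used for the decomposition.

```python
import re
from collections import Counter

import numpy as np

# Hypothetical toy corpus and stop-word list (illustration only).
documents = [
    "The cat sat on the mat",
    "A cat and a dog sat together",
    "Stocks fell as the market closed",
    "The market rallied and stocks rose",
]
stop_words = {"the", "a", "and", "on", "as"}


def tokenize(text):
    """Step 0: lowercase, split into words, drop trivial (stop) words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in stop_words]


tokens = [tokenize(d) for d in documents]

# Step 0 (continued): drop low-frequency terms. Assumed cut-off: a term
# must appear in at least two documents to be kept.
doc_freq = Counter(t for doc in tokens for t in set(doc))
vocab = sorted(t for t, n in doc_freq.items() if n >= 2)

# Step 1: term-frequency matrix A (rows = terms, columns = documents).
A = np.array([[doc.count(t) for doc in tokens] for t in vocab], dtype=float)

# Step 2: singular value decomposition A = U Σ V^T.
U, s, Vt = np.linalg.svd(A, full_matrices=False)

# Step 3: factor loadings for terms (UΣ) and documents (VΣ),
# keeping only the k largest singular values.
k = 2
term_loadings = U[:, :k] * s[:k]
doc_loadings = Vt[:k].T * s[:k]


def cosine(x, y):
    """Cosine similarity between two loading vectors."""
    return float(x @ y / (np.linalg.norm(x) * np.linalg.norm(y)))


print("vocabulary:", vocab)
print("doc 0 vs doc 1:", round(cosine(doc_loadings[0], doc_loadings[1]), 3))
print("doc 0 vs doc 2:", round(cosine(doc_loadings[0], doc_loadings[2]), 3))
```

In this toy corpus the two "cat" documents end up with nearly identical loadings, while a "cat" document and a "market" document are close to orthogonal. On real data, k is usually chosen much smaller than the number of terms, and the term-frequency matrix is often weighted (for example with TF-IDF) before the decomposition.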

Software

Free, easy-to-use latent semantic analysis software is difficult to find. However, there are a number of good packages for the tech-savvy, e.g.:

- Gensim - Topic Modelling for Humans, implemented in Python. “Gensim aims at processing raw, unstructured digital texts (“plain text”). The algorithms in gensim, such as Latent Semantic Analysis, Latent Dirichlet Allocation or Random Projections, discover semantic structure of documents, by examining word statistical co-occurrence patterns within a corpus of training documents. These algorithms are unsupervised, which means no human input is necessary – you only need a corpus of plain text documents.” (introduction), retrieved 12:10, 12 March 2012 (CET)

- LSA package for R developed by Fridolin Wild. “The basic idea of latent semantic analysis (LSA) is, that text do have a higher order (latent semantic) structure which, however, is obscured by word usage (e.g. through the use of synonyms or polysemy). By using conceptual indices that are derived statistically via a truncated singular value decomposition (a two-mode factor analysis) over a given document-term matrix, this variability problem can be overcome.” (Latent Semantic Analysis, retrieved 12:59, 12 March 2012 (CET))

- SenseClusters by Ted Pedersen et al. This “is a package of (mostly) Perl programs that allows a user to cluster similar contexts together using unsupervised knowledge-lean methods. These techniques have been applied to word sense discrimination, email categorization, and name discrimination. The supported methods include the native SenseClusters techniques and Latent Semantic Analysis.” (SenseClusters, retrieved 12:10, 12 March 2012 (CET))

- S-Space Package, a Java package by David Jurgens and Keith Stevens. “The S-Space Package is a collection of algorithms for building Semantic Spaces as well as a highly-scalable library for designing new distributional semantics algorithms. Distributional algorithms process text corpora and represent the semantic for words as high dimensional feature vectors. These approaches are known by many names, such as word spaces, semantic spaces, or distributed semantics and rest upon the Distributional Hypothesis: words that appear in similar contexts have similar meanings.” (Project overview, retrieved 12:59, 12 March 2012 (CET))

- Semantic Vectors can be used to find terms and concepts related to a target term. It “creates semantic WordSpace models from free natural language text. Such models are designed to represent words and documents in terms of underlying concepts. They can be used for many semantic (concept-aware) matching tasks such as automatic thesaurus generation, knowledge representation, and concept matching” (Semantic Vectors, retrieved 12:59, 12 March 2012 (CET)). This is not LSI/LSA, but it is similar, as far as we understand.

Commercial software

- SAS Text Miner “provides a rich suite of linguistic and analytical modeling tools for discovering and extracting knowledge from across text collections.”

Links

Introductions

- Patterns in Unstructured Data, A Presentation to the Andrew W. Mellon Foundation by Clara Yu, John Cuadrado, Maciej Ceglowski, J. Scott Payne (undated). A good introduction to LSI and its use in search engines.

- Latent Semantic Analysis (Infoviz)

Technical introductions

- Latent semantic indexing (Wikipedia)

- Latent semantic analysis (Wikipedia)

Bibliography

- Evangelopoulos, N., Zhang, X., and Prybutok, V. Latent semantic analysis: Five methodological recommendations. European Journal of Information Systems 21, 1 (Jan. 2012), 70–86.

- Graesser, A. C., P. Wiemer-Hastings, K. Wiemer-Hastings, D. Harter & N. Person and the TRG, Using latent semantic analysis to evaluate the contributions of students in AutoTutor, Interactive Learning Environments, 8 (2000) 128-148.

- Landauer, T. K., & Dumais, S. T. (1996). How come you know so much? From practical problem to theory. In D. Hermann, C. McEvoy, M. Johnson, & P. Hertel (Eds.), Basic and applied memory: Memory in context. Mahwah, NJ: Erlbaum, 105-126.

- Landauer, T. K., & Dumais, S. T. (1997). A solution to Plato's problem: The Latent Semantic Analysis theory of the acquisition, induction, and representation of knowledge. Psychological Review, 104, 211-240.

- Landauer, T. K.; P. W. Foltz & D. Laham, An introduction to latent semantic analysis, Discourse processes, 25 (1998) 259-284.

- Landauer, T. (2007). LSA as a theory of meaning. In Handbook of Latent Semantic Analysis, T. Landauer, D. McNamara, S. Dennis, and W. Kintsch, Eds. Lawrence Erlbaum Associates, Mahwah, NJ, 2007, 3–32.

- Landauer, Thomas, Peter W. Foltz, & Darrell Laham (1998). "Introduction to Latent Semantic Analysis" (PDF). Discourse Processes 25 (2–3): 259–284. http://dx.doi.org/10.1080/01638539809545028

- Dumais, Susan T. (2005). "Latent Semantic Analysis". Annual Review of Information Science and Technology 38: 188.

- Jurgens, David and Keith Stevens, (2010). The S-Space Package: An Open Source Package for Word Space Models. In System Papers of the Association of Computational Linguistics. PDF

- McCarthy, Philip M.; Adam M. Renner, Michael G. Duncan, Nicholas D. Duran, Erin J. Lightman, Danielle S. McNamara. 2008. Identifying topic sentencehood. Behavior Research Methods 40:3, 647-664.

- Penumatsa, Phanni; Matthew Ventura, Arthur C. Graesser, Max Louwerse, Xiangen Hu, Zhiqiang Cai, and Donald R. Franceschetti (2006). The Right Threshold Value: What Is The Right Threshold Of Cosine Measure When Using Latent Semantic Analysis For Evaluating Student Answers? International Journal on Artificial Intelligence Tools 2006 15:05, 767-777, DOI: 10.1142/S021821300600293X

- Sidorova, A., Evangelopoulos, N., Valacich, J., and Ramakrishnan, T. Uncovering the intellectual core of the information systems discipline. MIS Quarterly 32, 3 (Sept. 2008), 467–482.

- Wiemer-Hastings, P.; K. Wiemer-Hastings, A. Graesser & TRG (1999). Improving an intelligent tutor's comprehension of students with latent semantic analysis, In S. Lajoie & M. Vivet (Eds.), Artificial intelligence in education, Amsterdam: IOS Press. 535-542.

- Wild, Fridolin and Christina Stahl, (2007). Investigating Unstructured Texts with Latent Semantic Analysis, in Lenz, Hans-J. (ed.), Advances in Data Analysis, Studies in Classification, Data Analysis, and Knowledge Organization, Part V, 383-390, DOI: 10.1007/978-3-540-70981-7_43