Technology-enhanced assessment

Centre for Research in Applied Measurement and Evaluation, University of Alberta

Background and Definition

Technology-enhanced assessment refers to innovative assessment practices and systems that use technology to support the management and delivery of assessment (O'Leary et al., 2018). For example, technology-enhanced assessment uses a wide range of technologies to deliver questions (e.g., via computers, laptops, tablets, and smartphones), to let students interact with questions (e.g., watch videos, take digital notes, view closed captions, highlight and zoom in on text), and to provide prompt feedback and score reporting (e.g., automated essay scoring). Various types of technology-enhanced assessments have been developed for a broad range of educational purposes and settings, such as formative or summative assessment, classroom or large-scale assessment, and self- or peer-assessment. The following are examples of well-established technology-enhanced assessments:

- Online self-diagnosis assessments, such as online math self-assessment tools

- Large-scale, high-stakes digital assessments, such as the PISA Collaborative Problem Solving Assessment and the NAEP Digitally Based Assessment

- In-class academic readiness tests, such as the Renaissance Learning Star Reading Test

Types and Benefits

Compared with traditional paper-and-pencil tests, technology-enhanced assessments offer several benefits to both students and teachers, including reduced testing costs, an improved scoring process, flexible test schedules, and prompt diagnostic reports (Bennett, 2015). Technology-enhanced assessments are often categorized by how far they have advanced, in other words, how much they differ from traditional paper-based assessments, as described in Figure 1.

Bennett (2015) categorized technology-enhanced assessments into three stages. The first stage refers to assessments that simply present traditional questions (e.g., multiple-choice questions) on a computer screen. Assessment systems in this stage often allow students to enter answers using electronic devices such as a keyboard or a tablet. Systems in the second stage often present innovative question types to maximize the amount and quality of interaction between students and questions. For example, questions can be presented in multiple formats, such as video, hypermedia, or a simulation environment. Such questions aim to provide an authentic assessment environment in which students can apply their knowledge to real-life scenarios. In the last stage, decisions about assessment design, content, and format are informed by learner models (or student models), so that a more interactive assessment environment can be provided for students.

Design Considerations

Ripley (2009) introduced several key elements that have to be carefully monitored when transitioning from a traditional paper-based assessment to a technology-enhanced assessment. Three critical elements are discussed below.

Accessibility Arrangements

“Carefully designed assessments should be able to provide universal access to students of all learning and language backgrounds” (NAEP, 2018).

A technology-enhanced assessment often provides increased accommodations for students with different backgrounds using new technological aids. For example, students can request alternative item delivery formats (e.g., text-to-speech) if necessary. Further, some assessments follow a universal design by providing adjustment options for every question, so that students can access exams without any additional accommodation requests. Commonly provided accessibility arrangement options are as follows:

- Alternative questions, alternative format (font size, large screen), extra time & rest break, reading to students, scribe, assistive technology (or software)

- One-on-one testing

- Universal design (self-adjustable font size, text-to-speech control, contrast change for readability, highlighter tools for reading)

Question Types

Numerous innovative question types have been introduced and explored to improve students' assessment experiences. Commonly introduced item types include:

- Questions with multimedia or hypermedia, such as audio and video

- Questions that allow the use of digital tools (such as an onscreen calculator and dictionary)

- Problem-solving questions with realistic scenarios

- Role-play or dialogue questions

- Interactive Computer Tasks

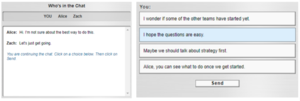

For example, the Programme for International Student Assessment (or PISA) introduced collaborative problem-solving questions in PISA 2015. The questions required students to participate in a role-play scenario as the leader of a group in order to complete the provided problem-solving tasks. Figure 2 shows one of the example scenarios and questions. In this question, students were expected to lead group members, Alice and Zach, by selecting appropriate actions.

The National Assessment of Educational Progress (or NAEP) introduced interactive computer tasks in NAEP 2009. The tasks required students to conduct science experiments in a virtual environment to answer questions. Figure 3 shows example experiment scenarios, where students were expected to interact with virtual equipment to conduct science experiments. Following the experiments, students were given several questions to report the experiment results and demonstrate their understanding.

Technology and Security Issues & Solutions

Technology-related issues should be carefully monitored to provide seamless assessment experiences. For example, students might have trouble accessing the test interface due to a slow Internet connection or an unsupported browser version. Therefore, thorough pre-evaluations of screens, headphones, earphones, earbuds, keyboards, pointing devices, and network connections are required. In addition, various techniques have been introduced recently to promote a secure remote testing environment, such as examinee authentication, which verifies the identity of the student using voiceprints, retinal scans, or facial recognition (Weiner & Necus, 2017).

Recently, more advanced technologies have been introduced to verify learners while minimizing intrusion into their assessment experience. Coursera, one of the leading online course providers, has introduced keystroke dynamics to verify its learners (Maas et al., 2014). Coursera uses two biometric authentication approaches: manual face-photo matching and typing pattern recognition. While manual face-photo matching between students' ID and their webcam photos provided highly reliable results, the system was less accessible for students without certain devices (e.g., a webcam). In contrast, the keystroke verification system could authenticate students with minimal intrusion into their learning experience by analyzing their typing patterns. The system records the raw stream of keypress data (e.g., the rhythm and cadence of keypress events) and converts it into a set of time-series features (Maas et al., 2014). In conjunction with machine learning classification approaches, the keystroke features could help implement a highly efficient identification system. In addition, Maas et al. (2014) reported that verified certificate programs could greatly motivate learners, leading to higher performance and success rates.
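The conversion from raw keypress events to time-series features can be illustrated with a minimal Python sketch. It is not Coursera's implementation. The `KeyEvent` record, the function names, and the choice of dwell time (how long a key is held) and flight time (the gap between releasing one key and pressing the next) are assumptions made for illustration, since the source does not specify which features are used.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class KeyEvent:
    """Hypothetical record of a single keypress (not from the source)."""
    key: str           # which key was pressed
    press_ms: float    # key-down timestamp in milliseconds
    release_ms: float  # key-up timestamp in milliseconds


def keystroke_features(events):
    """Turn a raw keypress stream into two timing series.

    dwell:  how long each key was held down
    flight: gap between releasing one key and pressing the next
    """
    ordered = sorted(events, key=lambda e: e.press_ms)
    dwell = [e.release_ms - e.press_ms for e in ordered]
    flight = [
        nxt.press_ms - cur.release_ms
        for cur, nxt in zip(ordered, ordered[1:])
    ]
    return {"dwell": dwell, "flight": flight}


def summarize(features):
    """Average each non-empty series into a single value per feature."""
    return {name: mean(values) for name, values in features.items() if values}


# Made-up sample data: a learner typing "t", "e", "a".
sample = [
    KeyEvent("t", 0.0, 80.0),
    KeyEvent("e", 120.0, 190.0),
    KeyEvent("a", 260.0, 350.0),
]
features = keystroke_features(sample)
summary = summarize(features)
```

In a full system, summaries like these (or the complete series) would be passed to a classifier trained on each learner's enrolment samples; that step is omitted here.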

Links

- Technology-enhanced assessment design process

- Introducing Technology-enhanced assessment

- PISA 2015 Collaborative Problem Solving Items

- NAEP Task Library

References

- Bennett, R. E. (2015). The changing nature of educational assessment. Review of Research in Education, 39(1), 370-407.

- NAEP (2018). Digitally Based Assessments. Retrieved January 31, 2019, from https://nces.ed.gov/nationsreportcard/dba/

- Maas, A., Heather, C., Do, C. T., Brandman, R., Koller, D., & Ng, A. (2014). Offering Verified Credentials in Massive Open Online Courses: MOOCs and technology to advance learning and learning research (Ubiquity symposium). Ubiquity, 2014(May), 2.

- O'Leary, M., Scully, D., Karakolidis, A., & Pitsia, V. (2018). The state‐of‐the‐art in digital technology‐based assessment. European Journal of Education, 53(2), 160-175.

- Ripley, M. (2009). Transformational computer-based testing. The transition to computer-based assessment, 92.

- Weiner, J., & Necus, I. (2017). Infrastructure to Support Technology-Enhanced Global Assessment. In J. Scott, D. Bartram, & D. Reynolds (Eds.), Next Generation Technology-Enhanced Assessment: Global Perspectives on Occupational and Workplace Testing (Educational and Psychological Testing in a Global Context, pp. 71-101). Cambridge: Cambridge University Press. doi:10.1017/9781316407547.005